I have been writing a chess engine with a friend and the engine itself is really good already (2700+ CCRL). We had the idea to use a neural network to have a better evaluation of positions.

Input to the network

because the output of the network greatly depends on which side has to move, we use the first half of the inputs to parse the position of who has to move and the second half for the opponent. In fact, we have for each piece and for each square an input which would result in 12x64 inputs. We had the idea to also include the opponent king position. So each side had 6x64 inputs and this for each square the opponent king can be -> 6x64x64. In total, this results in 12x64x64 binary input values where at maximum 32 are set.

Layers

The next layer consists of 64neurons where the first 32 neurons only accept inputs from the first half of the input features and the last 32 only accept inputs from the second half of the input features.

It follows a layer with 32 neurons fully connected and the output layer has only a single output.

Activation function

We use LeakyReLU at both hidden layers and a linear activation function at the output.

Training

Initially, I wanted to train the network on about 1 million positions yet this is taking ages. The position itself has a target value in the range of -20 to 20. I am using stochastic gradient descent using ADAM with a learning rate of 0.0001 and MSE as the loss function.

The problem I have is that this is taking a very very long time to even train those 1 million positions. The target is to later train on 300M positions.

I am not sure where I could improve the training progress.

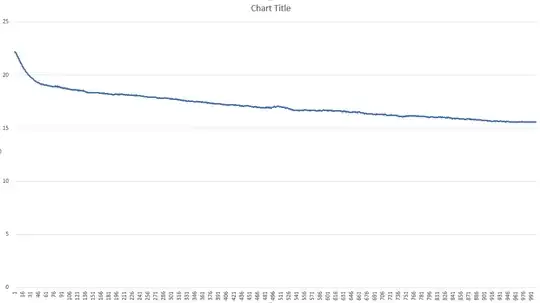

Below are the graphs which show the training progress over 1000 iterations

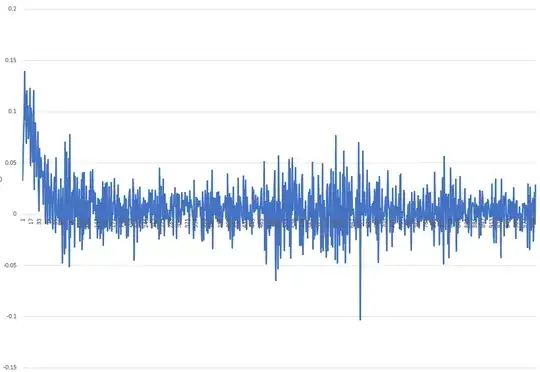

The change for each iteration looks like this:

I hope someone could give me one or two hints on what I could improve in order to train the network faster. I am very happy for any advice!

Greetings, Finn

Edit 1

As suggested, I should convert my network to keras. I am having problems getting the sparse input to run.

import keras

from keras.layers import Input, Concatenate, Dense, LeakyReLU

from keras.models import Model

from keras import backend as K

import numpy as np

# trainX1 = tf.SparseTensor(indices=[[0,0], [0,1]], values=[1, 2], dense_shape=[1,24576])

# trainX2 = tf.SparseTensor(indices=[[0,0], [0,1]], values=[1, 2], dense_shape=[1,24576])

#

# trainY = np.random.rand(1)

trainX1 = np.random.random((10000,24576))

trainX2 = np.random.random((10000,24576))

trainY = np.zeros((10000,1))

#input for player to move

activeInput = Input((64*64*6,))

inactiveInput = Input((64*64*6,))

denseActive = Dense(64)(activeInput)

denseInactive = Dense(64)(inactiveInput)

act1 = LeakyReLU(alpha=0.1)(denseActive)

act2 = LeakyReLU(alpha=0.1)(denseInactive)

concat_layer= Concatenate()([act1, act2])

dense1 = Dense(32)(concat_layer)

act3 = LeakyReLU(alpha=0.1)(dense1)

output = Dense(1, activation="linear")(act3)

model = Model(inputs=[activeInput, inactiveInput], outputs=output)

model.compile(loss='mse', optimizer='adam', metrics=['accuracy'])

# print(model.summary())

print(model.fit([trainX1,trainX2], trainY, epochs=1))

If I use sparse=True for the Dense layer, it will throw some exceptions. I am happy if someone could help me creating sparse input vectors.