Consider a linear regression (based on least squares) on two predictors including an interaction term: $$Y=(b_0+b_1X_1)+(b_2+b_3X_1)X_2$$

Here $b_2$ is the conditional effect of $X_2$ when $X_1=0$. A common mistake is to interpret $b_2$ as the main effect of $X_2$, i.e. the average effect of $X_2$ over all possible values of $X_1$.

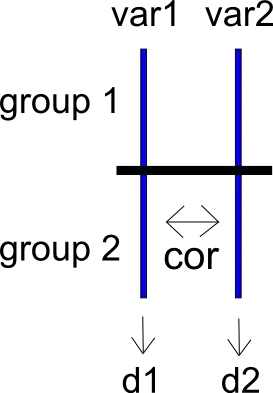

Now let's assume that $X_1$ has been centered, that is, $\overline{X_1}=0$. It then becomes true that $b_2$ is the average effect of $X_2$ over all observed values of $X_1$, in the sense that $\overline{b_2+b_3X_1}=b_2+b_3\overline{X_1}=b_2$. Under these conditions, the meaning given to $b_2$ is nearly indistinguishable from the meaning that we would give to the effect of $X_2$ in a simple regression (where $X_2$ is the only predictor; let's call this effect $B_2$).
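To make the effect of centering concrete, here is a minimal sketch with NumPy on simulated data. The helper `fit_interaction`, the sample size, and the "true" coefficients are all hypothetical choices made for illustration. It fits the interaction model once with the raw $X_1$ and once with the centered $X_1$, then compares the centered $b_2$ with the average of $b_2+b_3X_1$ from the raw fit.

```python
import numpy as np

def fit_interaction(x1, x2, y):
    """Least-squares fit of y ~ 1 + x1 + x2 + x1*x2; returns (b0, b1, b2, b3)."""
    X = np.column_stack([np.ones_like(x1), x1, x2, x1 * x2])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

# Simulated data (assumed values, for illustration only).
rng = np.random.default_rng(1)
n = 300
x1 = rng.normal(3.0, 1.0, n)   # deliberately NOT centered
x2 = rng.normal(0.0, 1.0, n)
y = 1.0 + 0.5 * x1 + 2.0 * x2 + 1.5 * x1 * x2 + rng.normal(0.0, 1.0, n)

raw = fit_interaction(x1, x2, y)
centered = fit_interaction(x1 - x1.mean(), x2, y)

b2_raw, b3_raw = raw[2], raw[3]
b2_centered = centered[2]

# Average conditional slope of X2 over the observed X1, from the raw fit.
avg_slope = np.mean(b2_raw + b3_raw * x1)

print("b2 (raw X1):     ", b2_raw)
print("b2 (centered X1):", b2_centered)
print("mean of b2+b3*X1:", avg_slope)
```

Centering only reparametrizes the model, so $b_3$ is unchanged and the centered $b_2$ equals the raw $b_2+b_3\overline{X_1}$ up to floating-point error.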

In practice, $b_2$ and $B_2$ seem to be reasonably close to each other in the examples I have looked at.

Questions:

Are there any "common knowledge" examples of situations where $B_2$ and $b_2$ are remarkably far from each other?

Are there any known upper bounds to $|b_2-B_2|$?

Edit (came after @Robert Long's answer):

For the record, here is a very rough calculation of what the difference $|b_2-B_2|$ might look like.

$B_2$ can be computed via the usual covariance formula $B_2=\operatorname{Cov}(Y,X_2)/\operatorname{Var}(X_2)$, giving $$B_2=b_2+b_3\dfrac{\operatorname{Cov}(X_1X_2,X_2)}{\operatorname{Var}(X_2)}$$ This neglects the extra term $b_1\operatorname{Cov}(X_1,X_2)/\operatorname{Var}(X_2)$, which vanishes when $X_1$ and $X_2$ are uncorrelated. The last fraction is roughly distributed like the ratio of two normal variables, $\mathcal N(\mu,\frac{3+2\mu^2}{\sqrt N})$ and $\mathcal N(0,\frac{2}{\sqrt N})$ (not independent, unfortunately), assuming that $X_1\sim \mathcal N(0,1)$ and $X_2\sim \mathcal N(\mu,1)$. I've asked a separate question to try to circumvent my limited calculation skills.
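The covariance decomposition above can be checked numerically. The following is only a sketch on simulated data: the sample size, $\mu$, the noise level, and the coefficients are assumed values, and `cov` is a small hypothetical helper. It compares the simple-regression slope $B_2$ with $b_2+b_3\operatorname{Cov}(X_1X_2,X_2)/\operatorname{Var}(X_2)$, and also with the version that keeps the $b_1\operatorname{Cov}(X_1,X_2)/\operatorname{Var}(X_2)$ term, which is not exactly zero in a finite sample.

```python
import numpy as np

def cov(a, b):
    """Sample covariance (1/N normalization; the ratio below does not depend on it)."""
    return np.mean((a - a.mean()) * (b - b.mean()))

# Simulated data (assumed values, for illustration only).
rng = np.random.default_rng(0)
N, mu = 500, 2.0
x1 = rng.normal(0.0, 1.0, N)
x1 -= x1.mean()                      # center X1
x2 = rng.normal(mu, 1.0, N)
y = 1.0 + 0.5 * x1 + 2.0 * x2 + 1.5 * x1 * x2 + rng.normal(0.0, 1.0, N)

# Full model: y ~ 1 + x1 + x2 + x1*x2
X_full = np.column_stack([np.ones(N), x1, x2, x1 * x2])
b0, b1, b2, b3 = np.linalg.lstsq(X_full, y, rcond=None)[0]

# Simple regression: y ~ 1 + x2
X_simple = np.column_stack([np.ones(N), x2])
B2 = np.linalg.lstsq(X_simple, y, rcond=None)[0][1]

ratio_inter = cov(x1 * x2, x2) / cov(x2, x2)
ratio_x1 = cov(x1, x2) / cov(x2, x2)

B2_approx = b2 + b3 * ratio_inter                  # formula without the b1 term
B2_exact = b2 + b1 * ratio_x1 + b3 * ratio_inter   # formula with the b1 term

print("B2 (simple regression):", B2)
print("b2 + b3*ratio:         ", B2_approx)
print("with the b1 term:      ", B2_exact)
print("|b2 - B2|:             ", abs(b2 - B2))
```

With sample covariances and fitted coefficients, the version including the $b_1$ term matches $B_2$ up to floating-point error, because the least-squares residuals of the full model are orthogonal to both the intercept and $X_2$. The gap between the two formulas is exactly $b_1\operatorname{Cov}(X_1,X_2)/\operatorname{Var}(X_2)$.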