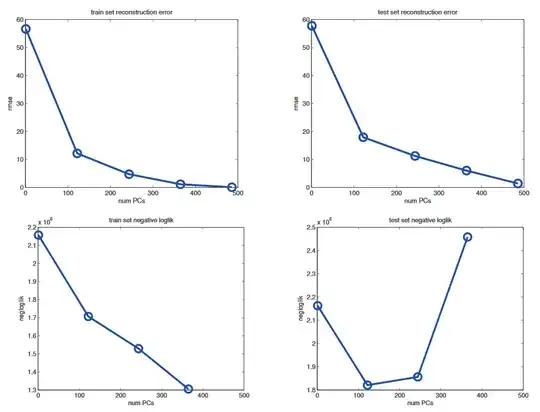

I am working through the book "Machine Learning: A Probabilistic Perspective". After introducing PCA and Probabilistic PCA, the following graphic is shown (the upper two graphics correspondend to PCA and the lower two to PPCA, rmse = root mean squared error, all plots visualize the reconstruction error):

The arising question is:

Why has PCA not the typical Bias-Variance-Trade off U-Shape, but PPCA does?

The explanation in the book is the following:

The problem is that PCA is not a proper generative model of the data. It is merely a compression technique. If you give it more latent dimensions, it will be able to approximate the test data more accurately. By contrast, a probabilistic model enjoys a Bayesian Occam’s razor effect (Section 5.3.1), in that it gets “punished” if it wastes probability mass on parts of the space where there is little data. (i.e. one should pick the simplest model that adequately explains the data.)

Summing up and my question:

I think why PCA does not have a U-Shape is clear. The latent variables are the number of eigenvectors we consider. The more we take, the better we approximate the data. So no magic is done.

However, I don't manage to fully understand the behavior of PPCA. I thought that PPCA almost equals PCA if the noise $\sigma$ of the data vanishes. So I don't understand why there is then such a different behavior?

Thanks in advance if someone could explain this in detail! :)