I am trying to run a normality test on several continuous variables before doing an ANOVA. The p-values I am getting do not make much sense, and I want to make sure I am not missing something.

- My data consists of 40k rows, I cannot use

scipy.stats.shapiroso I am usingkstest - When doing a

shapirotest I believe theWvalue has to be close to 1. Does the same apply toD statisticvalue? - most p-values are 0.0 which makes me think I am missing something.

- What values from the kstest will render the anova results valid?

- Should I be using

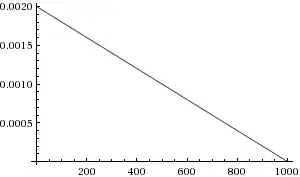

Anderson-Darling testgiven that the data is not normally distributed? if so would it still count as a normality test? - I tried converting some columns from lognorm to norm by doing

df['income'] = df['income'].apply(lambda x: math.log10(x))that seem to result inp-valuesthat approach zero. but I am not sure if that's the right method. if it is, should the anova analyze thelog(income)as well or it does not matter?
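On the log-transform and Anderson-Darling questions: a minimal sketch, assuming only NumPy and SciPy, of a vectorized `log10` transform followed by `scipy.stats.anderson`. The `income` array is a hypothetical lognormal stand-in for the real column, which is not shown. With pandas, `np.log10(df['income'])` does the same job as the `apply` call without a Python-level loop; like `math.log10`, it only makes sense for strictly positive values (zeros give `-inf` and negatives give `nan`, with a warning, instead of raising an exception).

```python
import numpy as np
from scipy.stats import anderson

rng = np.random.default_rng(42)

# Hypothetical stand-in for the 'income' column: lognormal, strictly positive
income = rng.lognormal(mean=10.0, sigma=0.8, size=40_000)

# Vectorized equivalent of .apply(lambda x: math.log10(x)); requires income > 0
log_income = np.log10(income)

for name, values in [("income", income), ("log10(income)", log_income)]:
    res = anderson(values, dist="norm")
    # Normality is rejected at a given level (in percent) when the
    # statistic exceeds the critical value listed for that level
    rejected = [
        float(level)
        for level, crit in zip(res.significance_level, res.critical_values)
        if res.statistic > crit
    ]
    print(f"{name}: A2={res.statistic:.3f}, rejected at levels (%): {rejected}")
```

By default `anderson` reports critical values at fixed significance levels (15, 10, 5, 2.5 and 1 percent for `dist='norm'`) rather than a p-value, so the comparison against `critical_values` is how it is read.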

Here is the code I used to run the test:

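```python
from scipy.stats import norm, kstest

# df (a pandas DataFrame) and numerical_features (a list of column names)
# come from earlier in the script, not shown here
for var in numerical_features:
    values = df[var].to_numpy()
    # Fit a normal distribution to the column, then compare the column to it
    loc, scale = norm.fit(values)
    n = norm(loc=loc, scale=scale)
    d, p = kstest(values, cdf=n.cdf)
    print("{0} {1} {2}".format(var, d, p))
```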
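On the `D` question: `D` runs in the opposite direction from `W`. It is the largest vertical gap between the empirical CDF of the sample and the reference CDF, so 0 means a perfect match and larger values mean a worse fit. A minimal sketch that computes it by hand and checks it against `kstest`; the sample is synthetic and `ks_d_statistic` is a hypothetical helper, not a SciPy function. One caveat: because `loc` and `scale` are estimated from the same data being tested, the standard KS p-value is only approximate (it is conservative); the Lilliefors test is the usual correction for this.

```python
import numpy as np
from scipy.stats import norm, kstest


def ks_d_statistic(x, cdf):
    """Two-sided KS statistic: the largest vertical gap between the
    empirical CDF of x and the reference CDF (hypothetical helper)."""
    x = np.sort(np.asarray(x, dtype=float))
    n = x.size
    f = cdf(x)
    # The ECDF steps from (i - 1)/n up to i/n at the i-th sorted value,
    # so check the gap just after and just before each step.
    d_plus = np.max(np.arange(1, n + 1) / n - f)
    d_minus = np.max(f - np.arange(0, n) / n)
    return max(d_plus, d_minus)


rng = np.random.default_rng(0)
x = rng.normal(loc=50.0, scale=10.0, size=5_000)  # synthetic, truly normal

loc, scale = norm.fit(x)
fitted = norm(loc=loc, scale=scale)

d_manual = ks_d_statistic(x, fitted.cdf)
d_scipy, p = kstest(x, fitted.cdf)
print(f"manual D={d_manual:.5f}  kstest D={d_scipy:.5f}  p={p:.3f}")
```

For data that really is normal, `D` comes out small (close to 0), not close to 1.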

Here is the output for each variable:

- age: D=0.054 p=9.488e-84
- income: D=0.142 p=0.0
- vehicles owned: D=0.409 p=0.0
- years of experience: D=0.175 p=0.0
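On why most p-values are 0.0: for a fixed `D`, the KS p-value shrinks very quickly as the sample size grows, so with tens of thousands of rows even a small departure from normality is highly significant, and a printed `0.0` is most likely a value too small to represent as a double. A minimal sketch using `scipy.stats.kstwo`, the null distribution of the two-sided one-sample KS statistic, applied to the `D=0.054` reported for `age`; it treats the reference CDF as fully specified, so it ignores the fact that `loc` and `scale` were fitted.

```python
from scipy.stats import kstwo

d = 0.054  # D statistic reported for "age" above

p_values = {}
for n in (100, 1_000, 10_000, 40_000):
    # sf(d, n) = probability of a D at least this large under H0, sample size n
    p_values[n] = kstwo.sf(d, n)
    print(f"n={n:>6}: p={p_values[n]:.3e}")
```

The same `D` that is unremarkable at n=100 becomes overwhelming evidence against normality at n=40,000.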