There seems to be some confusion about what the delta method really says.

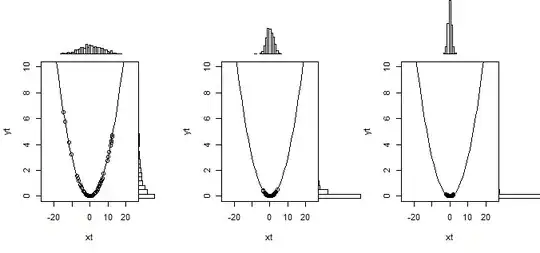

This statement is about the asymptotic distribution of a function of an estimator that is itself asymptotically normal. In your examples, the functions are defined on $X$, which, as you note, could follow any distribution! The delta method does not need $X$ to be normal. It needs the estimator to be asymptotically normal, and for the sample mean that is guaranteed by the CLT for any $X$ that satisfies the CLT's assumptions (e.g. iid with finite variance). So one example could be $f(X_n) = X_n^2 = \bigg(\frac{1}{n}\sum_i X_i\bigg)^2$. The delta method says that if $\sqrt{n}(X_n - \theta) \xrightarrow{d} N(0,\sigma^2)$, then $\sqrt{n}\big(f(X_n) - f(\theta)\big) \xrightarrow{d} N\big(0,\sigma^2 f'(\theta)^2\big)$. In other words, $f(X_n)$ is also asymptotically normal, centered at $f(\theta)$.
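As a quick illustration that $X$ itself need not be normal, here is a minimal Monte Carlo sketch using only the Python standard library. It assumes, purely for illustration, that $X_i \sim \text{Exponential}(1)$ (so $\theta = 1$ and $\sigma^2 = 1$) and takes $f(x) = x^2$. The delta method then predicts $\sqrt{n}\big(X_n^2 - 1\big) \approx N\big(0, 1\cdot(2\cdot 1)^2\big) = N(0,4)$ for large $n$. The function name `simulate_delta` and the values of `n`, `reps`, and `seed` are arbitrary choices.

```python
import math
import random
import statistics


def simulate_delta(n, reps, seed=0):
    """Return `reps` draws of sqrt(n) * (f(X_n) - f(theta)) with f(x) = x**2,
    where X_n is the mean of n iid Exponential(1) draws (theta = 1, sigma^2 = 1)."""
    rng = random.Random(seed)
    theta = 1.0
    draws = []
    for _ in range(reps):
        x_bar = sum(rng.expovariate(1.0) for _ in range(n)) / n
        draws.append(math.sqrt(n) * (x_bar**2 - theta**2))
    return draws


vals = simulate_delta(n=400, reps=2000)
# Delta method prediction: mean ~ 0 and variance ~ sigma^2 * f'(theta)^2 = 4
print(f"mean = {statistics.mean(vals):.3f}, variance = {statistics.variance(vals):.3f}")
```

The exponential distribution is clearly skewed, yet the simulated variance should land close to 4 once $n$ is large.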

To answer your scenario where $g(X_n) = X_n^2$ explicitly: the point is that $g(X_n)$ is not chi-square. Suppose we draw $X_i$ iid from some distribution with mean $\mu$ and $Var(X_i) = 1$. Consider the sequence $\{g(X_n)\}_n$, where $g(X_n) = X_n^2 = \bigg(\frac{1}{n}\sum_i X_i\bigg)^2$. By the CLT, $\sqrt{n}(X_n - \mu) \xrightarrow{d} N(0,1)$ (in your post, you get this distribution directly, without needing to appeal to the CLT). But $X_n^2$ is not chi-square, because $X_n$ is not standard normal. Instead, $\sqrt{n}(X_n - \mu)$ is (asymptotically) standard normal, either by your distributional assumption on $X_n$ or by the CLT, and so by the continuous mapping theorem we have that

$$\big(\sqrt{n}(X_n - \mu)\big)^2 \xrightarrow{d} \chi^2_1$$
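A short simulation sketch of this limit, assuming for illustration only that $X_i = \mu + U_i$ with $U_i$ uniform on $[-\sqrt{3}, \sqrt{3}]$ (so $Var(X_i) = 1$) and an arbitrary $\mu = 2$. The squared standardized mean should behave like a $\chi^2_1$ variable, which has mean 1, variance 2, and 95th percentile of about 3.841.

```python
import math
import random
import statistics

rng = random.Random(1)
mu, n, reps = 2.0, 100, 3000
half_width = math.sqrt(3.0)  # Uniform(-sqrt(3), sqrt(3)) has variance 1

squared_z = []
for _ in range(reps):
    x_bar = sum(mu + rng.uniform(-half_width, half_width) for _ in range(n)) / n
    squared_z.append((math.sqrt(n) * (x_bar - mu)) ** 2)

# chi-square(1) reference values: mean 1, variance 2, P(chi2_1 <= 3.841) ~ 0.95
share_below = sum(v <= 3.841 for v in squared_z) / reps
print(statistics.mean(squared_z), statistics.variance(squared_z), share_below)
```

Note that it is the centered and rescaled mean that is squared here, not $X_n$ itself.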

But you're not interested in the distribution of that quantity; you're interested in the distribution of $X_n^2$. So, for the sake of exploring, let's look at the distribution of $X_n^2$ directly. If $W\sim N(\mu,\sigma^2)$, then $\frac{W^2}{\sigma^2}$ follows a noncentral chi-square distribution with one degree of freedom and noncentrality parameter $\lambda = (\frac{\mu}{\sigma})^2$ (so $W^2$ itself is a scaled noncentral chi-square). In your case, $\sigma^2 = 1/n$ (exactly under your assumption, approximately by the CLT), so $nX_n^2$ follows a noncentral chi-square distribution with $\lambda = \mu^2n$, and $\lambda \to \infty$ as $n\to\infty$ (provided $\mu \neq 0$). I won't go through the proof, but if you check the Wikipedia page I linked on the noncentral chi-square distribution, under Related Distributions, you'll see that if $Z$ is noncentral chi-square with $k$ degrees of freedom and noncentrality parameter $\lambda$, then as $\lambda \to \infty$ we have that

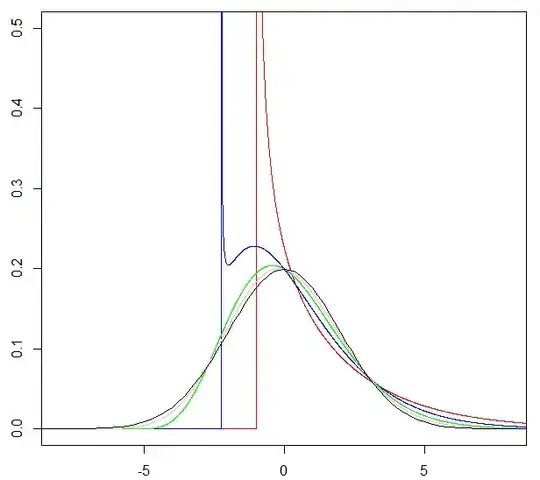

$$\frac{Z - (k+\lambda)}{\sqrt{2(k+2\lambda)}} \xrightarrow{d} N(0,1) $$
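The centering $k+\lambda$ and scale $\sqrt{2(k+2\lambda)}$ in this limit are exactly the mean and standard deviation of a noncentral chi-square variable. This sketch checks that numerically, assuming $X_n \sim N(\mu, 1/n)$ exactly (the normal case from your post) with arbitrary illustrative values $\mu = 0.5$ and $n = 50$, so $\lambda = \mu^2 n = 12.5$, and the theoretical mean and variance of $nX_n^2$ are $13.5$ and $52$.

```python
import math
import random
import statistics

rng = random.Random(2)
mu, n, reps = 0.5, 50, 20000
k, lam = 1, mu**2 * n  # degrees of freedom and noncentrality parameter

# n * X_n^2 with X_n ~ N(mu, 1/n), i.e. standard deviation 1/sqrt(n)
samples = [n * rng.gauss(mu, 1.0 / math.sqrt(n)) ** 2 for _ in range(reps)]

print("simulated:", statistics.mean(samples), statistics.variance(samples))
print("theory:   ", k + lam, 2 * (k + 2 * lam))
```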

In our case, $Z = nX_n^2$, $\lambda = \mu^2n$, and $k = 1$, so as $n \to \infty$ we have that

$$\frac{nX_n^2 - (1+\mu^2n)}{\sqrt{2(1+2\mu^2n)}} = \frac{n(X_n^2 - \mu^2) - 1}{\sqrt{2+4\mu^2n}} \xrightarrow{d} N(0,1)$$

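I won't be fully formal, but for $\mu \neq 0$ and $n$ growing arbitrarily large, the $-1$ in the numerator and the $2$ under the square root become negligible (formally, this is a Slutsky argument), so

$$\frac{n(X_n^2 - \mu^2) - 1}{\sqrt{2+4\mu^2n}} \approx \frac{n(X_n^2 - \mu^2)}{2|\mu|\sqrt{n}} = \frac{1}{2|\mu|}\sqrt{n}(X_n^2 - \mu^2)\xrightarrow{d} N(0,1) $$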

and rescaling by a constant (a constant multiple of a normal is still normal), we have that

$$\sqrt{n}(X_n^2 - \mu^2)\xrightarrow{d} N(0,4\mu^2) $$
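To see how quickly the dropped terms stop mattering, this deterministic sketch fixes a hypothetical value $t = \sqrt{n}(X_n^2 - \mu^2)$ and an arbitrary $\mu = 1.5$, then compares the exact standardization $\frac{n(X_n^2-\mu^2)-1}{\sqrt{2+4\mu^2 n}}$ with its large-$n$ approximation $\frac{t}{2|\mu|}$ (which requires $\mu \neq 0$). The gap shrinks roughly like $1/\sqrt{n}$.

```python
import math


def exact_standardization(t, mu, n):
    # (n(X_n^2 - mu^2) - 1) / sqrt(2 + 4 mu^2 n), using n(X_n^2 - mu^2) = sqrt(n) * t
    return (math.sqrt(n) * t - 1.0) / math.sqrt(2.0 + 4.0 * mu**2 * n)


def approx_standardization(t, mu):
    # Large-n approximation t / (2|mu|); only valid for mu != 0
    return t / (2.0 * abs(mu))


mu, t = 1.5, 0.8  # hypothetical values for illustration
sizes = [10, 100, 10_000, 1_000_000]
gaps = [exact_standardization(t, mu, n) - approx_standardization(t, mu) for n in sizes]
for n, gap in zip(sizes, gaps):
    print(f"n = {n:>9}: gap = {gap:+.6f}")
```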

Seems pretty nice! And what does the delta method tell us again? With $g(\theta) = \theta^2$, so that $g'(\theta) = 2\theta$, evaluated at $\theta = \mu$ with $\sigma^2 = 1$, the delta method gives

$$\sqrt{n}(X_n^2 - \mu^2)\xrightarrow{d} N\big(0,\sigma^2 g'(\mu)^2\big) = N\big(0,(2\mu)^2\big) = N(0,4\mu^2)$$
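A small sketch of the delta-method recipe itself: `delta_variance` (a hypothetical helper, not from any library) approximates $g'(\theta)$ with a central finite difference and returns $\sigma^2 g'(\theta)^2$. It is then compared with a simulation in which the $X_i$ are normal with variance 1, so $X_n \sim N(\mu, 1/n)$ exactly. The values $\mu = 2$ and $n = 100$ are arbitrary, giving a predicted variance of $4\mu^2 = 16$.

```python
import math
import random
import statistics


def delta_variance(g, theta, sigma2, h=1e-5):
    """Asymptotic variance sigma^2 * g'(theta)^2, with g'(theta) from a central difference."""
    g_prime = (g(theta + h) - g(theta - h)) / (2.0 * h)
    return sigma2 * g_prime**2


mu, n, reps = 2.0, 100, 20000
predicted = delta_variance(lambda x: x**2, theta=mu, sigma2=1.0)  # ~ 4 * mu^2 = 16

rng = random.Random(3)
# sqrt(n) * (X_n^2 - mu^2) with X_n ~ N(mu, 1/n)
draws = [math.sqrt(n) * (rng.gauss(mu, 1.0 / math.sqrt(n)) ** 2 - mu**2) for _ in range(reps)]
print(f"predicted = {predicted:.4f}, simulated = {statistics.variance(draws):.4f}")
```

The finite difference is only there to keep the helper generic; for $g(\theta) = \theta^2$ you would of course just plug in $g'(\mu) = 2\mu$.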

Sweet! But all those steps were a pain. Luckily, the univariate proof of the delta method approximates all of this with a first-order Taylor expansion, as on the Wikipedia page for the delta method, and it's just a few steps from there. From that proof, you can see that all you really need is for the estimator of $\theta$ to be asymptotically normal and for $f$ to be differentiable at $\theta$ with $f'(\theta) \neq 0$ (if $f'(\theta) = 0$, the first-order limit is degenerate, a point mass at zero). In that case, you can try higher-order Taylor expansions, so you may still be able to recover a nondegenerate asymptotic distribution.
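To illustrate the zero-derivative case, take $\mu = 0$ with $g(\theta) = \theta^2$, so $g'(0) = 0$. The first-order limit of $\sqrt{n}\,X_n^2$ is degenerate, but a second-order expansion (often called the second-order delta method) gives $n\big(g(X_n) - g(0)\big) = nX_n^2 \xrightarrow{d} \sigma^2\frac{g''(0)}{2}\chi^2_1 = \chi^2_1$ when $\sigma^2 = 1$. The sketch assumes, for illustration only, $X_i$ uniform on $[-\sqrt{3}, \sqrt{3}]$ (mean 0, variance 1).

```python
import math
import random
import statistics

rng = random.Random(4)
n, reps = 100, 3000
half_width = math.sqrt(3.0)  # Uniform(-sqrt(3), sqrt(3)): mean 0, variance 1

root_n_scaled, n_scaled = [], []
for _ in range(reps):
    x_bar = sum(rng.uniform(-half_width, half_width) for _ in range(n)) / n
    root_n_scaled.append(math.sqrt(n) * x_bar**2)  # first-order scaling: collapses to 0
    n_scaled.append(n * x_bar**2)  # second-order scaling: approximately chi-square(1)

print("var of sqrt(n) * X_n^2:", statistics.variance(root_n_scaled))
print("mean, var of n * X_n^2:", statistics.mean(n_scaled), statistics.variance(n_scaled))
```

With the $\sqrt{n}$ scaling the spread shrinks toward zero, while the $n$ scaling settles near the $\chi^2_1$ mean of 1 and variance of 2.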