If I have 20 variables, should I do a pairwise correlation check before building a classification model with logistic regression, a decision tree or a random forest? Multicollinearity is a problem in regression. Is it a problem in classification as well? Besides classifying, I also need to understand the influence of the variables. The post "Should one be concerned about multi-collinearity when using non-linear models?" has conflicting answers.


What is the goal of doing the modeling? – Dave Jul 05 '20 at 16:06


To spoil what @Dave is getting at: the main problem with collinearity is in interpreting the learned coefficients after building the model. Adding a collinear variable won't really affect the accuracy of the model, but using the learned coefficients to determine how much influence each variable has on the dependent variable becomes unreliable (and impossible under perfect collinearity). – Him Jul 05 '20 at 16:11


Does this answer your question? [Won't highly-correlated variables in random forest distort accuracy and feature-selection?](https://stats.stackexchange.com/questions/141619/wont-highly-correlated-variables-in-random-forest-distort-accuracy-and-feature) See also this search for the random-forest component: https://stats.stackexchange.com/search?tab=votes&q=collinear%20%5brandom-forest%5d and this one for the logistic component: https://stats.stackexchange.com/search?tab=votes&q=collinear%20logistic – Sycorax Jul 05 '20 at 16:45


@Dave: The goal is classification, but also understanding the importance of the variables in the classification. – learner Jul 05 '20 at 16:50


@Scott: Yes, it is important to understand the influence of the variables. – learner Jul 05 '20 at 16:51


We also have a nice description of best practices for searching here: https://stats.meta.stackexchange.com/questions/5549/best-practices-for-searching-cv – Sycorax Jul 05 '20 at 17:04

2 Answers

I'm going to assume that by multicollinearity you mean a perfect linear relationship between predictors, and that the goal of the modelling task is prediction (not causal inference), because that's my first guess based on the wording of your question.

In that case, multicollinearity may not be a problem for decision trees or random forests, even though it is a problem in regression. The reason is that decision trees and random forests choose a single variable at each split. Even if several variables are equally good (e.g. they give the same information gain), the algorithm can simply pick one of them, randomly or by some fixed rule, and the resulting split of the data is the same whichever one it picks.

However, if the goal is causal inference (or estimating the strength of the relationship between individual predictors and the dependent variable), then multicollinearity could be a problem. Because variables could be perfect linear combinations of each other, the fact that one variable was chosen for a tree split does not mean that the other variables were weaker predictors. It could just be that the variable was picked among several variables with equal predictive power.
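Both points can be illustrated with a single tree. The sketch below is my own illustration, not part of the answer: it uses the `rpart` package on a made-up dataset in which `x2` is an exact linear function of `x1` and `x3` is noise (all names and constants are arbitrary), and compares a tree fitted with and without the redundant copy `x2`.

```r
library(rpart)  # classification trees; included with standard R installations

set.seed(1)
n  <- 500
x1 <- rnorm(n)
x2 <- 2 * x1 + 3                 # exact linear function of x1: perfect collinearity
x3 <- rnorm(n)                   # unrelated noise predictor
y  <- factor(rbinom(n, 1, plogis(1.5 * x1)))
d  <- data.frame(y, x1, x2, x3)

# Same data, with and without the redundant copy x2
fit_without <- rpart(y ~ x1 + x3, data = d, method = "class")
fit_with    <- rpart(y ~ x1 + x2 + x3, data = d, method = "class")

# Class-probability predictions on the training data
p_without <- predict(fit_without, d, type = "prob")
p_with    <- predict(fit_with, d, type = "prob")

all.equal(p_without, p_with)     # the partition, and hence the predictions, should match
fit_with$variable.importance     # but x2 now receives importance credit too
```

Since any split on `x2` induces exactly the same partition as a split on `x1`, the predictions should not change. `rpart`'s `variable.importance` also credits surrogate splits, so `x2` shows up as important even though it adds nothing beyond `x1`, which is the interpretation problem described above.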


@wwl Although I am trying to classify based on 20 variables, it is equally important for me to know how strongly each of these variables influences the outcome, in order to reap the benefits of the classification itself. In this case, should I be concerned about collinearity? Also, what degree of collinearity is concerning? – learner Jul 05 '20 at 17:12


Your comment seems to indicate you're concerned about imperfect multicollinearity. Yes, I would be concerned about the degree of collinearity in that case. There is no hard and fast rule, especially for nonlinear models like random forests, but for linear models I have heard a Variance Inflation Factor (VIF) above 5 cited as a rule of thumb for deciding whether imperfect multicollinearity is a problem. – wwl Jul 05 '20 at 18:56
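The VIF can be computed by hand: regress each predictor $j$ on all the others and take $\mathrm{VIF}_j = 1/(1 - R_j^2)$. Unlike a pairwise correlation check, this also catches a predictor that is a near-linear combination of several others. Below is a minimal sketch on a made-up data frame with one strongly correlated pair; the helper `vif_manual` is hypothetical (the `car` package's `vif()` offers a ready-made version for fitted models), and the threshold of 5 is only the rule of thumb quoted above.

```r
# Hypothetical helper: VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from
# regressing predictor j on all the other predictors.
vif_manual <- function(X) {
  X <- as.data.frame(X)
  sapply(names(X), function(v) {
    f  <- reformulate(setdiff(names(X), v), response = v)
    r2 <- summary(lm(f, data = X))$r.squared
    1 / (1 - r2)
  })
}

set.seed(42)
n  <- 1000
x1 <- rnorm(n)
x2 <- 0.95 * x1 + sqrt(1 - 0.95^2) * rnorm(n)  # correlation about 0.95 with x1
x3 <- rnorm(n)                                   # independent of both
X  <- data.frame(x1, x2, x3)

round(cor(X), 2)     # the pairwise correlation check
vifs <- vif_manual(X)
vifs                 # x1 and x2 should be near 1 / (1 - 0.95^2), about 10
vifs > 5             # flag predictors above the rule-of-thumb threshold
```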

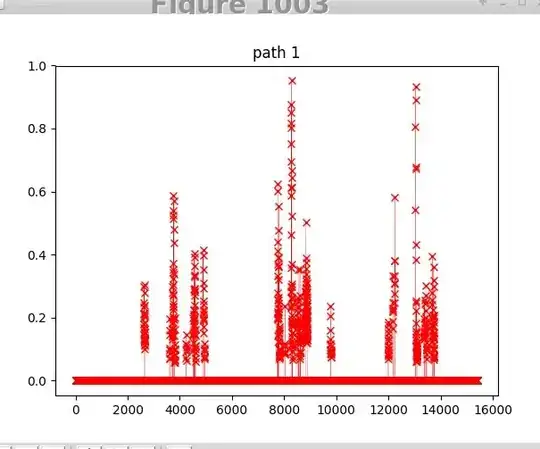

In logistic regression, collinearity inflates the uncertainty in the estimated coefficients. Here is an example: I'll simulate 1000 datasets from the same process, fit a model to each, and then plot the estimates when the features are independent and when they have a correlation of 0.95.

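```r
# Simulate one dataset of n = 100 observations, fit a logistic regression,
# and return the estimated coefficients (intercept, x1 slope, x2 slope).
sim <- function(corr = FALSE) {
  if (!corr) {
    s <- diag(2)                                               # independent features
  } else {
    s <- matrix(c(1, 0.95, 0.95, 1), byrow = TRUE, nrow = 2)   # correlation 0.95
  }
  X <- MASS::mvrnorm(n = 100, mu = rep(0, 2), Sigma = s)
  X <- cbind(rep(1, 100), X)                                   # intercept column
  p <- plogis(X %*% c(-0.8, 0.1, 0))                           # true coefficients
  y <- rbinom(100, 1, p)
  model <- glm(y ~ X[, 2] + X[, 3], family = "binomial")
  coef(model)
}

nocor   <- t(replicate(1000, sim(FALSE)))
withcor <- t(replicate(1000, sim(TRUE)))  # named withcor to avoid masking stats::cor

# Plot the two slope estimates against each other, one panel per scenario
par(mfrow = c(1, 2))
plot(nocor[, 2], nocor[, 3], main = "Independent features",
     xlab = "x1 estimate", ylab = "x2 estimate")
plot(withcor[, 2], withcor[, 3], main = "Correlation 0.95",
     xlab = "x1 estimate", ylab = "x2 estimate")
```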

Note the scales of the subplots: with correlation, the estimates are much more spread out and more extreme. Note also that the estimates are negatively correlated; when one is large, the other tends to be small. That is because the two features compete with one another to explain the same part of the outcome.
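The size of this effect can be checked against theory. For two features with correlation $\rho$, the variance of each slope estimate is inflated by roughly $1/(1-\rho^2)$ (the VIF, about 10 for $\rho = 0.95$), and the two slope estimates have correlation close to $-\rho$; for logistic regression this is an approximation that works well when the slopes are small, as here. The sketch below reruns a smaller version of the simulation (500 replicates; the helper `slope_estimates` is hypothetical) to compare the empirical numbers with those approximations.

```r
# Hypothetical helper: the two slope estimates from one logistic fit with
# n observations and feature correlation rho (same true model as above).
slope_estimates <- function(rho, n = 100) {
  s <- matrix(c(1, rho, rho, 1), nrow = 2)
  X <- MASS::mvrnorm(n = n, mu = c(0, 0), Sigma = s)
  y <- rbinom(n, 1, plogis(-0.8 + 0.1 * X[, 1]))
  coef(glm(y ~ X[, 1] + X[, 2], family = binomial))[2:3]
}

set.seed(2020)
rho  <- 0.95
est0 <- t(replicate(500, slope_estimates(0)))    # independent features
est1 <- t(replicate(500, slope_estimates(rho)))  # correlated features

var_ratio  <- var(est1[, 1]) / var(est0[, 1])    # empirical variance inflation
vif_theory <- 1 / (1 - rho^2)                    # approximate inflation from the VIF
est_cor    <- cor(est1[, 1], est1[, 2])          # should be close to -rho

c(empirical = var_ratio, vif = vif_theory, estimate_cor = est_cor)
```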

For decision trees, correlation between features is likely to have a very large impact on predictions, but only because the estimator has very high variance: small changes in the data can lead to large changes in the fitted tree and its predictions. Random forests are an attempt to correct for this, and correlated features are less of a problem for them. Because random forests a) fit each tree on a bootstrap sample of the data and b) randomly select a subset of predictors as candidates for each split, the trees in the forest are less correlated with one another, which reduces the variance of the averaged prediction. It would still be difficult to determine feature importance, say, by looking at split frequency or at the loss reduction from splits on each feature. My intuition says that since the features are correlated, they would have similar feature importance, but this requires empirical validation.
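A small simulation can illustrate the instability of a single tree. The sketch below is my own illustration, not part of the answer: it assumes a made-up process in which `x1` and `x2` have correlation 0.9 and both drive the outcome while `x3` is noise, draws fresh datasets from that process, fits an `rpart` tree to each, and records which feature the root split uses. The helper `make_data`, the correlation and the sample sizes are arbitrary choices.

```r
library(rpart)  # classification trees; included with standard R installations

# Hypothetical data-generating process: x1 and x2 are correlated (rho = 0.9)
# and both drive the outcome; x3 is pure noise.
make_data <- function(n = 300, rho = 0.9) {
  x1 <- rnorm(n)
  x2 <- rho * x1 + sqrt(1 - rho^2) * rnorm(n)
  x3 <- rnorm(n)
  y  <- factor(rbinom(n, 1, plogis(x1 + x2)))
  data.frame(y, x1, x2, x3)
}

set.seed(7)
# Fit one tree per freshly drawn dataset and record the root split variable
root_var <- replicate(200, {
  fit <- rpart(y ~ x1 + x2 + x3, data = make_data(), method = "class")
  as.character(fit$frame$var[1])   # "<leaf>" if the tree made no split
})

table(root_var)
```

Because `x1` and `x2` carry nearly the same information, the root split should flip between them from one dataset to the next, so the structure of any single tree (and any importance read off it) is unstable, while split-based importance aggregated over many trees would tend to be shared between the two.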
