Interesting questions. I will address both of them for the use case of statistical classifiers, in order to confine the analysis to a model domain we can oversee.

Before embarking on an elaborate answer, I want to discuss the definition of robustness, as different definitions have been given for the concept. One can distinguish model robustness from outcome robustness. Model robustness means that the overall model outcome - and hence the distribution of its predictions - is less sensitive, or even insensitive, to an increasing amount of extreme values in the training set. Outcome robustness, on the other hand, refers to the (in)sensitivity of one specific predicted outcome to increasing noise levels in the input variables. I assume that your questions address model robustness.

To address the first question, we need to make a distinction between classifiers that use a global or local distance measure to model (probability of) class dependency, and distribution-free classifiers.

Discriminant analysis, the k-nearest neighbor classifier, neural networks and support vector machines all calculate some sort of distance between parameter vectors and the input vector provided. It should be added that nonlinear neural networks and SVMs use nonlinearity to globally bend and stretch the concept of distance (neural networks are universal approximators, as proved and published by Hornik in 1989).

'Distribution-free' classifiers

ID3/C4.5 decision trees, CART, the histogram classifier and the multinomial classifier do not apply any distance measure. They are nonparametric in their way of working. That said, they are based on count distributions - hence the binomial and the multinomial distribution - and are governed by the statistics of these distributions. However, as the only thing that matters is whether the observed value of an input variable falls in a specific bin/interval or not, they are by nature insensitive to extreme observations. This holds when the leftmost and rightmost bins of each input variable are open-ended. So these classifiers are certainly model robust.
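To make the bin argument concrete, here is a minimal sketch of the binning step only. The bin edges and the data are hypothetical values I chose for illustration, not taken from any particular classifier. Because the outermost bins are open-ended, an observation of `2.5` and one of `2.5e6` land in the same bin, so the count statistics that such a classifier is built on do not change.

```python
from bisect import bisect_right

# Hypothetical interior bin edges. The outermost bins are open-ended:
# (-inf, 0), [0, 1), [1, 2), [2, +inf)
EDGES = [0.0, 1.0, 2.0]

def bin_index(x, edges=EDGES):
    """Return the index of the bin that x falls into."""
    return bisect_right(edges, x)

def bin_counts(values, edges=EDGES):
    """Count how many values fall into each bin."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bin_index(v, edges)] += 1
    return counts

moderate = [0.2, 0.7, 1.5, 2.5]
extreme = [0.2, 0.7, 1.5, 2.5e6]  # the last value is now an extreme observation

# Both data sets produce identical bin counts.
print(bin_counts(moderate))
print(bin_counts(extreme))
```

With closed outer bins the extreme value would fall outside every bin, which is why the open-ended condition matters.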

Noise characteristics and outliers

Extreme values are one kind of noise. A scatter around a zero mean is the most common kind of noise that occurs in practice.

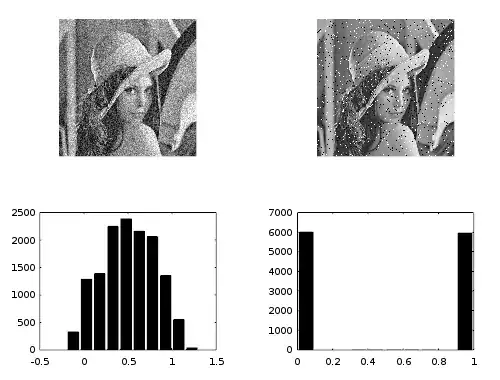

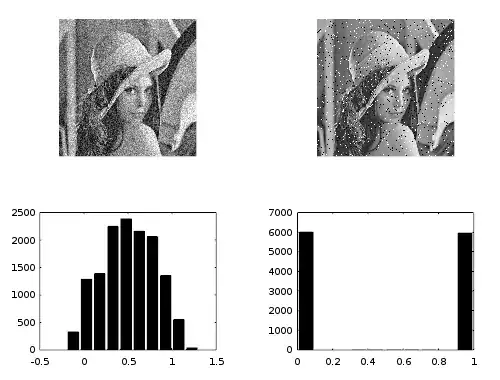

These images illustrate scatter noise (left) and salt-and-pepper noise (right). Your robustness questions relate to the latter kind of noise.

Analysis

We can combine the true value of classifier input $i$, $z(i)$, with scatter noise $\epsilon$ and an outlier offset $e$ as

$$
x(i) = z(i) + \epsilon + e \cdot \delta(\alpha)
$$

with $\delta(\alpha)$ the Kronecker delta function governed by the parameter $\alpha$, which takes the value $1$ when the outlier offset is added and $0$ otherwise. For outliers, $P(\delta(\alpha)=1) \ll 1$, whereas the zero-mean scatter is always present. If, for example, $P(\delta(\alpha)=1) = \frac{1}{2}$, we no longer speak of outliers - the offsets become common additive noise. Note also that distance is intrinsic to the definition of the concept of an outlier. It follows that the observed class labels in a training set cannot themselves be outliers, as they carry no notion of distance.
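The noise model can be simulated in a few lines. This is only a sketch under assumptions of my own: the scatter $\epsilon$ is drawn from a Gaussian (the text only requires zero mean), $\alpha$ is read directly as the probability $P(\delta(\alpha)=1)$, and the function name and all numeric values are hypothetical.

```python
import random

def noisy_observation(z, sigma, e, alpha, rng):
    """Return (x, delta) with x = z + epsilon + e * delta.

    epsilon is zero-mean Gaussian scatter (an assumption) and delta is 1
    with probability alpha, 0 otherwise.
    """
    epsilon = rng.gauss(0.0, sigma)            # scatter, always present
    delta = 1 if rng.random() < alpha else 0   # outlier switch, rarely on
    return z + epsilon + e * delta, delta

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [
    noisy_observation(z=1.0, sigma=0.1, e=50.0, alpha=0.02, rng=rng)
    for _ in range(10_000)
]

# Fraction of observations that received the outlier offset.
outlier_fraction = sum(delta for _, delta in samples) / len(samples)
print(outlier_fraction)
```

Raising `alpha` towards `0.5` turns the offsets into ordinary additive noise, as described above.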

Distance-based classifiers generally use the L2-norm $\lVert \mathbf{x} \rVert_2$ to calculate the degree of fit. This norm is well chosen for scatter noise. For extreme values (outliers), however, the influence on the fit grows with the square of the offset $e$, and of course with $P(\delta(\alpha)=1)$. As nonparametric classifiers use different criteria to select the optimal set of parameters, they are insensitive to extreme value noise such as salt-and-pepper noise.
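A small numeric sketch of the squared influence, using made-up data and a one-dimensional "class" whose center is fitted by least squares (so the fitted center is the mean). The helper names are hypothetical. Doubling the outlier offset roughly quadruples the squared cost, while the fitted center is dragged away in proportion to the offset.

```python
from statistics import mean

def squared_cost(values, center):
    """Sum of squared (L2) deviations of the values from center."""
    return sum((v - center) ** 2 for v in values)

def add_offset(values, e):
    """Copy of values with the outlier offset e added to the last one."""
    return values[:-1] + [values[-1] + e]

clean = [0.9, 1.0, 1.1, 1.0, 0.95, 1.05]  # scatter around the true value
z = 1.0                                   # assumed true value

for e in (10.0, 20.0):
    data = add_offset(clean, e)
    # The least-squares center (the mean) moves by e / n, and the cost
    # measured at the true value z is dominated by a term of order e ** 2.
    print(e, mean(data) - mean(clean), squared_cost(data, z))
```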

Again, the type of classifier determines the robustness to outliers.

Overfitting

Overfitting occurs when classifiers become 'too rich' in parameters. In that situation, learning draws all kinds of small loops around wrongly labeled cases in the training set. Once the classifier is applied to a (new) test set, poor model performance is seen. Such overfitting loops tend to include points pushed just across class boundaries by scatter noise $\epsilon$. It is highly unlikely that an outlier value, which has no similar neighboring points, is included in such a loop. This is because of the locally rigid nature of (distance-based) classifiers, and because closely grouped points can push or pull a decision boundary, which one observation on its own cannot do.

Overfitting generally happens between classes because the decision boundaries of any given classifier become too flexible. Decision boundaries are generally drawn in more crowded parts of the input variable space - not in the vicinity of lonely outliers per se.

Having analyzed robustness for distance based and nonparametric classifiers, a relation can be made with the possibility of overfitting. Model robustness to extreme observations is expected to be better for nonparametric classifiers than for distance-based classifiers. There is a risk of overfitting because of extreme observations in distance-based classifiers, whereas that is hardly the case for (robust) nonparametric classifiers.

For distance-based classifiers, outliers will either pull or push the decision boundaries; see the discussion of noise characteristics above. Discriminant analysis, for example, is sensitive to non-normally distributed data, i.e. to data with extreme observations. Neural networks can simply end up in saturation, close to $0$ or $1$ (for sigmoid activation functions). Support vector machines with sigmoid kernels are likewise less sensitive to extreme values, but they still employ a (local) distance measure.
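The saturation effect is easy to check numerically. The sketch below evaluates a single logistic (sigmoid) unit, not a full network, at a moderate and at an absurdly extreme activation. The input values are arbitrary choices of mine.

```python
import math

def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    z = math.exp(t)
    return z / (1.0 + z)

# Beyond moderate activations the output barely moves any more:
# an input that is 100,000 times larger yields almost the same value.
for t in (2.0, 20.0, 2.0e6):
    print(t, sigmoid(t))
```

The unit's output is bounded, so an extreme input cannot produce an extreme output. It can still influence the weights during training through the distance-like term that feeds the unit.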

The most robust classifiers with respect to outliers are the nonparametric ones - decision trees, the histogram classifier and the multinomial classifier.

A final note on overfitting

Applying ID3 to build a decision tree will overfit if there is no stopping criterion. The deeper subtrees from ID3 will begin fitting noise in the training data - the fewer the observations in a subtree, the higher the chance of overfitting. Restricting the parameter space prevents overfitting.
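As an illustration of the stopping criterion, here is a toy one-dimensional tree of my own. It is not ID3: for brevity it picks splits by misclassification count rather than information gain, and `min_leaf` is a hypothetical name for the minimum number of observations per leaf. The training set has two deliberately flipped labels.

```python
def majority(labels):
    """Most frequent label (ties go to the smaller label)."""
    return max(sorted(set(labels)), key=labels.count)

def build(points, min_leaf):
    """Grow a tree on (x, label) pairs with at least min_leaf points per leaf."""
    labels = [y for _, y in points]
    if len(set(labels)) == 1:
        return labels[0]                      # pure leaf
    points = sorted(points)
    best = None
    for i in range(min_leaf, len(points) - min_leaf + 1):
        if points[i - 1][0] == points[i][0]:
            continue                          # cannot split equal x values
        left = [y for _, y in points[:i]]
        right = [y for _, y in points[i:]]
        errors = (len(left) - left.count(majority(left))
                  + len(right) - right.count(majority(right)))
        if best is None or errors < best[0]:
            best = (errors, i)
    if best is None:
        return majority(labels)               # stopping criterion reached
    i = best[1]
    threshold = (points[i - 1][0] + points[i][0]) / 2
    return (threshold, build(points[:i], min_leaf), build(points[i:], min_leaf))

def predict(tree, x):
    """Follow the thresholds down to a leaf label."""
    while isinstance(tree, tuple):
        threshold, left, right = tree
        tree = left if x < threshold else right
    return tree

def n_leaves(tree):
    if isinstance(tree, tuple):
        return n_leaves(tree[1]) + n_leaves(tree[2])
    return 1

def accuracy(tree, points):
    return sum(predict(tree, x) == y for x, y in points) / len(points)

# True rule: label 1 if x >= 10. Two training labels are flipped on purpose.
train = [(float(x), int(x >= 10)) for x in range(20)]
train[3] = (3.0, 1)
train[15] = (15.0, 0)
test = [(x + 0.25, int(x >= 10)) for x in range(20)]   # clean labels

deep = build(train, min_leaf=1)      # no effective stopping criterion
shallow = build(train, min_leaf=3)   # restricted parameter space

print(n_leaves(deep), accuracy(deep, train), accuracy(deep, test))
print(n_leaves(shallow), accuracy(shallow, train), accuracy(shallow, test))
```

The unrestricted tree reproduces the flipped labels and carries them over to nearby test points, while the restricted tree cannot isolate single observations.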

In distance-based classifiers, overfitting is likewise prevented by restricting the parameter space, e.g. through the number of hidden nodes/layers in a neural network or the regularization parameter $C$ in an SVM.

Answers to your questions

So the answer to your first question is generally no. Robustness to outliers is orthogonal to whether a type of classifier is prone to overfitting. The exception to this conclusion is an outlier that lies 'lightyears' away and completely dominates the distance function. In that really rare case, that single extreme observation will degrade robustness.

As to your second question.

Classifiers with well-restricted parameter spaces tend to generalize better from their training set to a test set. The fraction of extreme observations in the training set determines whether distance-based classifiers are led astray during training. For nonparametric classifiers, the fraction of extreme observations can be much larger before model performance begins to decay. Hence, nonparametric classifiers are much more robust to outliers.

Also for your second question, it is the underlying assumptions of a classifier that determine whether it is sensitive to outliers - not how strongly its parameter space is regularized. What remains is a power struggle between classifier flexibility and the pull of one lonely outlier 'lightyears away', which may come to dominate the distance function used during training. Hence, I argue for a general 'no' to your second question.