I'm working with data that is multi-modal, I need to be able to check if the individual samples are statistically distinct or not, so I'm running KS-test against pairs of samples.

But I've noticed that p-values below 0.05 were showing up less often than expected with samples that should be similar.

So I've ran a simulation with a simple bimodal distribution:

n <- 10000

nsamp <- 10000

ps <- replicate(nsamp, {

y1 <- c(rnorm(n/2), rnorm(n/2, 5, 2))

y2 <- c(rnorm(n/2), rnorm(n/2, 5, 2))

tt <- ks.test(y1, y2)

tt$p.value

})

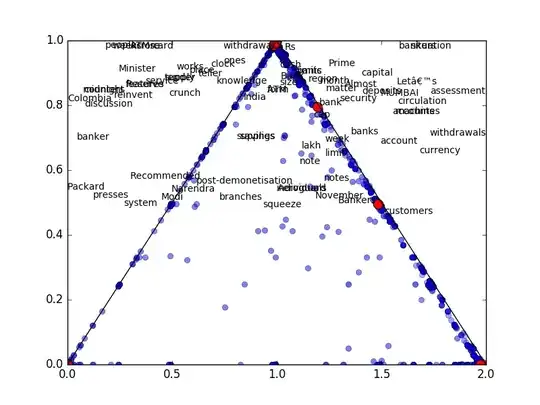

plot(ecdf(ps))

ks.test(ps, 'punif')

plot(ecdf(runif(100000)), add=T, col="red")

plot(ecdf(rbeta(100000, 2, 1)), add=T, col="blue")

To my surprise, the p-values are not uniformly distributed, rather they follow a distribution similar to beta distribution with parameters alpha=2 and beta=1.

Question 1 Do I interpret it correctly that KS-test is more sensitive to departures from expected values in multi-modal distributions than in unimodal distributions? i.e. normally distributed samples are worst case scenario for KS-test?

Question 2 Should I rather perform a test that the p-values are stochastically greater than uniformly distributed, not that they are uniformly distributed (i.e. something like ks.test(ps, 'punif', alternative='greater'))?

Edit 1: removed sample() from functions.

Edit 2:

While in the example above I'm using a simple concatenation to add the observations from two different distributions, I do have a reason to believe this is correct approach to model the real-world observations.

The data in question comes from few different experiments, the values in question are reaction times. Now, because the reaction time is in the order of 100µs while I'm interested in differences down to few ns, I need to collect a lot of observations. To reduce bias from running the experiments in exact same order (say ABC ABC ABC ABC, etc. with A, B and C being individual test classes) I'm randomising the order in which I run them, but I still run them in groups (e.g. ABC CBA BAC CAB, etc.).

Now, because I run hundreds of thousands of tests, it takes time.

If I have a noise that is active for a continuos period of time but only for part of the time it takes to run the test, then the actual collected data will look like a concatenation of two distributions, not a random selection from two distributions. So I think I'm correct to model it through c(rnorm(), rnorm()) rather than ifelse(binom(), rnorm(), rnormo()).