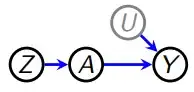

Someone suggested the following idea to me to control for reverse causality. Suppose we want to test for the effect of $X$ on $Y$ in a panel data set, but we suspect that there is reverse causality. That is, past values of $Y$ may cause variation in $X$, too.

The suggestion goes as follows: to remove potential reverse causality between the independent variable $X_t$ and the dependent variable $Y_t$, we could run a first-stage regression of the first lag of $x$ on the second lag of $y$,

$$x_{t-1}=\alpha + \beta y_{t-2} + e_{t-1}$$

and then use the residuals of that regression $e_{t-1}$ as the independent variable in our main model

$$y_t = \beta_0 + \beta_1 e_{t-1} + z_{t-1}$$

Here, $e_{t-1}$ would thus represent the part of $x_{t-1}$ that is not explained by preceding values of $y$. This method should therefore effectively remove the reverse causality in the model.
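To make the proposal concrete, here is a minimal sketch of the two steps in Python with NumPy. Everything in it is an assumption made for illustration: the simulated data-generating process, its coefficients (0.5 and 0.3), the helper names `ols` and `two_step`, and the simplification to a single time series instead of a full panel. It only shows the mechanics of the procedure and is not meant as evidence that the procedure is valid.

```python
import numpy as np

def ols(y, X):
    """Least-squares fit of y on X (X must already contain a constant column)."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef, y - X @ coef  # coefficients and residuals

def two_step(x, y):
    """Apply the proposed two-step procedure to one unit's series x and y."""
    x_lag1 = x[1:-1]  # x_{t-1}
    y_lag2 = y[:-2]   # y_{t-2}
    y_now = y[2:]     # y_t
    const = np.ones_like(x_lag1)
    # First stage: x_{t-1} = alpha + beta * y_{t-2} + e_{t-1}
    first_coef, e_lag1 = ols(x_lag1, np.column_stack([const, y_lag2]))
    # Second stage: y_t = beta_0 + slope * e_{t-1} + error
    second_coef, _ = ols(y_now, np.column_stack([const, e_lag1]))
    return first_coef, second_coef, e_lag1, y_lag2

# Hypothetical data with feedback in both directions (assumed for illustration only).
rng = np.random.default_rng(0)
T = 500
x = np.zeros(T)
y = np.zeros(T)
for t in range(1, T):
    x[t] = 0.5 * y[t - 1] + rng.normal()  # past y feeds into x
    y[t] = 0.3 * x[t - 1] + rng.normal()  # past x feeds into y

first_coef, second_coef, e_lag1, y_lag2 = two_step(x, y)
print("first stage (alpha, beta):", first_coef)
print("second stage (beta_0, slope on e_{t-1}):", second_coef)
```

By construction, the first-stage residual is uncorrelated in sample with $y_{t-2}$. Whether that alone is enough to deal with reverse causality is exactly what I am unsure about.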

The proposition makes intuitive sense to me. However, I have not seen it proposed or applied anywhere before; the common remedies for reverse causality are (a) lagging the independent variables and (b) using instrumental variables (IVs). I admit that I may not be a skilled enough econometrician to give an adequate response here, so I was hoping the community could weigh in. Does this method seem viable to you as a control for reverse causality, and have you seen it (or something similar) applied anywhere before?