I have seen quite a few people build neural networks to predict stock prices, so I decided to try my hand at it. I am using an LSTM network and have had decent results, but I am worried about overfitting. I have tried to limit it as much as I know how, but I am still not sure whether it is a problem in my current network.

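```python
import numpy as np
from tensorflow.keras import optimizers
from tensorflow.keras.layers import Activation, Dense, Dropout, Input, LSTM
from tensorflow.keras.models import Model

# Each sample is fed to the LSTM as 5 timesteps with 1 feature per timestep
Model_Input = Input(shape=(5, 1), name='Model_Input')
x = LSTM(50, name='Model_0')(Model_Input)
x = Dropout(0.5, name='Model_dropout_0')(x)
x = Dense(64, name='Dense_0')(x)
x = Activation('sigmoid', name='Sigmoid_0')(x)
x = Dense(1, name='Dense_1')(x)
# A linear Dense(1) stacked on another linear Dense(1) only rescales and shifts its input
x = Dense(1, kernel_initializer='lecun_normal', activation='linear')(x)
Model_Output = Activation('linear', name='Linear_output')(x)

model = Model(inputs=Model_Input, outputs=Model_Output)

# `learning_rate` replaces the deprecated `lr` argument
adam = optimizers.Adam(learning_rate=0.0005)
model.compile(optimizer=adam, loss='mse')

# Normalized_Input_Data: (1259, 5) array of normalized daily rows, prepared earlier (not shown)
# Give the input data a new shape of (1259, 5, 1)
Normalized_Input_Data = np.reshape(Normalized_Input_Data, Normalized_Input_Data.shape + (1,))

# Extract just the closing price from the data (open, high, low, close, and volume are contained)
Normalized_Closing_Price = Normalized_Input_Data[:, 3]

# Train the neural network
history = model.fit(x=Normalized_Input_Data, y=Normalized_Closing_Price, batch_size=10, epochs=500,
                    shuffle=True, validation_split=0.25)
```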
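Running the same reshape and slicing on a small random array makes the resulting shapes easy to check. This is a sketch with made-up data: the 8×5 `raw` array is a hypothetical stand-in for the real normalized open/high/low/close/volume rows. It also shows that the target `Normalized_Input_Data[:, 3]` is one of the five values fed into the model for the very same sample, so the network can learn to copy it rather than forecast it.

```python
import numpy as np

# Hypothetical stand-in for the normalized data: 8 days x 5 columns
# (open, high, low, close, volume). The real data has ~1259 rows.
rng = np.random.default_rng(0)
raw = rng.random((8, 5))

# Same reshape as in the question: (days, 5) -> (days, 5, 1), so the LSTM
# sees the 5 columns of ONE day as 5 "timesteps" with 1 feature each.
X = np.reshape(raw, raw.shape + (1,))

# Same target extraction: column 3 (close) of each row -> shape (days, 1).
y = X[:, 3]

print(X.shape)  # (8, 5, 1)
print(y.shape)  # (8, 1)

# Each target is literally timestep 3 of its own input sample.
print(np.array_equal(X[:, 3, 0], y[:, 0]))  # True
```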

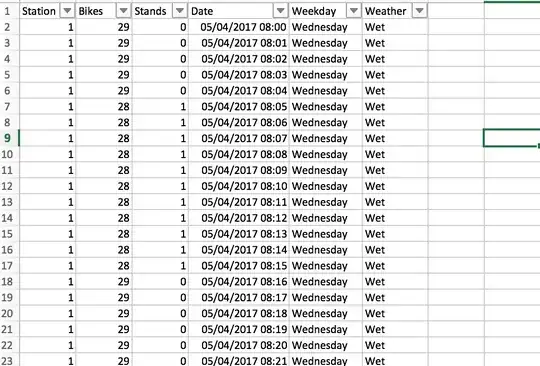

The input data covers a little over the past five years of daily stock data. (At the time of writing, I think the network actually sees 1273 days of data rather than 1259.) I am using 25% of the data for validation. I figured this would be enough, but it may not be. The images below are for Nvidia's stock.

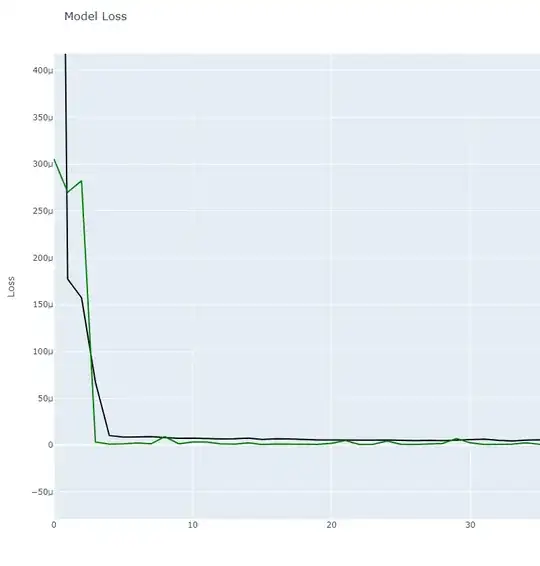
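If the goal is to forecast a future closing price from past prices, a common alternative (not what the code above does) is to build each sample from the previous few days of closing prices and use the following day's close as the target. A minimal sketch, assuming `close` is a 1-D array of normalized daily closing prices in date order; `make_windows` and `window=5` are hypothetical choices for illustration:

```python
import numpy as np

def make_windows(close, window=5):
    """Turn a 1-D price series into (samples, window, 1) inputs and next-day targets.

    Sample i uses close[i : i + window] as input and close[i + window] as target,
    so every target lies strictly after its input window.
    """
    close = np.asarray(close, dtype=float)
    n_samples = len(close) - window
    if n_samples <= 0:
        raise ValueError("series is shorter than window + 1")
    X = np.stack([close[i:i + window] for i in range(n_samples)])
    y = close[window:]
    return X[..., np.newaxis], y.reshape(-1, 1)

# Usage with a toy series 0, 1, ..., 9
X, y = make_windows(np.arange(10), window=5)
print(X.shape, y.shape)  # (5, 5, 1) (5, 1)
print(X[0, :, 0], y[0])  # [0. 1. 2. 3. 4.] [5.]
```

The output shape `(samples, 5, 1)` matches the existing `Input(shape=(5, 1))`, so a model built this way needs no architecture change, only different data.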

Over the first 5-6 epochs the loss changes drastically; after that it seems to level out and stay fairly constant. I am not sure whether the remaining loss is large enough to worry about, or whether there is a way to improve it. Any changes or suggestions for the network architecture are also welcome.
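One way to judge whether a given MSE is worth worrying about is to compare it with a naive baseline on the same normalized validation data, for example a persistence forecast that predicts each day's close to be the previous day's close. A sketch, assuming `val_close` is a 1-D array of normalized closing prices in date order; the function name and the toy numbers are illustrative only:

```python
import numpy as np

def persistence_mse(close):
    """MSE of predicting close[t] with close[t - 1] (a 'no change' forecast)."""
    close = np.asarray(close, dtype=float)
    return float(np.mean((close[1:] - close[:-1]) ** 2))

# Hypothetical normalized closing prices
val_close = np.array([0.50, 0.52, 0.51, 0.55, 0.54])
baseline = persistence_mse(val_close)
print(round(baseline, 6))  # 0.00055
```

If the model's `val_loss` is not clearly below this kind of baseline, it is not doing better than "tomorrow looks like today".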

EDIT

After changing how the training and validation data are split, I also changed the model architecture to keep it from overfitting (as much as I could, anyway).

```python
from tensorflow.keras import optimizers
from tensorflow.keras.callbacks import EarlyStopping
from tensorflow.keras.layers import Activation, Dense, Dropout, Input, LSTM
from tensorflow.keras.models import Model

# Concluded that the first dropout layer(s) needed to be harsher than
# the later ones
Model_Input = Input(shape=(5, 1), name='Model_Input')
x = LSTM(64, name='Model_0')(Model_Input)
x = Dropout(0.5, name='Model_dropout_0')(x)
x = Dense(64, name='Dense_0')(x)
x = Dropout(0.1, name='Model_dropout_1')(x)
x = Activation('sigmoid', name='Sigmoid_0')(x)
x = Dense(1, name='Dense_1')(x)
Model_Output = Activation('linear', name='Linear_output')(x)

model = Model(inputs=Model_Input, outputs=Model_Output)

adam = optimizers.Adam(learning_rate=0.0005)
model.compile(optimizer=adam, loss='mse')

# Early stopping to keep extra epochs from causing overfitting.
# Monitor the validation loss: the training loss usually keeps falling while the model overfits.
Early_Stopping = EarlyStopping(monitor='val_loss', mode='min', patience=50, verbose=1)

# History_* and Validation_* arrays come from the chronological split shown below
history = model.fit(x=History_Input_Data, y=History_Closing_Price, batch_size=128, epochs=750,
                    shuffle=False,
                    validation_data=(Validation_Input_Data, Validation_Closing_Price),
                    callbacks=[Early_Stopping])
```
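To make the `patience` setting concrete: with `mode='min'`, training stops once the monitored value has gone `patience` epochs without reaching a new minimum. The pure-Python sketch below simulates that rule on a list of made-up per-epoch validation losses. It ignores `min_delta`, `baseline`, and the other `EarlyStopping` options, so treat it as an illustration of the idea rather than Keras's exact implementation; `early_stop_epoch` is a hypothetical helper.

```python
def early_stop_epoch(losses, patience):
    """Return (stop_epoch, best_epoch), 0-based, for a min-mode patience rule.

    Training stops at the epoch where `patience` consecutive epochs have
    passed without improving on the best loss seen so far.
    """
    best = float("inf")
    best_epoch = 0
    wait = 0
    for epoch, loss in enumerate(losses):
        if loss < best:
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(losses) - 1, best_epoch  # ran out of epochs without stopping

# Made-up validation losses
val_losses = [0.9, 0.5, 0.3, 0.31, 0.32, 0.29, 0.33, 0.34, 0.35]
print(early_stop_epoch(val_losses, patience=3))  # (8, 5)
```

Keras's `EarlyStopping` also accepts `restore_best_weights=True`, which restores the weights from the best epoch (epoch 5 in this toy run) when training stops early; without it, the model keeps the weights from the last epoch it trained.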

For anyone who needs it, here is how I split the input data before feeding it to the model.

```python
# Split the dataset into training and validation datasets
# Use the first 75% of the data to train on (this is the oldest data)
# Use the last 25% of the data to validate on (this is the most recent data)
Length_Of_Data = len(Normalized_Input_Data)
Validation_Length = int(Length_Of_Data * 0.25)
History_Length = Length_Of_Data - Validation_Length

# 956 days' worth of data for the network to train on
History_Data = Normalized_Input_Data[:History_Length]

# 318 days' worth of data (most recent dates) to validate on
Validation_Data = Normalized_Input_Data[History_Length:]
```
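The split above is applied to data that has already been normalized. The question does not show how `Normalized_Input_Data` was produced, but if the scaling statistics (for example a min and max) were computed over the whole series, the training data has indirectly "seen" the validation period. A minimal sketch of the stricter order, assuming min-max scaling and a `(days, features)` array ordered oldest to newest; `chronological_split_minmax` is a hypothetical helper that reuses the same 25% split arithmetic:

```python
import numpy as np

def chronological_split_minmax(raw, val_fraction=0.25):
    """Split rows in time order, then min-max scale using training rows only.

    Validation values may fall outside [0, 1]; that is expected, because
    the scaler is not allowed to see the future.
    """
    raw = np.asarray(raw, dtype=float)
    val_len = int(len(raw) * val_fraction)
    train, val = raw[:len(raw) - val_len], raw[len(raw) - val_len:]
    lo, hi = train.min(axis=0), train.max(axis=0)
    scale = np.where(hi > lo, hi - lo, 1.0)  # avoid division by zero for flat columns
    return (train - lo) / scale, (val - lo) / scale

# Toy single-column price series: 6 training rows, 2 validation rows
prices = np.array([[10.0], [12.0], [11.0], [14.0], [13.0], [12.0], [16.0], [18.0]])
train_n, val_n = chronological_split_minmax(prices)
# Training rows now span [0, 1]; the validation rows come out as 1.5 and 2.0
```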

Updated graphs of the prices and loss (for the validation dataset).

After about 6 epochs, both the training loss and the validation loss seem to level out. However, this is just for Nvidia's stock; different stocks give different results. I added early stopping to try to keep the accuracy similar across multiple stocks.
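Because a single 75/25 split gives only one validation number per stock, some of the variation between stocks may come from the particular validation period. One option is walk-forward (expanding-window) validation: train on everything before a block, validate on that block, and repeat for several later blocks. The sketch below generates such index splits and is similar in spirit to scikit-learn's `TimeSeriesSplit`; `walk_forward_splits` and `n_folds=4` are hypothetical choices, and how the per-fold losses are averaged is left to you.

```python
import numpy as np

def walk_forward_splits(n_samples, n_folds=4):
    """Yield (train_idx, val_idx) pairs for expanding-window validation.

    Each fold trains on every sample before its validation block, so the
    model is always evaluated on data that comes after its training data.
    """
    val_size = n_samples // (n_folds + 1)
    if val_size == 0:
        raise ValueError("not enough samples for this many folds")
    for k in range(n_folds):
        train_end = n_samples - (n_folds - k) * val_size
        yield np.arange(train_end), np.arange(train_end, train_end + val_size)

for train_idx, val_idx in walk_forward_splits(10, n_folds=4):
    print(len(train_idx), val_idx.tolist())
# 2 [2, 3]
# 4 [4, 5]
# 6 [6, 7]
# 8 [8, 9]
```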