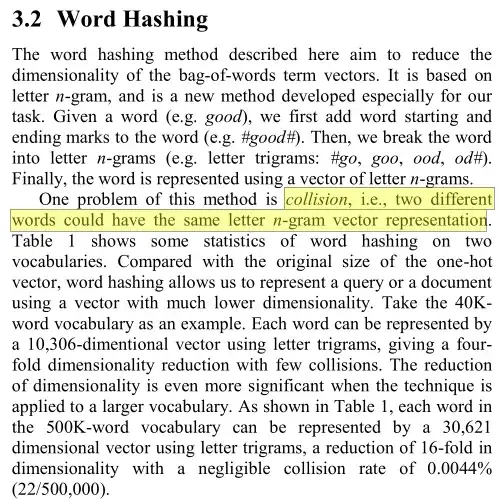

Apparently they did found 22 such collisions in their data.

What they do, is they first first divide words into $n$-grams and then one-hot encode into vector. This is not described in the paper explicitly, but may be guessed from the context, that each position in the vector is occurrence (coded as one), or absence (coded as zero), of the particular $n$-gram in the word. That is the reason why they observed $10,306$ unique vectors for $40\text{k}$ word set and $30,621$ for $500\text{k}$ word set. Notice that $30,621^{1/3} = 31.28$ and $10,306^{1/3} = 21.76$ (for three-grams), where number of possible three-grams build from the set of the Latin characters, -, and #, is $28^3=21,952$, while non-standard characters like æ, or ö, may also appear, so the length of the vectors is the number of unique $n$-grams observed in the data. Of course, language is not build by combining letters in random combinations, so not all combinations will appear, or will be equally popular, hence the larger collection of words, the more tokens we'll observe.

What this also means is that neither order, nor number of times $n$-grams appear is accounted for. So for example, "aaa" and "aaaa" both contain only the #aa, aaa, aa# 3-grams, so both would be encoded as the same vector. As you can see from the paper, such cases are very rare, so it would be hard to come up with a more realistic example, at least no such example immediately comes to my mind. I skimmed through the paper, but didn't find what was the data that they used, but you could always preprocess the data and check the duplicates by hand to verify what they were.

Still, tl;dr is that collisions should be a rare case for human language. Of course, this does not have to be the case for all sequences. For example, if you encoded DNA sequences like this, I'd imagine there would be a lot of collisions, since they consist of only four nucleobases ( A, G, C, and T), so there is a much smaller number of possible $n$-grams among them.