I have a lot of 1-D data distributed around several different means. I'm looking for a general way to identify these little clusters and separate them.

I've implemented KDE fits for this. My first idea was to look for the local maxima of the KDE, but that would not be very robust, because the result depends on the bandwidth chosen for the estimate.
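To make the bandwidth dependence concrete, here is a minimal sketch using `scipy.stats.gaussian_kde` and `scipy.signal.find_peaks`. The three-cluster data, the grid and the `kde_peaks` helper are made up for illustration; they are not my real data.

```python
import numpy as np
from scipy.signal import find_peaks
from scipy.stats import gaussian_kde

rng = np.random.default_rng(0)

# Hypothetical 1-D data: three clusters with different means and spreads
data = np.concatenate([
    rng.normal(10.0, 1.0, 500),
    rng.normal(20.0, 1.5, 500),
    rng.normal(35.0, 2.0, 500),
])
grid = np.linspace(data.min(), data.max(), 2000)


def kde_peaks(samples, grid, bw_method=None):
    """Return the grid positions where the KDE has a local maximum."""
    density = gaussian_kde(samples, bw_method=bw_method)(grid)
    idx, _ = find_peaks(density)
    return grid[idx]


# A scalar bw_method is used directly as the bandwidth factor
peak_counts = {bw: kde_peaks(data, grid, bw).size for bw in (0.02, 0.1, 0.5)}
for bw, count in peak_counts.items():
    print(f"bandwidth factor {bw}: {count} local maxima")
```

The number of maxima changes with the bandwidth factor: a small factor produces many spurious peaks and a large one merges neighbouring clusters, which is exactly what makes this approach fragile.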

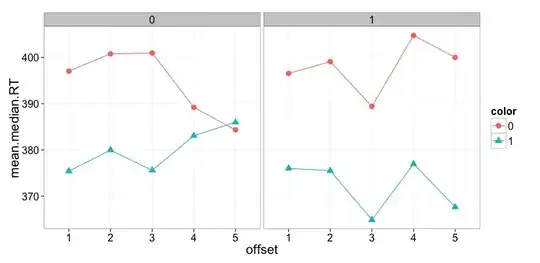

Here is one example.

I'm looking for a general method that would give me, in this example, the mean and standard deviation of each of these three clusters (there could be more or fewer than three), each computed from its own points only, using maybe scipy.stats or sklearn.

Thank you!

Update: a Gaussian mixture might work really well for me. The main problem is just the shape of the data being fitted; it is a matter of implementation.

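```python
from sklearn.mixture import GaussianMixture
from scipy.stats import norm
import numpy as np
import matplotlib.pyplot as plt

mean = 34
std = 10

# Evaluation grid as a column vector: sklearn expects (n_samples, n_features)
xpdf = np.linspace(20, 50, 1000).reshape(-1, 1)

# Note: y holds the pdf values on the grid, not samples drawn from the distribution
y = norm.pdf(xpdf, mean, std)
y = np.array(y).reshape(-1, 1)

# Fit a single-component mixture and evaluate its density on the grid
model = GaussianMixture(1).fit(y)
ypdf = np.exp(model.score_samples(xpdf))

plt.hist(y, bins=100, density=True)
plt.plot(xpdf, ypdf, '-r')
plt.show()
```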
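The snippet above passes density values to `fit`, whereas a mixture model is normally fitted to the observations themselves. Here is a minimal sketch of that variant, assuming the raw 1-D samples are available as a column vector. The synthetic data, the `fit_clusters` helper and the use of BIC to pick the number of components are my own assumptions, not a definitive implementation.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

rng = np.random.default_rng(0)

# Hypothetical raw observations, shaped (n_samples, 1) as sklearn expects
samples = np.concatenate([
    rng.normal(10.0, 1.0, 500),
    rng.normal(20.0, 1.5, 500),
    rng.normal(35.0, 2.0, 500),
]).reshape(-1, 1)


def fit_clusters(samples, max_components=6, random_state=0):
    """Fit mixtures with 1..max_components components and keep the lowest BIC."""
    models = [
        GaussianMixture(n_components=k, random_state=random_state).fit(samples)
        for k in range(1, max_components + 1)
    ]
    best = min(models, key=lambda m: m.bic(samples))
    means = best.means_.ravel()
    # For 1-D data each covariance matrix is 1x1, so its square root is the std
    stds = np.sqrt(best.covariances_.ravel())
    order = np.argsort(means)
    return means[order], stds[order], best.weights_[order]


means, stds, weights = fit_clusters(samples)
for m, s, w in zip(means, stds, weights):
    print(f"mean={m:.2f}  std={s:.2f}  weight={w:.2f}")
```

Each component's mean and standard deviation then describe one cluster on its own, and the number of clusters does not have to be fixed in advance, only bounded by `max_components`.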