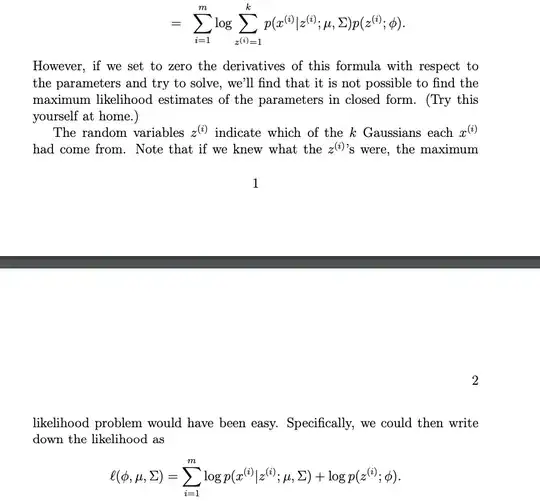

I saw the following notes from CS229 (screenshot above). I am confused about how the two equations are equivalent. How were they able to distribute the $\log$ inside the summation? I don't see how knowing the hidden variable $z$ allows us to write it this way.

@Xi'an Sorry about that. It is the popular Machine Learning class at Stanford. http://cs229.stanford.edu/ – David Jun 04 '20 at 16:50

2 Answers

The meaning of $z^{(i)}$ differs between the two equations. In the observed log-likelihood $$\ell^o(\theta|\mathbf x)=\sum_{i=1}^m \log \sum_{z^{(i)}=1}^k p(z^{(i)}|\theta) p(x^{(i)}|z^{(i)},\theta)$$ $z^{(i)}$ is merely a summation index, and the above could equally be written $$\ell^o(\theta|\mathbf x)=\sum_{i=1}^m \log \sum_{j=1}^k p(j|\theta) p(x^{(i)}|j,\theta)$$ In the completed log-likelihood $$\ell^c(\theta|\mathbf x,\mathbf z)=\sum_{i=1}^m \log p(z^{(i)}|\theta) p(x^{(i)}|z^{(i)},\theta)$$ $z^{(i)}$ is a latent variable indicating which component $x^{(i)}$ was drawn from.
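
A minimal Python sketch contrasting the two quantities, assuming a one-dimensional Gaussian mixture in which $p(j|\theta)$ is a mixture weight and $p(x|j,\theta)$ is a normal density. The function names, parameters and data below are illustrative only (not from the CS229 notes), and component labels are 0-based. The observed version keeps the sum over every component inside the log; the completed version keeps only the term for the given label, so its log splits into two pieces.

```python
import math

def normal_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2) evaluated at x."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def observed_loglik(xs, weights, mus, sigmas):
    """l^o(theta | x): the sum over components j sits INSIDE the log."""
    total = 0.0
    for x in xs:
        mixture = sum(w * normal_pdf(x, m, s) for w, m, s in zip(weights, mus, sigmas))
        total += math.log(mixture)
    return total

def completed_loglik(xs, zs, weights, mus, sigmas):
    """l^c(theta | x, z): z_i is given, so one product sits inside the log and splits."""
    total = 0.0
    for x, z in zip(xs, zs):
        total += math.log(weights[z]) + math.log(normal_pdf(x, mus[z], sigmas[z]))
    return total

# Illustrative parameters (k = 2) and data; labels are 0-based component indices.
weights, mus, sigmas = [0.3, 0.7], [-2.0, 3.0], [1.0, 1.5]
xs = [-1.8, 2.5, 3.9]
zs = [0, 1, 1]
print(observed_loglik(xs, weights, mus, sigmas))
print(completed_loglik(xs, zs, weights, mus, sigmas))
```

Because every term inside the observed log is positive, the observed value is never smaller than the completed one for any labelling, and the two coincide when $k=1$.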

The completed log-likelihood $\ell^c(\theta|\mathbf x,\mathbf z)$ is the log-likelihood associated with the joint distribution of the random sample $(\mathbf x,\mathbf z)$. The observed log-likelihood $\ell^o(\theta|\mathbf x)$ is the log-likelihood associated with the marginal distribution of the random sample $\mathbf x$.
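
A short derivation, using only the quantities defined above, shows how the marginal and joint versions are related. By Bayes' rule the posterior of each label is $$p(z^{(i)}|x^{(i)},\theta)=\frac{p(z^{(i)}|\theta)\,p(x^{(i)}|z^{(i)},\theta)}{\sum_{j=1}^k p(j|\theta)\,p(x^{(i)}|j,\theta)},$$ so taking logs and summing over $i$ gives, for any labelling $\mathbf z$, $$\ell^o(\theta|\mathbf x)=\ell^c(\theta|\mathbf x,\mathbf z)-\sum_{i=1}^m \log p(z^{(i)}|x^{(i)},\theta).$$ The last sum is non-positive, so $\ell^o(\theta|\mathbf x)\ge\ell^c(\theta|\mathbf x,\mathbf z)$, with equality only when every given label has posterior probability one.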

Wait, I'm a bit confused by the terminology. In http://cs229.stanford.edu/notes/cs229-notes7b.pdf, $z^{(i)}$ appears to be called a "latent" variable in what you call the "observed" log-likelihood. In the observed log-likelihood, isn't $z^{(i)}$ hidden/unobserved, whereas in the completed log-likelihood it is known (so no longer latent)? – David Jun 04 '20 at 16:56

The latent variable remains latent in both formulas, but in the "completed" likelihood it is conditioned upon, while in the "observed" likelihood it is integrated out, hence the sum inside the log. For instance, the EM algorithm makes use of the "completed" likelihood by replacing the unobserved latent variables with iterated versions of their expectations. – Xi'an Jun 04 '20 at 17:18
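
A minimal sketch of that idea, assuming a one-dimensional Gaussian mixture for illustration; `normal_pdf`, `observed_loglik` and `em_step` are hypothetical names, not code from the CS229 notes. For a mixture, the completed log-likelihood is linear in the indicators $\mathbb 1\{z^{(i)}=j\}$, so its conditional expectation is obtained by replacing each indicator with $p(z^{(i)}=j|x^{(i)},\theta)$ at the current parameters (E-step); maximising that expectation gives weighted estimates (M-step).

```python
import math
import random

def normal_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2) evaluated at x."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def observed_loglik(xs, weights, mus, sigmas):
    """l^o(theta | x): log of the mixture density, summed over observations."""
    return sum(
        math.log(sum(w * normal_pdf(x, m, s) for w, m, s in zip(weights, mus, sigmas)))
        for x in xs
    )

def em_step(xs, weights, mus, sigmas):
    """One EM iteration for a 1-D Gaussian mixture with k components."""
    m, k = len(xs), len(weights)
    # E-step: r[i][j] = p(z_i = j | x_i, theta), the conditional expectation of
    # the unobserved indicator 1{z_i = j} under the current parameters.
    r = []
    for x in xs:
        joint = [weights[j] * normal_pdf(x, mus[j], sigmas[j]) for j in range(k)]
        total = sum(joint)
        r.append([p / total for p in joint])
    # M-step: maximise the expected completed log-likelihood; the indicators
    # are replaced by r, giving weighted ("soft-count") estimates.
    n = [sum(r[i][j] for i in range(m)) for j in range(k)]
    new_weights = [n[j] / m for j in range(k)]
    new_mus = [sum(r[i][j] * xs[i] for i in range(m)) / n[j] for j in range(k)]
    new_sigmas = []
    for j in range(k):
        var = sum(r[i][j] * (xs[i] - new_mus[j]) ** 2 for i in range(m)) / n[j]
        new_sigmas.append(math.sqrt(max(var, 1e-12)))  # floor guards against a collapsed component
    return new_weights, new_mus, new_sigmas

# Usage: two well-separated clusters; the labels are generated but never shown to EM.
random.seed(0)
xs = [random.gauss(-2.0, 1.0) for _ in range(200)] + [random.gauss(3.0, 1.0) for _ in range(300)]
theta = ([0.5, 0.5], [-1.0, 1.0], [1.0, 1.0])
for _ in range(50):
    theta = em_step(xs, *theta)
print(theta, observed_loglik(xs, *theta))
```

Each iteration cannot decrease $\ell^o$ (the standard EM guarantee), even though $\mathbf z$ is never observed; only its conditional expectation enters the M-step.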

Is there a difference between "latent" and "unobserved?" For the completed likelihood, I was under the impression that $z^{(i)}$ is now known, which I thought means it's no longer latent? – David Jun 04 '20 at 17:24

No, "latent" is part of the terminology in this part of the literature, but it means the same as "unobserved", "auxiliary", "demarginalised", etc. It starts from one (among many) representation of the likelihood as $$p(\mathbf x|\theta)=\int p(\mathbf x,\mathbf z|\theta)\,\text{d}\mathbf z$$ – Xi'an Jun 04 '20 at 17:27
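
For the discrete mixture label in the CS229 notes, the integral over $\mathbf z$ becomes a sum. Assuming the pairs $(x^{(i)},z^{(i)})$ are independent across $i$, $$\log p(\mathbf x|\theta)=\sum_{i=1}^m \log \sum_{j=1}^k p(x^{(i)},z^{(i)}=j|\theta)=\sum_{i=1}^m \log \sum_{j=1}^k p(z^{(i)}=j|\theta)\,p(x^{(i)}|z^{(i)}=j,\theta),$$ which is the observed log-likelihood $\ell^o(\theta|\mathbf x)$. The completed log-likelihood is the log of the integrand, $\log p(\mathbf x,\mathbf z|\theta)$, before $\mathbf z$ is summed out.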

@Xi'an I just took a look at the notes the OP linked. It appears that the completed log likelihood is written based on assuming that $z^{(i)}$ is known (see bottom of page 1 in http://cs229.stanford.edu/notes/cs229-notes7b.pdf). So I believe they are no longer latent in the completed log likelihood, and that is why we are able to write the likelihood as a joint distribution of $z$ and $x$. – 24n8 Jun 04 '20 at 17:35

Yes, this is a joint density, but, again, when looking at the EM algorithm, the observed data remain $\mathbf x$ and only $\mathbf x$. – Xi'an Jun 04 '20 at 17:56

Right, although my understanding is that in the "E-step" a value for $z$ is guessed, and this guessed value is used in the M-step. So I think $z$ is known/observed for the purpose of the M-step. – 24n8 Jun 04 '20 at 18:04

Somewhat related: why, for linear regression, is the likelihood written in terms of the conditional distribution $p(y|x)$ instead of the joint distribution $p(x,y)$? – David Jun 04 '20 at 18:18

This is another and unrelated question. [See e.g. this answer](https://stats.stackexchange.com/q/144826/7224). One reason is that the distribution of $X$ is irrelevant. Another is that, _given_ a new value of $x$, one wants to predict the associated value of $y$. – Xi'an Jun 04 '20 at 19:15
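
A minimal sketch of the first point ("the distribution of $X$ is irrelevant"), assuming a Gaussian linear model for $y$ given $x$ and a separate normal model for $x$ whose parameters (`mu_x`, `sd_x`, hypothetical) share nothing with the regression coefficients. The joint log-likelihood is then the conditional one plus $\log p(\mathbf x)$, a term that does not involve the coefficients, so both are maximised at the same coefficients.

```python
import math
import random

def gaussian_logpdf(v, mean, sd):
    """log N(v; mean, sd^2)."""
    return -0.5 * math.log(2 * math.pi * sd ** 2) - 0.5 * ((v - mean) / sd) ** 2

def conditional_loglik(xs, ys, b0, b1, sigma):
    """log p(y | x, beta): the likelihood usually written for linear regression."""
    return sum(gaussian_logpdf(y, b0 + b1 * x, sigma) for x, y in zip(xs, ys))

def joint_loglik(xs, ys, b0, b1, sigma, mu_x, sd_x):
    """log p(x, y) = log p(y | x, beta) + log p(x), with an assumed N(mu_x, sd_x^2) model for x."""
    log_px = sum(gaussian_logpdf(x, mu_x, sd_x) for x in xs)
    return conditional_loglik(xs, ys, b0, b1, sigma) + log_px

random.seed(2)
xs = [random.gauss(5.0, 3.0) for _ in range(50)]
ys = [1.0 + 2.0 * x + random.gauss(0.0, 1.0) for x in xs]

# The gap between the two log-likelihoods is log p(x) whatever (b0, b1) is,
# so comparing coefficient values gives the same ranking under either one.
for b0, b1 in [(1.0, 2.0), (0.0, 2.5)]:
    gap = joint_loglik(xs, ys, b0, b1, 1.0, 5.0, 3.0) - conditional_loglik(xs, ys, b0, b1, 1.0)
    print(b0, b1, gap)
```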

The log cannot be distributed over a summation, but it can be distributed over a product. If the value of $z^{(i)}$ is unknown, the argument of the log is the sum over all possible values of $z^{(i)}$. But when the value is known, the sum reduces to a single term, which is just the product of the two probabilities, and the log of that product splits into a sum of logs.
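
A small numeric illustration of that point, using made-up values (hypothetical, for illustration only) for the per-component terms $p(z^{(i)}=j|\theta)\,p(x^{(i)}|z^{(i)}=j,\theta)$ of a single observation with $k=3$:

```python
import math

# Hypothetical values of p(z = j | theta) * p(x | z = j, theta) for one observation, k = 3.
terms = [0.02, 0.10, 0.05]

# The observed log-likelihood needs the log of the sum; it is not the sum of the logs.
log_of_sum = math.log(sum(terms))
sum_of_logs = sum(math.log(t) for t in terms)
print(log_of_sum, sum_of_logs)

# The log does split a product: log(p(z) p(x|z)) = log p(z) + log p(x|z).
p_z, p_x_given_z = 0.4, 0.25
print(math.log(p_z * p_x_given_z), math.log(p_z) + math.log(p_x_given_z))

# With the label known (here z = 1, 0-based), the indicator 1{z = j} keeps a single term.
known_z = 1
selected = sum(t for j, t in enumerate(terms) if j == known_z)
print(math.log(selected))
```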

To understand why it reduces to one term, you need to think from a different point of view. The original log-likelihood is derived under the assumption that we don't know which Gaussian in the mixture each observation comes from. This is an ignorant observer's point of view: the observer didn't see how the sample was drawn and can only guess, probabilistically, which class each observation came from.

However, there is a different point of view. If you are the person who actually drew the sample, you know exactly where each observation came from. In that case, only one mixture component contributes to each observation, and the summation reduces to a single term. The EM algorithm works from this view, but it assumes we don't know the parameters of the distribution either and estimates them iteratively.
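
The "person who drew the sample" view can be sketched in code, assuming a one-dimensional Gaussian mixture with hypothetical true parameters; `complete_data_mle` is an illustrative name. Because each label is recorded, the completed log-likelihood has a closed-form maximiser: the weights are the label proportions, and each component's mean and standard deviation are fitted from its own points. EM is needed only because, in practice, the labels are not recorded.

```python
import math
import random

def complete_data_mle(xs, zs, k):
    """MLE of a 1-D Gaussian mixture when every label z_i is observed.

    With z known, the weights are the label proportions and each component's
    mean and standard deviation are fitted from the points assigned to it.
    Assumes every component has at least one point.
    """
    n = len(xs)
    weights, mus, sigmas = [], [], []
    for j in range(k):
        pts = [x for x, z in zip(xs, zs) if z == j]
        nj = len(pts)
        mu = sum(pts) / nj
        var = sum((x - mu) ** 2 for x in pts) / nj  # MLE divides by n_j, not n_j - 1
        weights.append(nj / n)
        mus.append(mu)
        sigmas.append(math.sqrt(var))
    return weights, mus, sigmas

random.seed(1)
true_w, true_mu, true_sd = [0.3, 0.7], [-2.0, 3.0], [1.0, 1.5]
# Draw the component first and record it, then draw x from that component.
zs = [0 if random.random() < true_w[0] else 1 for _ in range(1000)]
xs = [random.gauss(true_mu[z], true_sd[z]) for z in zs]
print(complete_data_mle(xs, zs, 2))
```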

Sorry, I don't quite understand what you mean by "But when the value is known, it reduces to one term." How does it reduce to 1 term? – David Jun 04 '20 at 02:00

@David Assume an ideal world in which we know which mixture component each observation comes from. In that case, only one Gaussian in the mixture matters for that observation. – hbadger19042 Jun 04 '20 at 07:39