I often read that training "overparameterized" networks works well in practice, and perhaps no one yet knows exactly why yet. However, when I look at the number of samples and parameters many NNs use, they are still fitting with more data than parameters.

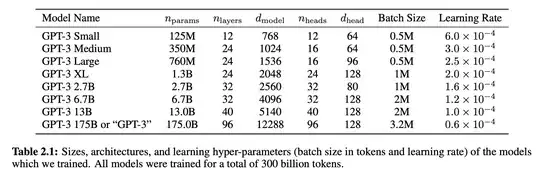

Consider for example the recently announced GPT-3 language model with as many as 175 Billion parameters. They never even tried fitting a model with more parameters than tokens (300 billion tokens).

Would one consider this neural net overparameterized?

If so, what's the criteria, heuristic or rule of thumb if you with that would merit that designation for a model? Is it, for example:

- the ratio of the # of model parameters $p$ and data points $n$

- the fact that a model interpolates the training data (the model achieves a training loss of 0)

- all / any of the above

- any other measures?