I wasn't able to find a satisfying answer on this topic. I hope someone who understands the subject well can shed some light on it.

This is not very important, just to fix notation. In the following we work with exponential families, with density
$$ \exp \left\{ \frac {y \theta - b( \theta ) } { a(\phi)} + c(y,\phi) \right\}. $$
The deviance is defined as twice the difference in log-likelihood between the saturated model and the fitted model, multiplied by $\phi$:
$$D = 2 \phi \left ( l(y, \phi , y ) - l ( \hat{\mu}, \phi, y ) \right ), $$
and the scaled deviance as
$$ D^* = \frac D {\phi}. $$
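
To make the notation concrete, here is a worked instance for a Poisson response. The Poisson choice is my own assumption for illustration; nothing above singles it out. With mean $\mu$ we have $\theta = \log \mu$, $b(\theta) = e^{\theta}$, $a(\phi) = \phi = 1$ and $c(y, \phi) = -\log y!$, so summing the log-likelihood over independent observations $y_1, \dots, y_n$ gives
$$ D = D^* = 2 \sum_{i=1}^{n} \left\{ y_i \log \frac{y_i}{\hat{\mu}_i} - ( y_i - \hat{\mu}_i ) \right\}, $$
with the convention $y_i \log y_i = 0$ when $y_i = 0$.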

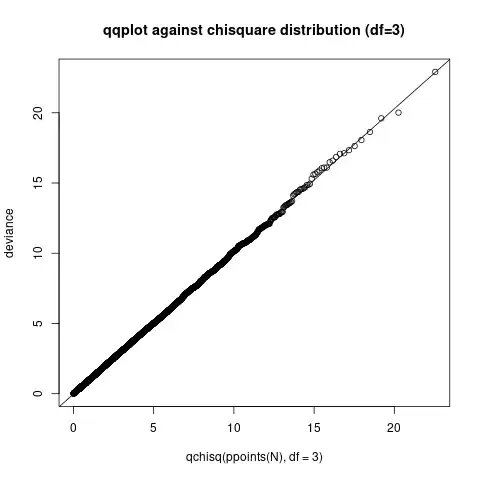

When one compares two nested models, the goal is to check the goodness of fit by testing the null hypothesis that the smaller model fits the data as well as the larger one. To do so, we can compute $D_0^* - D_1^*$, where $D_0^*$ is the scaled deviance of the smaller model and $D_1^*$ that of the larger model. Under the null hypothesis, this difference should approximately follow a $\chi^2$ distribution whose number of degrees of freedom is the difference in the number of parameters between the two models.
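
To make the test concrete, here is a minimal sketch in Python (standard library only) for a case I picked because everything is available in closed form: Poisson counts in two groups, where the smaller model has one common mean and the larger model has one mean per group. The data and the names `poisson_deviance` and `chi2_sf_1df` are made up for illustration. For the Poisson family $\phi = 1$, so the scaled deviance equals the deviance, and the difference is compared with a $\chi^2$ distribution on $2 - 1 = 1$ degree of freedom.

```python
import math

def poisson_deviance(y, mu):
    """Poisson deviance 2 * sum(y*log(y/mu) - (y - mu)), taking 0*log(0) as 0."""
    total = 0.0
    for yi, mi in zip(y, mu):
        if yi > 0:
            total += yi * math.log(yi / mi)
        total -= yi - mi
    return 2.0 * total

def chi2_sf_1df(x):
    """Upper tail probability of a chi-squared variable with 1 degree of freedom."""
    return math.erfc(math.sqrt(x / 2.0))

# Hypothetical counts for two groups (made-up numbers, for illustration only).
group_a = [3, 5, 2, 4, 6]
group_b = [7, 4, 8, 6, 9]
y = group_a + group_b

# Smaller model: one common mean. Larger model: one mean per group.
# In both cases the maximum likelihood fitted values are sample means.
mean_all = sum(y) / len(y)
mean_a = sum(group_a) / len(group_a)
mean_b = sum(group_b) / len(group_b)
mu0 = [mean_all] * len(y)
mu1 = [mean_a] * len(group_a) + [mean_b] * len(group_b)

# phi = 1 for the Poisson family, so D* = D.
d0 = poisson_deviance(y, mu0)
d1 = poisson_deviance(y, mu1)
stat = d0 - d1  # compared with chi-squared on 1 degree of freedom
p_value = chi2_sf_1df(stat)
print(f"D0* - D1* = {stat:.3f}, p-value = {p_value:.3f}")
```

A small p-value would count as evidence against the smaller model, but only to the extent that the $\chi^2$ approximation can be trusted for this sample size.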

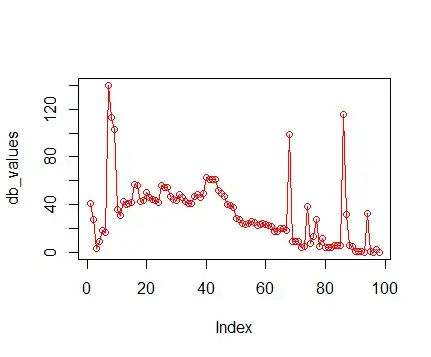

My question is the following: how can one test whether that approximation is good? My professor is not very precise on this topic; he only mentions doing "simulations". What does that mean?
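
My best guess at what "simulations" could mean is a Monte Carlo check: generate many data sets for which the smaller model is true, compute $D_0^* - D_1^*$ on each, and see how often it exceeds the $\chi^2$ critical value. If the approximation is good, the rejection rate at the 5% level should be close to 0.05. Below is a minimal sketch under my own assumptions (Poisson counts, two groups of equal size, a true common mean fixed by hand). The helper names `poisson_draw`, `deviance_difference` and `rejection_rate` are hypothetical, and this is not necessarily what my professor has in mind.

```python
import math
import random

def poisson_draw(rng, mu):
    """Draw one Poisson(mu) variate with Knuth's multiplication method (fine for small mu)."""
    limit = math.exp(-mu)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k

def deviance_difference(group_a, group_b):
    """D0* - D1* for Poisson counts: common mean versus one mean per group (phi = 1)."""
    y = group_a + group_b
    mean_all = sum(y) / len(y)
    stat = 0.0
    for group in (group_a, group_b):
        mean_g = sum(group) / len(group)
        if mean_g > 0:  # a group of all zeros contributes nothing
            stat += 2.0 * sum(group) * math.log(mean_g / mean_all)
    return max(stat, 0.0)  # guard against tiny negative rounding errors

def rejection_rate(n_per_group, mu, n_sims=5000, seed=0):
    """Share of simulated null data sets where the statistic exceeds the 5% critical value."""
    rng = random.Random(seed)
    critical = 3.841458820694124  # 95% quantile of chi-squared with 1 degree of freedom
    rejections = 0
    for _ in range(n_sims):
        # Both groups share the same mean, so the smaller model is true.
        a = [poisson_draw(rng, mu) for _ in range(n_per_group)]
        b = [poisson_draw(rng, mu) for _ in range(n_per_group)]
        if deviance_difference(a, b) > critical:
            rejections += 1
    return rejections / n_sims

# Compare a small and a moderate sample size against the nominal 0.05.
for n in (5, 50):
    print(n, rejection_rate(n, mu=2.0))
```

With real data the true parameters are unknown, so I suppose one would simulate from the fitted smaller model instead of a hand-picked `mu`, and could also compare the whole simulated distribution of the statistic with the $\chi^2$ one instead of a single rejection rate.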