1.a Related to the Variance/Bias trade off.

Bias / variance tradeoff math

You could see the regularization as a form of shrinking the parameters.

When you are fitting a model to data then the you need to consider that your data (and your resulting estimates) are made/generated from two components:

$$ \text{data $=$ deterministic part $+$ noise }$$

Your estimates are not only fitting the deterministic part (which is the part that we wish to capture with the parameters) but also the noise.

The fitting to the noise (which is overfitting, because we should not capture the noise with our estimate of the model, as this can not be generalized, has no external validity) is something that we wish to reduce.

By using regularization, by shrinking the parameters, we reduce the sample variance of the estimates, and it will reduce the tendency to fit the random noise. So that is a good thing.

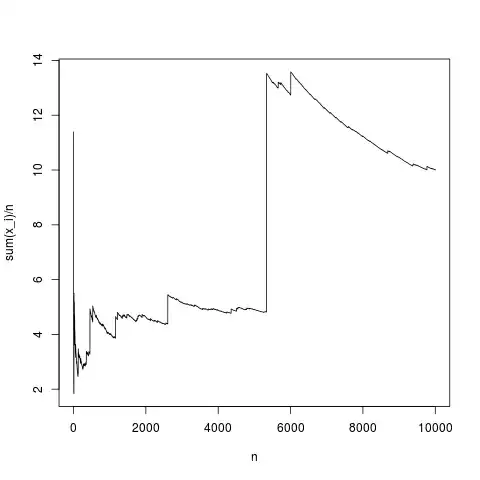

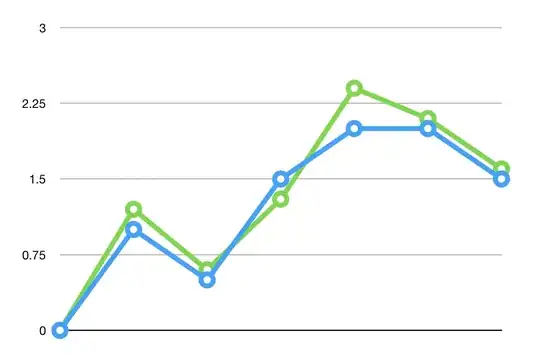

At the same time the shrinking will also introduce bias, but we can find some optimal amount based on some computations with prior knowledge or based on data and cross validation. In the graph below, from my answer to the previously mentioned question, you can see how it works for a single parameter model (estimate of the mean only), but it will work similarly for a linear model.

1.b On average, shrinking the coefficients, when done in the right amount, will lead to a net smaller error.

Intuition: sometimes your estimate is too high (in which case shrinking improves), sometimes your estimate too low (in which case shrinking makes it worse).

Note that shrinking the parameter does not equaly influence those errors... we are not shifting the biased parameter estimate by some same distance independent from the value of the unbiased estimate (in which case there would be indeed no net improvement with the bias)

We are shifting with a factor that is larger if the estimate is larger away from zero. The result is that the improvement when we overestimated the parameter is larger than the detoriation when underestimated the parameter. So we are able to make the improvements larger than the detoriations and the net profit/loss will be positive

In formula's: The distribution of some non-biased parameter estimate might be some normal distribution say:$$\hat\beta\sim\mathcal{N}(\beta, \epsilon_{\hat\beta}^2)$$

and for a shrunken (biased) parameter estimate is

$$c\hat\beta \sim \mathcal{N}(c\beta, c^2\epsilon_{\hat\beta}^2)$$

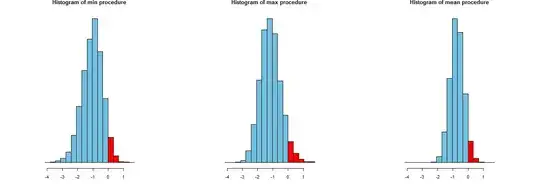

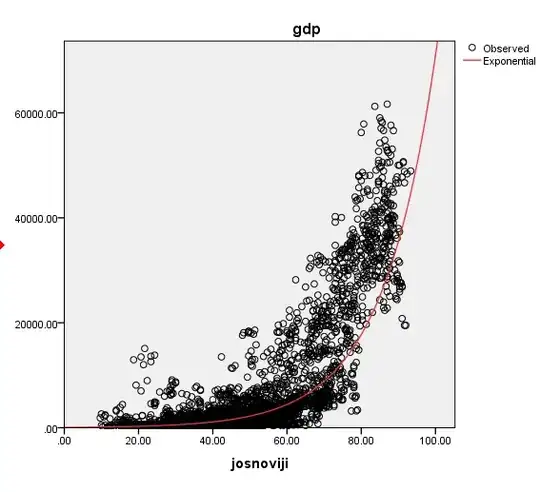

These are the curves in the left image. The black one is for the non-biased where $c=1$. The mean total error of the parameter estimate, a sum of bias and variance, is then

$$E[(c\hat\beta-\beta)^2]=\underbrace{(\beta-c\beta)^2 }_{\text{bias of $\hat\beta$}}+\underbrace{ c^2 \epsilon_{c\hat\beta}^2}_{\text{variance of $c\hat\beta$}}$$with derivative

$$\frac{\partial}{\partial c} E[(c\hat\beta-\beta)^2]=-2\hat\beta(\beta-c\beta)+2 c\epsilon_{c\hat\beta}^2$$

which is positive for $c=1$ which means that $c=1$ is not an optimum and that reducing $c$ when $c=1$ leads to a smaller total error. The variance term will relatively decrease more than the bias term increases (and in fact for $c=1$ the bias term does not decrease, the derivative is zero)

2. Related to prior knowledge and a Bayesian estimate

You can see the regularization as the prior knowledge that the coefficients must not be too large. (and there must be some questions around here where it is demonstrated that regularization is equal to a particular prior)

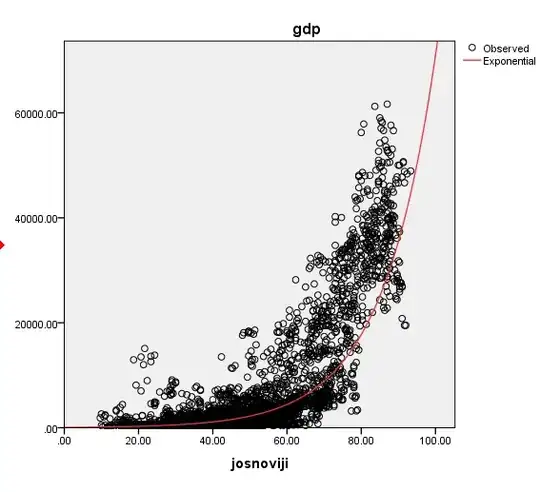

This prior is especially useful in a setting where you are fitting with a large amount of regressors, for which you can reasonably know that many are redundant, and for which you can know that most coefficients should be equal to zero or close to zero.

(So this fitting with a lot of redundant parameters goes a bit further than your two parameter model. For the two parameters the regularization doesn't, at first sight, seem so, useful and in that case the profit by applying a prior that places the parameters closer to zero is only a small advantage)

If you are applying the right prior information then your predictions will be better. This you can see in this question Are there any examples where Bayesian credible intervals are obviously inferior to frequentist confidence intervals

In my answer to that question I write:

The credible interval makes an improvement by including information about the marginal distribution of $\theta$ and in this way it will be able to make smaller intervals without giving up on the average coverage which is still $\alpha \%$. (But it becomes less reliable/fails when the additional assumption, about the prior, is not true)

In the example the credible interval is smaller by a factor $c = \frac{\tau^2}{\tau^2+1}$ and the improvement of the coverage, albeit the smaller intervals, is achieved by shifting the intervals a bit towards $\theta = 0$, which has a larger probability of occurring (which is where the prior density concentrates).

By applying a prior, you will be able to make better estimates (the credible interval is smaller than the confidence interval, which does not use the prior information). But.... it requires that the prior/bias is correct or otherwise the biased predictions with the credible interval will be more often wrong.

Luckily, it is not unreasonable to expect a priori that the coefficients will have some finite maximum boundary, and shrinking them to zero is not a bad idea (shrinking them to something else than zero might be even better and requires appropriate transformation of your data, e.g. centering beforehand). How much you shrink can be found out with cross validation or objective Bayesian estimation (to be honest I do not know so much about objective Bayesian methods, could somebody maybe confirm that regularization is actually in some sort of sense comparable to objective Bayesian estimation?).