I understand that, to improve a generative model's performance, it is useful to compare the output and the target in feature space, as described in the paper Perceptual Losses for Real-Time Style Transfer and Super-Resolution, which applies this idea to style transfer and super-resolution models:

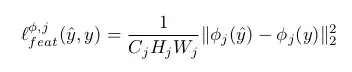

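My question is about choosing which layers of the supporting network (a VGG16 in the example above) to use in the loss calculation. Consider the feature reconstruction component of the loss from the paper:

$$\ell_{feat}^{\phi,j}(\hat{y}, y) = \frac{1}{C_j H_j W_j} \left\lVert \phi_j(\hat{y}) - \phi_j(y) \right\rVert_2^2$$

which computes the (squared, normalized) L2 loss between the target $y$ and our output $\hat{y}$ in the feature space of layer $j$, where $\phi_j$ denotes the activations of that layer, of shape $C_j \times H_j \times W_j$.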

In many examples, a set of these layers is selected and their per-layer losses are added to the overall loss function. My questions are:
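To make the setup concrete, here is a minimal sketch of what I mean by summing the feature-space loss over a chosen set of layers. It uses NumPy with random arrays standing in for real VGG16 activations; the layer names, shapes, and weights are illustrative assumptions of mine, not values taken from the paper.

```python
import numpy as np

def feature_loss(feat_out, feat_target):
    """Squared L2 distance between two (C, H, W) feature maps,
    normalized by the number of elements C * H * W."""
    assert feat_out.shape == feat_target.shape
    return float(np.sum((feat_out - feat_target) ** 2) / feat_out.size)

def perceptual_loss(feats_out, feats_target, layer_weights):
    """Weighted sum of per-layer feature losses.

    layer_weights maps a layer name to its weight; only the layers
    listed there contribute, so this dict is the 'layer selection'.
    """
    return sum(
        w * feature_loss(feats_out[name], feats_target[name])
        for name, w in layer_weights.items()
    )

# Toy stand-ins for activations (hypothetical layer names and shapes).
rng = np.random.default_rng(0)
shapes = {"relu1_2": (64, 32, 32), "relu2_2": (128, 16, 16), "relu3_3": (256, 8, 8)}
feats_target = {name: rng.standard_normal(shape) for name, shape in shapes.items()}
# Pretend the generator output differs from the target by 0.1 everywhere.
feats_out = {name: feat + 0.1 for name, feat in feats_target.items()}

# The choice I am asking about: which layers, and with which weights.
layer_weights = {"relu2_2": 1.0, "relu3_3": 0.5}
loss = perceptual_loss(feats_out, feats_target, layer_weights)
```

In a real pipeline the two feature dictionaries would come from a forward pass of the output and the target through the frozen support network, and `layer_weights` is the part I do not know how to choose.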

How do I select these layers?

Should I treat them as hyperparameters and tune them on a validation set?

Should I select them using some other criterion (for example, knowing which kind of features each layer represents)?