I am minimizing a negative log likelihood for OLS using multiple predictors (the X matrix is generated using a D-optimal design).The standard errors should be the square root of the diagional elements from the inverse of the Hessian. See for example this post or this explanation.

To check my implementation, I performed a simple regression and compared the results with those from an OLS fit using statsmodels

To my surprise the standard errors calculated using MLE are consistently (about 2 times) lower compared to the standard errors determined by OLS for all predictors although the predicted values are the same. I thought for linear regression, OLS and MLE should be equivalent.

Do you have any ideas why this could be the case?

I am using a truncated Newton Algorithm (scipy.minimize) for optimization with bounds on the standard deviation. I thought that maybe because I am summing the negative log likelihoods and returning a scalar value to the optimizer might yield an average likelihood rather than the likelihood for each individual sample.

Might be other approaches to calculate confidence intervals more appropriate? Maybe something like an F test? E.g. holding all other parameters constant and just varying one and check how much the parameter can be varied until the change in y cannot be explained by noise at the given confidence level? Or bootstrapping as last resort?

EDIT:

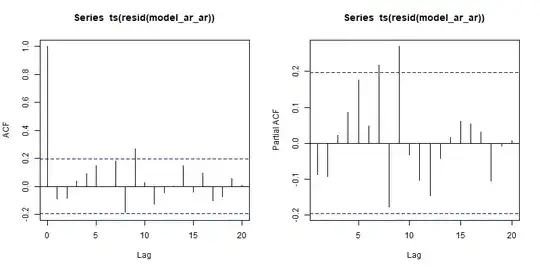

It seems, that the deviation between CIs estimated via the Hessian for MLE and CIs for OLS is dependend on the number of samples: