I know that I can use doc2Wec and other resources to get sentence vectors. But I am very curious to generate sentence vectors using Word2Vec.

I read lot of materials and found that Averaging the embeddings is the baseline architecture but it is not clear on what axis we have to perform the averaging. Even though 'Averaging Word Vectors' clearly mentions that I have to average out the embeddings of every vector i feel this will have disadvantage that my sentence vectors will be of unequal lengths. Consider below example.

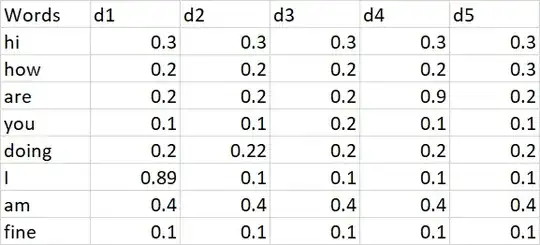

Here every word is represented in 5 dimensions. Now lets try to generate sentence vector for the sentence

'how are you' and 'fine'

My 'how are you' sentence will be of shape 1 row and 3 columns(3 words in this sentence)

'fine' sentnece will be of shape 1 row and 1 column..

Now we can see that both the sentences are of unequal length.. So I will have to append 0s to shorter sentence to match the length. This will be adding redundancy to my data. So what are the best ways to get the vectors of equal length without using doc2vec and avoiding zero padding.