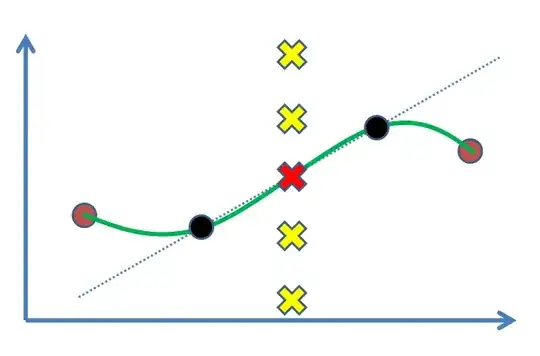

For a logistic regression classifier, I create a ROC curve by varying the threshold on the output probability.

Question: can I create an additional ROC curve with a 5% rejection rate based on the classification probability, by rejecting the 5% of samples closest to the threshold? This means that every point on the ROC curve will be computed from a different set of non-rejected samples. If yes, where can I find a reference paper about it? If not, what is the proper procedure, and where can I read about it?
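To make the procedure concrete, here is a minimal sketch of how I compute it. It assumes numpy, binary labels 0/1, `p` as the predicted probability of class 1, and the prediction rule `p >= t`. The function name `roc_with_rejection` and the `reject_frac` parameter are my own (hypothetical), not from any library. For each threshold `t`, the `reject_frac` fraction of samples with the smallest `|p - t|` is dropped before TPR and FPR are computed. I am not presenting this as a standard or referenced method.

```python
import numpy as np


def roc_with_rejection(y_true, p, thresholds, reject_frac=0.05):
    """Return (fpr, tpr) arrays with one point per threshold.

    For each threshold t, the reject_frac fraction of samples whose
    probability is closest to t is discarded, so every ROC point is
    computed on a different subset of the data.
    """
    y_true = np.asarray(y_true, dtype=bool)
    p = np.asarray(p, dtype=float)
    n_reject = int(np.floor(reject_frac * len(p)))
    fpr, tpr = [], []
    for t in thresholds:
        # Sort samples by distance to the current threshold; drop the closest ones.
        order = np.argsort(np.abs(p - t), kind="stable")
        keep = order[n_reject:]
        y_k, pred_k = y_true[keep], p[keep] >= t
        pos, neg = y_k.sum(), (~y_k).sum()
        # NaN if a class is missing from the kept subset.
        tpr.append((pred_k & y_k).sum() / pos if pos else np.nan)
        fpr.append((pred_k & ~y_k).sum() / neg if neg else np.nan)
    return np.array(fpr), np.array(tpr)


# Example on synthetic scores: the ordinary ROC (no rejection) vs. 5% rejection.
rng = np.random.default_rng(0)
y = rng.integers(0, 2, size=1000)
p = np.clip(0.5 + 0.3 * (y - 0.5) + rng.normal(0, 0.2, size=1000), 0, 1)
thresholds = np.linspace(0, 1, 101)
fpr0, tpr0 = roc_with_rejection(y, p, thresholds, reject_frac=0.0)
fpr5, tpr5 = roc_with_rejection(y, p, thresholds, reject_frac=0.05)
```

With `reject_frac=0.0` this reduces to the usual threshold-sweep ROC, so the two curves can be plotted on the same axes.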

Recently, someone suggested that instead of rejecting 5% of the test set, I should reject using a threshold derived from the training set, chosen so that 5% of the training samples are rejected. I am not sure whether the difference is important, but if it is a standard procedure, I would be happy to find a reference for it.
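My reading of that suggestion, as a minimal sketch (again assuming numpy, labels 0/1 and the rule `p >= t`). The function names and the "band half-width" formulation are my own interpretation, not an established method. For each threshold `t`, the half-width `d_t` is the `reject_frac` quantile of `|p_train - t|` on the training set. Test samples with `|p_test - t| <= d_t` are then rejected, so the test rejection rate is only approximately 5% and is returned alongside the ROC points.

```python
import numpy as np


def rejection_half_widths(p_train, thresholds, reject_frac=0.05):
    """For each threshold t, the half-width d_t of the band around t that
    contains about reject_frac of the *training* probabilities."""
    p_train = np.asarray(p_train, dtype=float)
    return np.array([np.quantile(np.abs(p_train - t), reject_frac) for t in thresholds])


def roc_with_fixed_bands(y_test, p_test, thresholds, half_widths):
    """ROC points on the test set after rejecting samples inside each
    training-derived band; also returns the realized test rejection rate."""
    y_test = np.asarray(y_test, dtype=bool)
    p_test = np.asarray(p_test, dtype=float)
    fpr, tpr, rejected = [], [], []
    for t, d in zip(thresholds, half_widths):
        keep = np.abs(p_test - t) > d  # reject everything within d of the threshold
        y_k, pred_k = y_test[keep], p_test[keep] >= t
        pos, neg = y_k.sum(), (~y_k).sum()
        tpr.append((pred_k & y_k).sum() / pos if pos else np.nan)
        fpr.append((pred_k & ~y_k).sum() / neg if neg else np.nan)
        rejected.append(1.0 - keep.mean())
    return np.array(fpr), np.array(tpr), np.array(rejected)
```

The practical difference is that the band is fixed before the test data are seen, so the rejection rule does not depend on the test distribution. The price is that the realized rejection rate on the test set varies from threshold to threshold.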