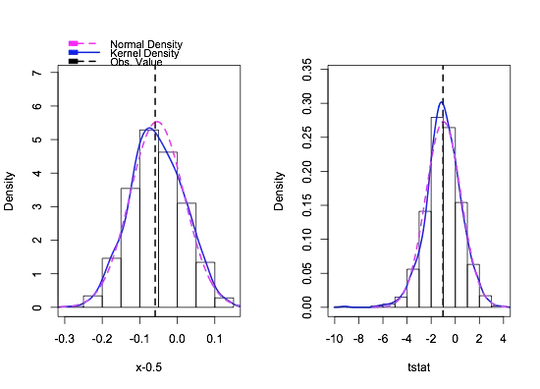

If a bootstrap confidence interval (CI) can be interpreted like a standard CI (e.g., as the range of null-hypothesis values that cannot be rejected), as also stated in this post, is it OK to derive a p-value from a bootstrap distribution as follows? Let the null hypothesis be $H_0: \theta=\theta_0$ and let a bootstrap $(1-\alpha)\times 100\%$ CI be $(\theta_L, \theta_U)_{\alpha}$. The p-value is then the value of $\alpha$ at which $\theta_U=\theta_0$ or $\theta_L=\theta_0$.
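For equal-tailed intervals this inversion has a closed form. As a sketch, write $\hat F^*$ for the empirical CDF of the bootstrap estimates and $\hat\theta$ for the original estimate (notation introduced here for illustration), set an endpoint equal to $\theta_0$, and solve for $\alpha$. For the percentile CI:

$$
(\theta_L, \theta_U)_\alpha = \left(\hat F^{*-1}(\alpha/2),\ \hat F^{*-1}(1-\alpha/2)\right)
\;\Rightarrow\;
p = 2\min\left\{\hat F^*(\theta_0),\ 1-\hat F^*(\theta_0)\right\},
$$

and for the basic CI, whose endpoints are reflected around $\hat\theta$:

$$
(\theta_L, \theta_U)_\alpha = \left(2\hat\theta-\hat F^{*-1}(1-\alpha/2),\ 2\hat\theta-\hat F^{*-1}(\alpha/2)\right)
\;\Rightarrow\;
p = 2\min\left\{\hat F^*(2\hat\theta-\theta_0),\ 1-\hat F^*(2\hat\theta-\theta_0)\right\}.
$$

Only one of the two endpoints can reach $\theta_0$ for some $\alpha \le 1$, which is why the smaller tail fraction is taken.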

This post also describes examples of converting CIs to p-values, but I do not completely understand...

The following code derives a p-value from the percentile CI of the slope parameter of a linear regression model, and the same approach can be applied to other types of CIs. If this is not OK, what is the appropriate way to compute a p-value, e.g., one associated with the percentile CI? If the code below is OK, can the procedure be described as a bootstrap hypothesis test (e.g., in a paper)?

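```r
# hypothetical data
set.seed(1)  # for reproducibility
x <- runif(20, 10, 50)
y <- rnorm(length(x), 1 + 0.5 * x, 2)
model <- lm(y ~ x)
plot(x, y)
abline(model)
params <- coef(model)

# case-resampling bootstrap of the slope
nboot <- 2000
eboot <- rep(NA, nboot)
for (i in 1:nboot) {
  booti <- sample(seq_along(x), replace = TRUE)
  eboot[i] <- coef(lm(y[booti] ~ x[booti]))[2]
}

# 95% CI for the slope
quantile(eboot, c(0.025, 0.975))                  # percentile CI
params[2] * 2 - quantile(eboot, c(0.975, 0.025))  # basic CI

# null hypothesis
null <- 0.5

# signed distance between the null value and the nearer endpoint of the
# (1 - alpha) percentile CI; its root in alpha is the p-value
get.p <- function(alpha, null, boot = eboot) {
  if (null >= quantile(boot, 0.5)) return(null - quantile(boot, 1 - alpha / 2))
  null - quantile(boot, alpha / 2)
}

# alpha.grid <- seq(0, 1, length = 100)
# plot(alpha.grid, get.p(alpha.grid, null), type = "l")

# p-value; uniroot() stops with an error if null lies outside range(eboot)
(p <- uniroot(get.p, interval = c(0, 1), null = null)$root)

# abline(v = p, h = 0)
```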
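A hedged sketch of a shortcut: for an equal-tailed percentile CI, the root found by `uniroot()` is essentially twice the smaller fraction of bootstrap estimates lying on either side of the null value, and for the basic CI the same calculation applies to the reflected estimates `2 * estimate - boot`. The function names `boot_p_percentile` and `boot_p_basic` are hypothetical, and the data are re-simulated so the block runs on its own.

```r
# p-value from inverting an equal-tailed percentile CI:
# twice the smaller tail fraction of bootstrap estimates, capped at 1
boot_p_percentile <- function(boot, null) {
  lower <- mean(boot <= null)  # empirical CDF of the bootstrap estimates at null
  upper <- mean(boot >= null)
  min(1, 2 * min(lower, upper))
}

# p-value from inverting the basic CI: the basic CI is the percentile CI of
# the reflected estimates 2 * estimate - boot, so reuse the function above
boot_p_basic <- function(boot, estimate, null) {
  boot_p_percentile(2 * estimate - boot, null)
}

# same kind of simulated example as in the question
set.seed(1)
x <- runif(20, 10, 50)
y <- rnorm(length(x), 1 + 0.5 * x, 2)
slope <- coef(lm(y ~ x))[[2]]
eboot <- replicate(2000, {
  booti <- sample(seq_along(x), replace = TRUE)
  coef(lm(y[booti] ~ x[booti]))[[2]]
})

(p_perc <- boot_p_percentile(eboot, null = 0.5))
(p_basic <- boot_p_basic(eboot, estimate = slope, null = 0.5))
```

Because `quantile()` interpolates between order statistics, this can differ from the `uniroot()` result by roughly 1/`nboot`; unlike `uniroot()`, it returns 0 rather than an error when the null value lies outside the range of the bootstrap estimates.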