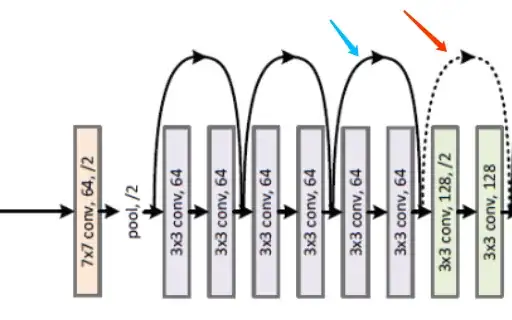

It's best to understand the model in terms of individual "residual" blocks that stack up to form the entire architecture. As you have probably noticed, the dotted connections appear only at the few places where the depth (meaning the number of channels, not the spatial dimensions) increases. In this case, the first dotted arrow of the network marks where the depth is increased from 64 to 128 channels by a 1x1 convolution.

Consider equation (2) of the ResNet paper:

$$
y = F(\textbf{x}, \{W_i\}) + W_s \textbf{x}
$$
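To make equation (2) concrete, here is a minimal NumPy sketch for the first dotted shortcut. It assumes a channel-first `(C, H, W)` layout with no batch axis and a 64-channel 56×56 input (the conv2_x output size in the paper's ImageNet models). $W_s$ is realised as a 1x1 convolution with stride 2, which for each output pixel is just a matrix multiply over that pixel's channel vector. The helper names (`conv1x1`, `residual_block_with_projection`, `residual_fn`) are hypothetical, and `residual_fn` is only a stand-in for $F$: the real $F$ in ResNet is a stack of 3x3 convolutions with batch normalization and ReLU, which this sketch does not reproduce.

```python
import numpy as np

def conv1x1(x, w, stride=1):
    """1x1 convolution on a (C_in, H, W) array.

    w has shape (C_out, C_in): every output pixel is w @ (input channel vector).
    A stride > 1 keeps every `stride`-th row and column.
    """
    x = x[:, ::stride, ::stride]             # spatial subsampling by the stride
    return np.einsum("oc,chw->ohw", w, x)    # per-pixel linear mix of channels

def residual_block_with_projection(x, residual_fn, w_s, stride=2):
    """y = F(x, {W_i}) + W_s x, with W_s applied as a strided 1x1 conv."""
    return residual_fn(x) + conv1x1(x, w_s, stride=stride)

rng = np.random.default_rng(0)
x = rng.standard_normal((64, 56, 56))        # 64 channels on a 56x56 map
w_s = rng.standard_normal((128, 64))         # projection W_s: 64 -> 128 channels

# Hypothetical stand-in for F: anything mapping (64, 56, 56) -> (128, 28, 28).
w_f = rng.standard_normal((128, 64))
def residual_fn(x):
    return np.maximum(conv1x1(x, w_f, stride=2), 0.0)

y = residual_block_with_projection(x, residual_fn, w_s)
print(y.shape)    # (128, 28, 28)
print(w_s.size)   # 8192 extra weights in the shortcut
```

The 8192 weights of `w_s` (64 × 128) are the price the projection pays for matching the dimensions.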

This is used when the dimensions of the residual mapping $F$ and the identity shortcut $\textbf{x}$ do not match. The mismatch is resolved by introducing a linear projection $W_s$. In particular, as described on page 4 of the ResNet paper, the projection approach means performing 1x1 convolutions, which on their own keep the spatial dimensions the same while increasing or decreasing the number of channels (thereby changing the depth). On the dotted shortcuts the paper additionally applies them with a stride of 2, because those are also the points where the feature map size is halved. See more about 1x1 convolutions and their use here.

However, another way of matching the dimensions, without adding any parameters to the skip connections, is the padding approach. Here, the input is first downsampled by a 1x1 pooling with stride 2 and then padded with zero channels to increase the depth.
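The padding approach can be sketched the same way. This minimal NumPy version again assumes a `(C, H, W)` layout, and `padding_shortcut` is a hypothetical helper name. It keeps every second pixel (which is what a 1x1 pooling with stride 2 amounts to) and then appends all-zero channels. Which side of the channel axis receives the zeros is an implementation choice; here they are appended after the existing channels.

```python
import numpy as np

def padding_shortcut(x, out_channels, stride=2):
    """Parameter-free shortcut: subsample spatially, then zero-pad channels.

    x has shape (C_in, H, W); taking every `stride`-th pixel is what a
    1x1 pooling with that stride does.
    """
    x = x[:, ::stride, ::stride]                      # 1x1 "pooling" with stride 2
    extra = out_channels - x.shape[0]                 # number of zero channels to add
    return np.pad(x, ((0, extra), (0, 0), (0, 0)))    # constant (zero) pad, channel axis only

x = np.arange(64 * 4 * 4, dtype=float).reshape(64, 4, 4)
s = padding_shortcut(x, out_channels=128)
print(s.shape)               # (128, 2, 2)
print(np.all(s[64:] == 0))   # True: the added channels are all zeros
```

Because this shortcut has no weights, the first 64 output channels are an exact copy of the subsampled input and the remaining 64 are zeros, so only $F$ contributes to the new channels of the sum.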

Here is precisely what the paper says:

> When the dimensions increase (dotted line shortcuts in Fig. 3), we consider two options: (A) The shortcut still performs identity mapping, with extra zero entries padded for increasing dimensions. This option introduces no extra parameter; (B) The projection shortcut in Eqn.(2) is used to match dimensions (done by 1×1 convolutions).
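The "no extra parameter" remark is easy to quantify. Assuming the ResNet-34 layout, where the dotted shortcuts sit at the three stage transitions 64→128, 128→256 and 256→512, and counting only bias-free 1x1 convolution weights (no batch-normalization parameters), the extra cost of option (B) over option (A) is sketched below; `projection_weights` is a hypothetical helper name.

```python
# Dotted shortcuts in ResNet-34: the stage transitions where the depth doubles.
transitions = [(64, 128), (128, 256), (256, 512)]

def projection_weights(c_in, c_out):
    """Weight count of a bias-free 1x1 conv mapping c_in -> c_out channels."""
    return c_in * c_out

option_a = 0  # identity + zero padding adds no parameters
option_b = sum(projection_weights(c_in, c_out) for c_in, c_out in transitions)
print(option_a, option_b)  # 0 172032
```

Most of that cost comes from the last transition (256 × 512 = 131072 weights).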

Here are some more references, if needed: a Reddit thread and another SE question along similar lines.