As I understand Shannon's notion of information, if an outcome of a random variable (RV) has probability close to 1, then the RV carries little information: we are already nearly certain of the outcome, so observing it tells us little.

Contrast this with Fisher information, which is the inverse of the covariance matrix. By that definition, if the variance is high (meaning the uncertainty is high) we have little information, and when the uncertainty is low (probability of the RV close to 1) the information is high.

The two notions of information seem to conflict. Have I misunderstood something?

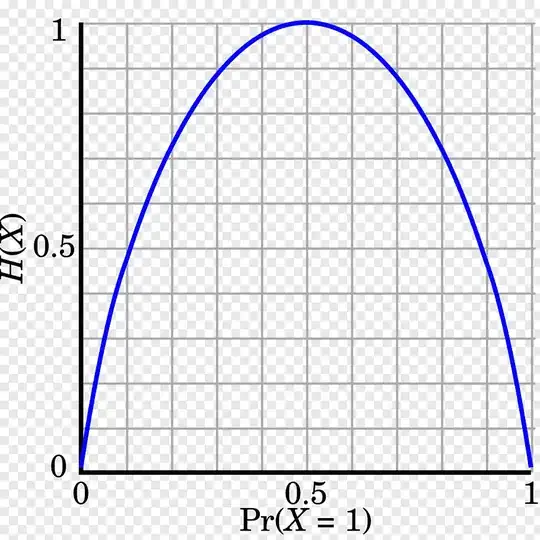

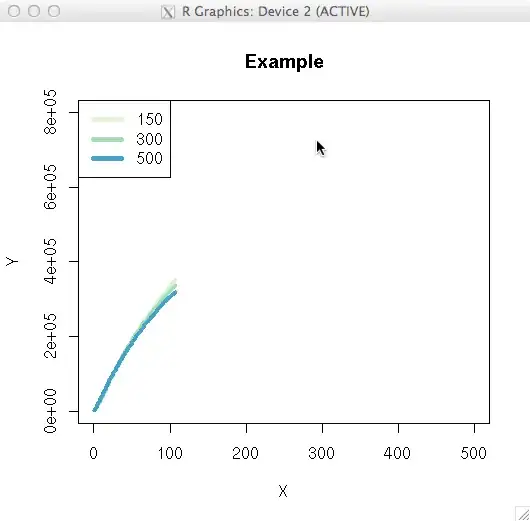

The plot from one of the references provided by @doubllle shows the Shannon entropy of the coin-flip model (a Bernoulli distribution parametrized by $\theta$) versus the Fisher information for the same model.
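
To make the comparison concrete, here is a minimal Python sketch that tabulates both quantities. It assumes the standard closed forms for a single Bernoulli($\theta$) observation, $H(\theta) = -\theta\log_2\theta - (1-\theta)\log_2(1-\theta)$ and $I(\theta) = 1/(\theta(1-\theta))$. The function names are my own and are not taken from the reference.

```python
import math


def bernoulli_entropy(theta):
    """Shannon entropy (in bits) of one Bernoulli(theta) coin flip."""
    if not 0.0 <= theta <= 1.0:
        raise ValueError("theta must lie in [0, 1]")
    if theta in (0.0, 1.0):
        # By convention 0 * log(0) = 0, so a deterministic coin has zero entropy.
        return 0.0
    return -(theta * math.log2(theta) + (1.0 - theta) * math.log2(1.0 - theta))


def bernoulli_fisher_information(theta):
    """Fisher information about theta from one Bernoulli(theta) observation."""
    if not 0.0 < theta < 1.0:
        # The closed form diverges at the endpoints, so they are excluded here.
        raise ValueError("theta must lie strictly between 0 and 1")
    return 1.0 / (theta * (1.0 - theta))


if __name__ == "__main__":
    for theta in (0.1, 0.3, 0.5, 0.7, 0.9):
        h = bernoulli_entropy(theta)
        i = bernoulli_fisher_information(theta)
        print(f"theta={theta:.1f}  entropy={h:.4f} bits  fisher={i:.4f}")
```

Under these formulas the entropy is largest at $\theta = 0.5$, which is exactly where the Fisher information is smallest, and the two curves move in opposite directions as $\theta$ approaches 0 or 1.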