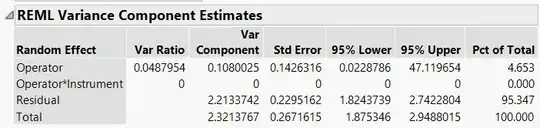

Consider a crossed design with 3 operators and 4 instruments. Interest is in the instrument effect.

Could you explain to me why the standard errors of the fixed effects differ between the two seemingly equivalent models below?

Model 1

- fixed effect: instrument

- random effects: operator, operator*instrument

Model 2

- fixed effect: instrument

- random effect: operator*instrument
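To make the comparison concrete, the two models can be written out as below. This is only a sketch in notation I am assuming (none of these symbols come from the software output): $i$ indexes operator, $j$ indexes instrument, and $k$ indexes the observations within an operator-by-instrument cell, with parts and repeats pooled because neither model includes Part.

```latex
\begin{align*}
\text{Model 1:}\quad
  y_{ijk} &= \mu + \alpha_j + o_i + (o\alpha)_{ij} + \varepsilon_{ijk},
  & o_i &\sim N(0, \sigma_o^2),\;
    (o\alpha)_{ij} \sim N(0, \sigma_{o\alpha}^2),\;
    \varepsilon_{ijk} \sim N(0, \sigma^2) \\[4pt]
\text{Model 2:}\quad
  y_{ijk} &= \mu + \alpha_j + c_{ij} + \varepsilon_{ijk},
  & c_{ij} &\sim N(0, \sigma_c^2),\;
    \varepsilon_{ijk} \sim N(0, \sigma^2)
\end{align*}

% Implied covariance between two observations from the same operator
% but different instruments (j \neq j'):
\begin{align*}
\text{Model 1:}\quad \operatorname{Cov}(y_{ijk},\, y_{ij'k'}) &= \sigma_o^2 \\
\text{Model 2:}\quad \operatorname{Cov}(y_{ijk},\, y_{ij'k'}) &= 0
\end{align*}
```

Written this way, the two models give the same covariance structure only when $\sigma_o^2 = 0$, which may be relevant to the difference I am seeing.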

Here is the data:

Operator Part Instrument new Y

Janet 1 1 9.110079

Janet 1 2 8.142277

Janet 1 3 11.26354

Janet 1 4 9.919439

Janet 2 1 11.74011

Janet 2 2 8.377658

Janet 2 3 9.942735

Janet 2 4 10.40425

Janet 3 1 10.20466

Janet 3 2 8.379429

Janet 3 3 12.22024

Janet 3 4 12.61237

Janet 4 1 8.807384

Janet 4 2 8.030572

Janet 4 3 13.08309

Janet 4 4 10.01584

Janet 5 1 8.099196

Janet 5 2 8.364157

Janet 5 3 13.41016

Janet 5 4 14.76918

Janet 6 1 8.106056

Janet 6 2 8.10354

Janet 6 3 8.482492

Janet 6 4 8.154287

Janet 7 1 7.111584

Janet 7 2 7.903323

Janet 7 3 6.939069

Janet 7 4 5.344401

Janet 8 1 7.485183

Janet 8 2 7.817825

Janet 8 3 6.845474

Janet 8 4 6.899052

Bob 1 1 8.23373

Bob 1 2 8.057511

Bob 1 3 9.661005

Bob 1 4 8.094992

Bob 2 1 8.087004

Bob 2 2 8.088673

Bob 2 3 8.220318

Bob 2 4 9.147579

Bob 3 1 8.864142

Bob 3 2 8.061816

Bob 3 3 8.925117

Bob 3 4 8.976143

Bob 4 1 8.056059

Bob 4 2 8.084911

Bob 4 3 8.348538

Bob 4 4 8.548607

Bob 5 1 8.069039

Bob 5 2 8.007888

Bob 5 3 9.626642

Bob 5 4 9.733307

Bob 6 1 8.024802

Bob 6 2 8.005744

Bob 6 3 8.434947

Bob 6 4 8.453447

Bob 7 1 7.999767

Bob 7 2 7.984759

Bob 7 3 7.365404

Bob 7 4 7.656814

Bob 8 1 7.882578

Bob 8 2 7.758508

Bob 8 3 4.55524

Bob 8 4 7.540994

Frank 1 1 9.481814

Frank 1 2 8.193099

Frank 1 3 8.398606

Frank 1 4 11.7037

Frank 2 1 9.949564

Frank 2 2 8.107129

Frank 2 3 9.163364

Frank 2 4 10.6489

Frank 3 1 9.327477

Frank 3 2 8.012667

Frank 3 3 8.249733

Frank 3 4 8.265833

Frank 4 1 9.037129

Frank 4 2 8.011473

Frank 4 3 9.851276

Frank 4 4 9.407426

Frank 5 1 9.109919

Frank 5 2 8.015916

Frank 5 3 9.295068

Frank 5 4 8.891632

Frank 6 1 8.259335

Frank 6 2 8.0005

Frank 6 3 8.595918

Frank 6 4 8.371596

Frank 7 1 7.938315

Frank 7 2 7.985911

Frank 7 3 7.619531

Frank 7 4 7.396689

Frank 8 1 7.448111

Frank 8 2 7.781902

Frank 8 3 5.067086

Frank 8 4 5.147675

Janet 1 1 8.421558

Janet 1 2 8.049732

Janet 1 3 8.737006

Janet 1 4 9.225913

Janet 2 1 9.93779

Janet 2 2 8.790311

Janet 2 3 10.30329

Janet 2 4 12.84334

Janet 3 1 9.853942

Janet 3 2 8.112191

Janet 3 3 8.553654

Janet 3 4 14.68811

Janet 4 1 8.088195

Janet 4 2 8.035893

Janet 4 3 8.689999

Janet 4 4 8.565889

Janet 5 1 8.90175

Janet 5 2 8.082496

Janet 5 3 12.3517

Janet 5 4 9.292084

Janet 6 1 8.042006

Janet 6 2 8.031552

Janet 6 3 8.23479

Janet 6 4 8.092882

Janet 7 1 5.905284

Janet 7 2 7.99629

Janet 7 3 7.978668

Janet 7 4 7.983601

Janet 8 1 7.768969

Janet 8 2 7.910555

Janet 8 3 5.9105

Janet 8 4 6.014394

Bob 1 1 8.680909

Bob 1 2 8.090483

Bob 1 3 8.846608

Bob 1 4 8.216097

Bob 2 1 9.23454

Bob 2 2 8.072461

Bob 2 3 9.365295

Bob 2 4 8.66944

Bob 3 1 8.700653

Bob 3 2 8.021997

Bob 3 3 10.73211

Bob 3 4 8.270761

Bob 4 1 8.621898

Bob 4 2 8.221656

Bob 4 3 8.873718

Bob 4 4 10.14723

Bob 5 1 8.612726

Bob 5 2 8.013137

Bob 5 3 8.854139

Bob 5 4 10.05516

Bob 6 1 8.238818

Bob 6 2 8.002169

Bob 6 3 8.283516

Bob 6 4 8.060694

Bob 7 1 7.811573

Bob 7 2 7.972366

Bob 7 3 7.550927

Bob 7 4 7.347237

Bob 8 1 7.552504

Bob 8 2 7.821391

Bob 8 3 6.153354

Bob 8 4 5.286279

Frank 1 1 8.521943

Frank 1 2 8.054066

Frank 1 3 8.235211

Frank 1 4 8.187873

Frank 2 1 9.711441

Frank 2 2 8.05149

Frank 2 3 10.82414

Frank 2 4 10.43218

Frank 3 1 11.1396

Frank 3 2 8.341912

Frank 3 3 8.197568

Frank 3 4 10.77953

Frank 4 1 9.186246

Frank 4 2 8.231934

Frank 4 3 9.107861

Frank 4 4 9.336874

Frank 5 1 8.156753

Frank 5 2 8.033916

Frank 5 3 11.35433

Frank 5 4 9.261594

Frank 6 1 8.309988

Frank 6 2 8.03288

Frank 6 3 8.337066

Frank 6 4 8.167594

Frank 7 1 7.240201

Frank 7 2 7.947292

Frank 7 3 6.137034

Frank 7 4 7.598853

Frank 8 1 5.868138

Frank 8 2 7.896514

Frank 8 3 5.148703

Frank 8 4 6.364151