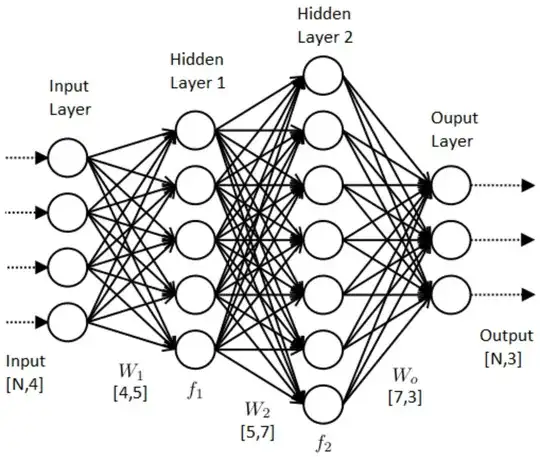

You often see diagrams like this one.

This makes me wonder what the definition of a layer actually is, because the way it is drawn leaves it very vague.

For example, when people say a fully connected layer, what do they mean by a layer?

From the diagram, it seems that:

The input layer is the set of components of your input vector, together with the weights going to the hidden layer. That is, if the input vector is $x = (x_1, \ldots, x_n)$, then the input layer is the set $$L_i = \{x_1, \ldots, x_n\} \cup \{w_{ij}\},$$ where $w_{ij}$ are the weights associated with the input layer.

The hidden layer, like the output layer, consists of a set of activation functions $a_i$ that transform the input $x$ into a set of outputs $o_i$, along with the weights going into the next layer, i.e., $$L_H = \{a_i\} \cup \{o_i\} \cup \{w_{ij}\}.$$
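To make the grouping I have in mind concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the names `forward`, `input_layer`, and `hidden_layer` are hypothetical, the weights are made-up numbers, a sigmoid stands in for the activation $a_i$, and bias terms are left out because the diagram does not show them.

```python
import math


def sigmoid(z):
    """Logistic activation, used here only as an example choice for a_i."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, weights, activation):
    """Compute o_i = a(sum_j w_ij * x_j) for every unit i of the next layer.

    weights[i][j] is the weight from input component j to unit i.
    """
    return [
        activation(sum(w_ij * x_j for w_ij, x_j in zip(row, x)))
        for row in weights
    ]


# "Input layer" under my interpretation: the components x_j plus the
# weights w_ij leaving them (illustrative values, 2 inputs -> 3 hidden units).
input_layer = {
    "values": [1.0, 2.0],
    "weights": [[0.5, -0.25], [0.0, 0.0], [1.0, 1.0]],
}

# "Hidden layer" under my interpretation: the activation a and the outputs o_i
# (it would also hold the weights going into the next layer, omitted here).
hidden_outputs = forward(input_layer["values"], input_layer["weights"], sigmoid)
hidden_layer = {"activation": sigmoid, "outputs": hidden_outputs}
```

In this sketch the weights are stored with the layer they leave rather than the layer they enter, which is exactly the part of the grouping I am unsure about.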

Is my interpretation correct?