Simpler case: When the probability for the occurrence of the event is independent of the jackpot

The geometric distribution applies to the case where the probability of winning the jackpot is constant, e.g. it remains 10% for each draw.

- The probability that the jackpot is won in the first draw is 10%.

- The probability that the jackpot is won in the second draw is the probability of missing it in the first draw (90%) and then winning it in the second draw (10%), which is computed as $0.9 \times 0.1$.

- The probability that the jackpot is won in the third draw is the probability of missing it in the first and second draws (two times 90%) and then winning it in the third draw (10%), which is computed as $0.9 \times 0.9 \times 0.1$.

- etcetera

When $p$ is the (constant) probability that the jackpot is won in any draw, then the general formula is:

$$\text{P(win in the $k$-th draw)} =
\overset{{\substack{\llap{\text{probability to win the $k$-th}}\rlap{\text{ draw, if we get there}} }}}{\overbrace{p}^{}}
\times \underset{{\substack{\llap{\text{probability to get}}\rlap{\text{ to the $k$-th draw}} \\ \llap{\text{i.e. probability of no }} \rlap{\text{win in the first $k-1$ draws}}}}}{\underbrace{(1-p)^{k-1}}_{}}$$
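As a quick numerical check of this formula, here is a minimal R sketch using the 10% example. The variable names are only illustrative; `dgeom` is R's built-in geometric density, which counts the failures before the first win (hence the `k - 1`).

```r
p <- 0.10                              # constant win probability per draw (example value)
k <- 1:5                               # draw numbers to evaluate

manual  <- p * (1 - p)^(k - 1)         # formula: win in draw k after k-1 misses
builtin <- dgeom(k - 1, prob = p)      # same thing via R's geometric distribution

print(cbind(k, manual, builtin))
print(all.equal(manual, builtin))      # the two computations should agree
```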

When the probability for the occurrence of the event is not independent

For a non-constant probability that the jackpot is won, say $p_k = 0.05 + 0.05 k$, you get a formula with a product over the preceding draws:

$$\text{P(win in the $k$-th draw)} = p_k \prod_{i=1}^{k-1} (1-p_i) $$

If we write this product term explicitly:

$$ \begin{array}{lclcl}
\text{P(k=1)} &=& p_1 &=& 0.1 \\
\text{P(k=2)} &=& p_2 \times (1-p_1) &=& 0.15 \times 0.9 \\
\text{P(k=3)} &=& p_3 \times (1-p_2) \times (1-p_1) &=& 0.2 \times 0.85 \times 0.9 \\
\text{P(k=4)} &=& p_4 \times (1-p_3) \times (1-p_2) \times (1-p_1) &=& 0.25 \times 0.8 \times 0.85 \times 0.9 \\
\text{P(k=n)} &=& p_n \prod_{i=1}^{n-1} (1-p_i)
\end{array}$$
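Multiplying out the first four products for the example $p_k = 0.05 + 0.05 k$ gives the following values (plain arithmetic on the numbers above):

$$ \begin{array}{lclcl}
\text{P(k=1)} &=& 0.1 &=& 0.1 \\
\text{P(k=2)} &=& 0.15 \times 0.9 &=& 0.135 \\
\text{P(k=3)} &=& 0.2 \times 0.85 \times 0.9 &=& 0.153 \\
\text{P(k=4)} &=& 0.25 \times 0.8 \times 0.85 \times 0.9 &=& 0.153
\end{array}$$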

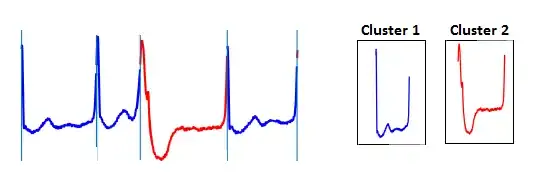

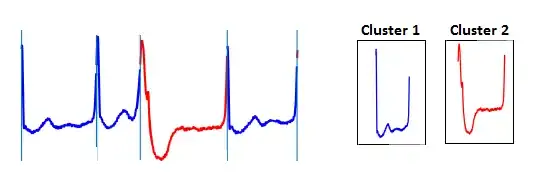

A graph of the computation for your specific example is plotted above.

R-code to replicate this:

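    r <- 20
    b <- 2/r                 # win probability in the first draw (0.10)
    a <- 1/r                 # increase of the win probability per draw (0.05)
    n <- r - b*r             # number of increments until p_k reaches 1
    pk <- b + a*c(0:n)       # p_k for k = 1, ..., n+1
    qk <- c(1, head(cumprod((1 - pk)), -1))   # probability of no win before draw k

    # plot P(K = k) = p_k * q_k against the draw number
    plot(1:(n+1), pk * qk, ylim = c(0, 0.17),
         xlab = "draw number k",
         ylab = "probability")
    # label the first 12 points with percentages (3rd and 4th label nudged sideways)
    text(1:12 + c(rep(0,2), -0.5, 0.5, rep(0,8)), (pk * qk)[1:12],
         paste0(round(10000*pk * qk)[1:12]/100, " %"), pos = 3, cex = 0.5)

    sum(pk*qk)               # check: the probabilities sum to 1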

Expectation value

For the expectation you sum over all those probabilities multiplied by $k$: $$E(K) = \sum_k k P(K=k)$$

An elegant way to compute the expectation value would be to use the cumulative distribution function and sum (integrate) over its complement $1 - P(K \leq k)$.

We have $$P(K\leq k) = 1- \prod_{1\leq i \leq k} (1-p_i)$$

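and for a range $1 \leq k \leq n$, where the sum starts at $k=0$ (for which the empty product equals 1, i.e. $P(K \leq 0) = 0$)

$$E(K) = \sum_{k=0}^{n-1} \left(1-P(K\leq k)\right) = \sum_{k=0}^{n-1} \prod_{1\leq i \leq k} (1-p_i) $$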
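The two routes to the expectation can be compared numerically. The following is a minimal R sketch, assuming the linear example $p_k = 0.05 + 0.05k$ (so the last possible draw is $k = 19$ with $p_{19} = 1$). The variable names are illustrative, and the tail sum starts at $k = 0$, where $P(K > 0) = 1$.

```r
a <- 0.05                               # assumed increase per draw (example value)
b <- 0.10                               # assumed win probability in draw 1 (example value)
pk <- b + a * (0:18)                    # p_1, ..., p_n; the last one equals 1
n  <- length(pk)

surv_before <- c(1, head(cumprod(1 - pk), -1))  # P(K > k) for k = 0, ..., n-1
pmf <- pk * surv_before                         # P(K = k) for k = 1, ..., n

E_direct <- sum(seq_len(n) * pmf)       # sum of k * P(K = k)
E_tail   <- sum(surv_before)            # sum of P(K > k) over k = 0, ..., n-1

print(c(direct = E_direct, tail = E_tail))
```

Both numbers should coincide up to floating-point error, since the tail sum is just a rearrangement of the direct sum.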

If your real-life example has a very large range and the summation is not easy to perform, then we can use an approximation. For instance, say we have $p_k = b + a\cdot (k-1)$ with $\frac{a}{1-b}$ very small (other scenarios for $p_k$ are possible as well and this method can be generalized*).

With some handwaving we could say that

$$ \frac{\partial P(K > k)}{\partial k} \approx - P(K > k) p_k $$

or

$$ \frac{\partial \log(P(K > k)) }{\partial k} \approx -p_k $$

and integrating this, then exponentiating, and taking the complement gives:

$$P(K \leq k) = 1 - e^{-0.5 a k^2 - b k + c}$$

where $c$ is an integration constant that can be set to make $P(K \leq 1) = b$, which gives $c = a/2 + b + \log(1-b)$. We can relate this to a $\chi$ distribution with 2 degrees of freedom

$$P(X \leq x) = 1 - e^{-x^2/2}$$

It would be possible to make the conversion to that $\chi$ distributed variable by scaling and shifting but it is easier to compute $P(X \leq x)$ and take the difference or derivative.

This is done with the code below and it provides the blue and green lines in the above figure:

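    # continues from the R code above: uses a, b, n and draws on the open plot
    k <- c(1:(n+1))
    # approximate CDF, with the integration constant chosen so that Fk[1] equals b
    Fk <- 1-exp(-0.5*a*k^2-b*k+0.5*a+b)*(1-b)
    # finer grid, shifted by half a draw
    ks <- seq(1,n+1,0.1)-0.5
    # derivative of the approximate CDF on the fine grid
    dk <- exp(-0.5*a*ks^2-b*ks+0.5*a+b)*(1-b)*(b+a*ks)
    lines(k,diff(c(0,Fk)),col=4)    # blue: differences of the approximate CDF
    lines(ks+0.5,dk,col=3)          # green: derivative, shifted back by 0.5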

* A generalization could be made roughly with something like $P(K = k) \approx p_k e^{-\sum_{i=0}^{k-1} p_i}$ where we define $p_0 = 0$. Note that this is a bit analogous to the $f(t) = s(t)e^{\int_0^t-s(t) dt}$ expression in https://stats.stackexchange.com/a/354574/164061
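To get a feeling for how rough this generalization is, the sketch below compares it with the exact product formula. It assumes the linear example $p_k = 0.05 + 0.05k$, and the variable names are illustrative. Because $e^{-p} \geq 1-p$, the exponential form can only overestimate the exact probabilities, and the gap grows as the $p_i$ get larger.

```r
a <- 0.05                               # assumed increase per draw (example value)
b <- 0.10                               # assumed win probability in draw 1 (example value)
pk <- b + a * (0:18)                    # p_1, ..., p_19

# exact: p_k times the product of (1 - p_i) over the earlier draws
exact <- pk * c(1, head(cumprod(1 - pk), -1))

# approximation: p_k times exp(-sum of earlier p_i); with p_0 = 0 the sum is 0 for k = 1
approx_pmf <- pk * exp(-c(0, head(cumsum(pk), -1)))

print(round(cbind(k = seq_along(pk), exact, approx = approx_pmf), 4))
```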