Over here and here, the leave-one-out (LOOCV) formula uses Sherman-Morrison formula in its derivation. Deriving the leave-$k$-out would require the general formula by Woodbury, as you have suspected.

Here I use subscript $k$ as the indices for the rows to be left out from the training set, $(k)$ as the whole vector or matrix without the rows from $k$, and $[k]$ for submatrix containing only rows and columns from $k$. For convenience, the following matrices are defined

$$

\begin{aligned}

A &= X^TX \\

A_{(k)} &= X_{(k)}^TX_{(k)} \\

A_k &= X_k^TX_k \\

H &= XA^{-1}X^T \\

H_{[k]} &= X_kA^{-1}X_k^T

\end{aligned}

$$

$H$ is the so-called hat matrix, while $H_{[k]}$ is a submatrix of $H$. The original residual and the individual leave-$k$ out errors are described by

$$

\begin{align}

e_k&=z_k-X_k\hat\beta \\

e_{(k)}&=z_k-X_k\hat\beta_{(k)}\tag{1}\label{ek}

\end{align}

$$

where

$$

\hat\beta_{(k)}=A_{(k)}^{-1}X_{(k)}^Tz_{(k)}\tag{2}\label{beta1}

$$

We have the following identities

$$

\begin{align}

A_{(k)}=A-A_k \\

X_{(k)}^Tz_{(k)}=X^Tz-X_k^Tz_k \tag{3}\label{Xzk}

\end{align}

$$

From Woodbury identity we have the following

$$

\begin{aligned}

A_{(k)}^{-1}&=A^{-1}+A^{-1}X_k^T(I-X_k^TA^{-1}X_k)^{-1}X_kA^{-1}\\

&=A^{-1}+A^{-1}X_k^T(I-H_{[k]})^{-1}X_kA^{-1}

\end{aligned}

$$

Left multiplying $X_k$ gives us

$$

\begin{aligned}

X_kA_{(k)}^{-1}&=X_kA^{-1}+H_{[k]}(I-H_{[k]})^{-1}X_kA^{-1} \\

&=(I-H_{[k]})(I-H_{[k]})^{-1}X_kA^{-1}+H_{[k]}(I-H_{[k]})^{-1}X_kA^{-1} \\

&=(I-H_{[k]})^{-1}X_kA^{-1}

\end{aligned}

$$

Substituting $\eqref{Xzk}$ into $\eqref{beta1}$, we have from the above equation

$$

\begin{aligned}

X_k\hat\beta_{(k)}&=(I-H_{[k]})^{-1}X_kA^{-1}(X^Tz-X_k^Tz_k) \\

&= (I-H_{[k]})^{-1}(X_k\hat\beta - H_{[k]}z_k) \\

&= (I-H_{[k]})^{-1}(X_k\hat\beta - H_{[k]}z_k)

\end{aligned}

$$

This is finally inserted into the leave out formula $\eqref{ek}$ to get

$$

\begin{aligned}

e_{(k)} &= z_k-(I-H_{[k]})^{-1}(X_k\hat\beta - H_{[k]}z_k) \\

&= (I-H_{[k]})^{-1}\left[(I-H_{[k]})z_k-X_k\hat\beta+H_{[k]}z_k\right]\\

&= (I-H_{[k]})^{-1}(z_k-X_k\hat\beta) \\

&= (I-H_{[k]})^{-1}e_k

\end{aligned}

$$

which can be calculated by solving the following matrix equation to avoid the costly matrix inversion

$$

(I-H_{[k]})e_{(k)} = e_k

$$

Unlike the LOOCV formula which had only scalars, solving the above may not be as cheap, depending on the size of $k$.

Extra note regarding $H_{[k]}$: $H$ can be very big. If you have $n$ points, the size is $n\times n$. Fortunately, you don't need the whole matrix to calculate the submatrix. If $\hat\beta$ is written as follows

$$

\begin{aligned}

\hat\beta &= Mz \\

M &= A^{-1}X^T \\

\end{aligned}

$$

Then $M$ is typically determined by solving the following system

$$

AM = X^T

$$

The size of $B$ is $n\times m$, where $m$ is the number of bases. From here you can compute

$$

H_{[k]} = X_{k}M_k

$$

where $M_k$ are the columns from $M$ with the indices in $k$.

Another method is to use eigendecomposition on $X^TX$

$$

X^TX = PDP^T

$$

so that its inverse can calculated as

$$

(X^TX)^{-1} = PD^{-1}P^T

$$

Defining $Q=XP$, and $Q_{[k]}$ its $k$ rows, the hat matrix and its partial submatrix can be calculated as

$$

\begin{aligned}

H &= XPD^{-1}P^TX^T = QD^{-1}Q^T \\

H_{[k]} &= Q_{[k]}D^{-1}Q_{[k]}^T

\end{aligned}

$$

Of course, calculating eigendecomposition can still be expensive, and so is solving for $e_{(k)}$.

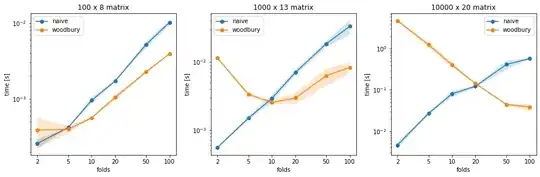

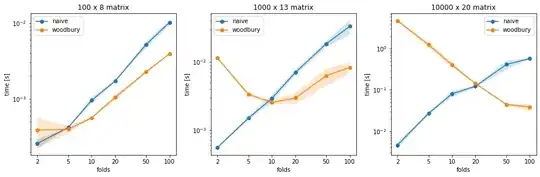

Therefore, this method is only worthwhile if the number of folds is large enough

compared to the data points. If the data is large, it might be better to stick with

the naive method for your typical 10-fold CV.

Here are some plots comparing the two approaches, for several folds and design matrix sizes.

(The code for the benchmark can be found here)