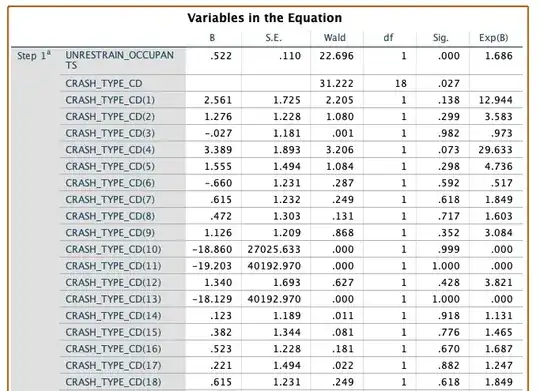

I am calculating rates, which can take any value between 0 and 1. Can it be normally distributed even though the domain is not the real numbers?

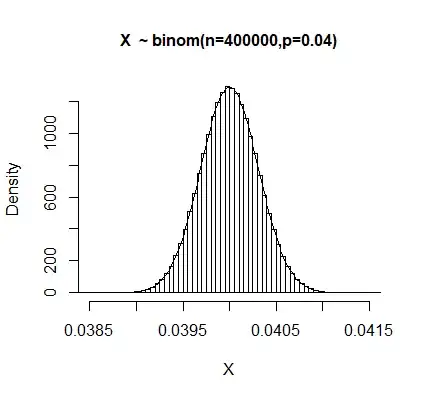

Thank you very much for the answers, here I represent the means of the data which are fitted a normal distribution on. I created something like 1000 means of the data using bootstrapping.

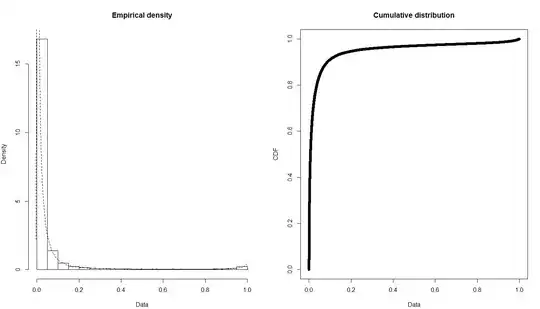

As for the raw data, it is indeed heavily skewed with a large positive skewness value. Based on your answers, the normality for t-test can't be assumed 100%. Instead of t-tests, I'm trying to calculate confidence intervals. I have one confidence interval for the prediction using bootstrapping, although I'm not a 100% sure this is the correct way. I'm comparing 4 predictive models to decide what gives the best results. Individual predicted rates are grouped by the age of the policy and taken their average, so the predictions are for example: for the age=4 the rate = 4.2%. I want to use another method for the CI, namely the Chebyshev's inequality. But for this I need to fit a distribution to the data. I already tried weibull, beta, gamma but none of them seem to work.

EDIT: The model I created predicts individual rates and I take the average of these rate to get the mean rate for a group. That mean has to be estimated correctly, also be assigned a CI to it. I figured that if I perform a t-test on every group between the model predictions and the actual values that need to be predicted (test dataset) and I get not significant p-values, then the model is good. I needed this information regarding the possible normality of the values because of the t-test.

Thank you very much for all the information you've give me so far! You are great!