If the response is count-valued, consider a modeling strategy that implicitly log-transforms the intensity rather than the count values themselves. A Poisson process with low intensity is likely to produce right-skewed results with many 0s, but log-transforming the data leads to highly biased estimates of the mean and of the SD, regardless of how the 0 values are handled. Fitting a negative binomial GLM by maximum likelihood, or a quasi-Poisson GLM by quasi-likelihood, is a simple and efficient way to summarize count data where applicable.
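To make the distinction concrete, here is a brief sketch in standard notation. The symbols $Y$ (response), $x$ (covariates), $\beta$ (coefficients), and $c > 0$ (the constant added before taking logs) are introduced here for illustration and do not come from the question. A log-link GLM models the log of the mean, whereas transforming the data models the mean of the log:

$$
\log \mathbb{E}[Y \mid x] = x^\top \beta
\qquad \text{versus} \qquad
\mathbb{E}[\log(Y + c) \mid x] = x^\top \beta
$$

Because the logarithm is concave, Jensen's inequality gives

$$
\mathbb{E}[\log(Y + c)] \le \log\big(\mathbb{E}[Y] + c\big)
\quad \Longrightarrow \quad
\exp\big(\mathbb{E}[\log(Y + c)]\big) - c \le \mathbb{E}[Y],
$$

with strict inequality unless $Y$ is constant. So a mean estimated on the log scale and then back-transformed sits below the actual mean, whatever value of $c$ is chosen.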

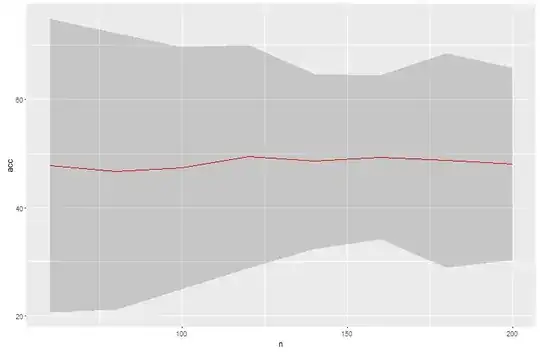

A log-transform alternative that has been explored for variables with 0 values is log1p, i.e. log(1 + x), where 1 is added to every value (not just to the 0 values) before applying the log transform. This lessens the severity of the truncation, but not satisfactorily. In other words, your approach is not justified regardless of the distribution's appearance post hoc. The resulting scatterplot is evidence of this.
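The bias can be seen in a small simulation. This is only a minimal sketch under stated assumptions: the intensity of 0.5, the sample size, and the seed are hypothetical choices, not values from the question, and the model is the simplest possible one (intercept only). In that case the MLE of a log-link Poisson GLM has a closed form, the log of the sample mean, so no fitting library is needed.

```python
import math
import random


def sample_poisson(lam, rng):
    """Draw one Poisson(lam) variate using Knuth's multiplication method."""
    threshold = math.exp(-lam)
    k, prod = 0, rng.random()
    while prod > threshold:
        k += 1
        prod *= rng.random()
    return k


rng = random.Random(0)   # fixed seed so the run is reproducible
true_intensity = 0.5     # hypothetical low intensity, so many zeros
counts = [sample_poisson(true_intensity, rng) for _ in range(10_000)]

# Intercept-only Poisson GLM with log link: the MLE of the log-intensity
# is log(sample mean), so the fitted mean is the sample mean itself.
glm_log_intensity = math.log(sum(counts) / len(counts))
glm_mean = math.exp(glm_log_intensity)

# log1p approach: average log(1 + y), then back-transform with expm1.
log1p_mean = math.expm1(sum(math.log1p(y) for y in counts) / len(counts))

# Drop-the-zeros approach: average log(y) over positive counts only.
positive = [y for y in counts if y > 0]
dropzero_mean = math.exp(sum(math.log(y) for y in positive) / len(positive))

print(f"true intensity          : {true_intensity}")
print(f"GLM (log link) mean     : {glm_mean:.3f}")
print(f"log1p back-transformed  : {log1p_mean:.3f}")
print(f"zeros dropped, then log : {dropzero_mean:.3f}")
```

The GLM estimate targets the intensity directly. The log1p estimate falls below the sample mean (this follows from Jensen's inequality, not from the particular seed), and the zeros-dropped estimate can never be smaller than 1, since every retained count is at least 1. Neither way of handling the zeros recovers the intensity.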

If the response is continuously valued, the distribution has mixture properties, and mixture modeling is one approach to estimating a density with better precision than the proposed approach.