One common way of splitting the data is into the 80%,10%,10%. EarlyStopping is used to prevent the model from overfitting. You could also do the "EarlyStopping" by hand.

You could run the model see at what point you overfit and then choose the model from the appropriate epoch (for which you need to save those models while training).

The usage of EarlyStopping just automates this process and you have additional parameters such as "patience" with which you can adapt the earlystopping rules.

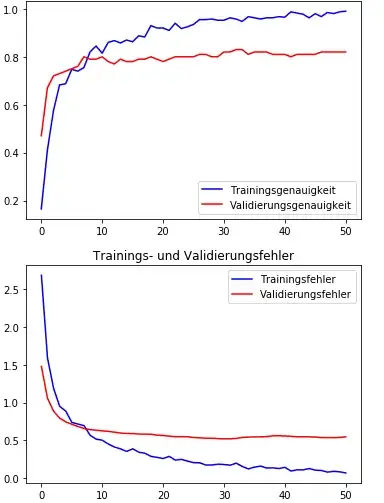

In your example you train your model for too long. You should definitely stop training the latest at epoch 30 where after the validation loss start to increase again. BUt you could already stop at epoch 10 as your loss only improves really slowly.

EarlyStoppping rules just help to automate this detection. But in general you should always stop training when the validation error increases.

It can be helpful to not only split 80:20 (but 80:10:10) because deciding to stop training based on the validation set can also overfit to the validationset.