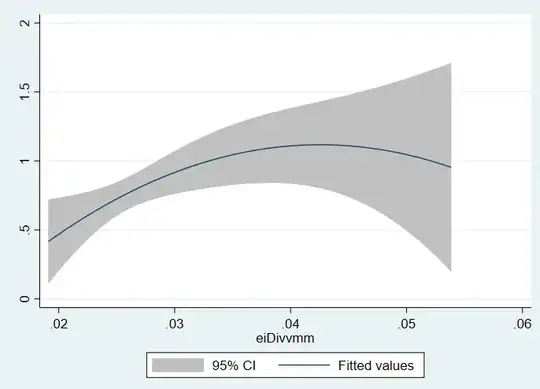

I have a study in which I find a decent correlation:  on a quadratic prediction plot between a binary outcome and a continuous x. However, there are a few observations that have numbers that are not unrealistic, but so much higher than the rest that they are being plotted in a category of their own in which of course the 95% CI is terrible as there is 1-2 observations above 0.035 in the x value. I suspect this may be the reason I am not getting significant p values like I would expect from this relationship.

on a quadratic prediction plot between a binary outcome and a continuous x. However, there are a few observations that have numbers that are not unrealistic, but so much higher than the rest that they are being plotted in a category of their own in which of course the 95% CI is terrible as there is 1-2 observations above 0.035 in the x value. I suspect this may be the reason I am not getting significant p values like I would expect from this relationship.

Would it be incorrect to simply remove these observations? Is there a tool that corrects for these outliers?

CSV file: https://gofile.io/?c=tHrojc

Measurement 1 and 2 are measurements done that are correlated and I believe their ratio may be able to predict the outcome. The ratio is Measurement3.