I trained a model in PyTorch on the EMNIST dataset and got about 85% accuracy on the test set. Now I have an image of handwritten text from which I have extracted individual letters, but the model's accuracy on these extracted images is very poor.

The mapping from class index to character that I'm using:

    letters_EMNIST = {0: '0', 1: '1', 2: '2', 3: '3', 4: '4', 5: '5', 6: '6', 7: '7', 8: '8', 9: '9',
                      10: 'A', 11: 'B', 12: 'C', 13: 'D', 14: 'E', 15: 'F', 16: 'G', 17: 'H', 18: 'I', 19: 'J',
                      20: 'K', 21: 'L', 22: 'M', 23: 'N', 24: 'O', 25: 'P', 26: 'Q', 27: 'R', 28: 'S', 29: 'T',
                      30: 'U', 31: 'V', 32: 'W', 33: 'X', 34: 'Y', 35: 'Z', 36: 'a', 37: 'b', 38: 'd', 39: 'e',
                      40: 'f', 41: 'g', 42: 'h', 43: 'n', 44: 'q', 45: 'r', 46: 't'}
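
To make the use of this mapping concrete, here is a minimal sketch of how a vector of 47 class scores could be decoded into a character. The helper name `decode_prediction` is hypothetical and not part of my actual code. It assumes the model emits one score per class in the same index order as the mapping above.

```python
# Same 47-class mapping as above, built compactly from a string.
letters_EMNIST = dict(enumerate(
    "0123456789"
    "ABCDEFGHIJKLMNOPQRSTUVWXYZ"
    "abdefghnqrt"
))


def decode_prediction(scores):
    """Return the character whose class index has the highest score.

    `scores` is assumed to be a flat sequence of 47 numbers, one per class
    (hypothetical helper, for illustration only).
    """
    if len(scores) != len(letters_EMNIST):
        raise ValueError(
            f"expected {len(letters_EMNIST)} scores, got {len(scores)}"
        )
    # Plain-Python argmax: index of the largest score.
    best_index = max(range(len(scores)), key=lambda i: scores[i])
    return letters_EMNIST[best_index]
```

With PyTorch, and assuming a single-image output of shape `(1, 47)`, this could be called as `decode_prediction(logits.squeeze(0).tolist())`.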

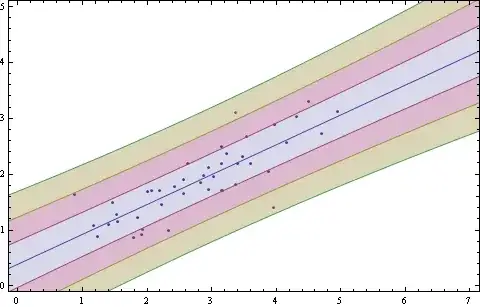

For reference, this is an example of an image from the test data -

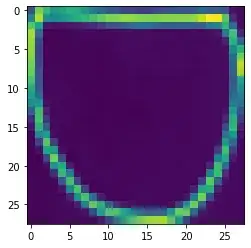

And this is an example of the image I extracted -

How can I debug this?
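
As a first sanity check, one option is to compare simple pixel statistics of a test-set image and an extracted image. Differences in size, value range, or polarity (dark-on-light versus light-on-dark) would show up right away. The sketch below is only illustrative: `image_stats` and `looks_inverted` are hypothetical helpers, and both images are assumed to be grayscale and available as a list of rows of numbers (for example via `array.tolist()`).

```python
def image_stats(pixels):
    """Summarise a grayscale image given as a list of rows of numbers."""
    flat = [value for row in pixels for value in row]
    if not flat:
        raise ValueError("image is empty")
    return {
        "shape": (len(pixels), len(pixels[0])),
        "min": min(flat),
        "max": max(flat),
        "mean": sum(flat) / len(flat),
    }


def looks_inverted(reference, candidate):
    """Heuristic: True if one image is mostly dark and the other mostly light."""
    def mostly_bright(stats):
        # Compare the mean against the midpoint of the image's own value range.
        midpoint = (stats["min"] + stats["max"]) / 2
        return stats["mean"] > midpoint

    return mostly_bright(image_stats(reference)) != mostly_bright(
        image_stats(candidate)
    )
```

If the shapes or value ranges differ, or `looks_inverted` returns `True`, the extracted images are probably not being preprocessed the same way as the training data.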