I am trying to develop my intuition about how to interpret an interaction between a time-varying predictor and time itself.

I have several years of routinely collected outcomes data from a drug and alcohol treatment service. I am interested in modeling the association the effect that amphetamine use has on Opioid use in clients enrolled in an Opiate Treatment Program.

there are four variables in the dataset,

pIDwhich is each client's unique identifieryearsFromStartwhich indicates the number of years from when clients commence treatment. If this variable is 0 it indicates that the measurment was made at the comencement of treatmentatsFactor. This is a categorical variable indicating how many days the client used amphetamines (called ATS or Amphetamine-Type Substances) in the 28 days previous to the day measurment was made. There are three levels of this variable,nowhich means the client used amphetamine on 0 das in the previous 28 days,Lowwhich means the client used amphetamines on 1-12 days in the previous 28 days, andHighwhich indicates the client used amphetamine on 13-28 days in the previous 28 days. 'no' use is the reference category.allOpioid. This is a continuous variable indicating on how many days in the previous 28 days the client used heroin.

Every client has outcomes data collected at commencement of treatment (i.e yearsFromStart = 0) but can have any number of follow-up measurements (from 1 to 11 in this dataset). In addition, there is no consistency to when follow-up measurements are made. it is also worth noting that every time frequency of opioid use is measured, frequency of amphetamine use is also measured.

Here is a sample of three clients' data in person-period (i.e. long) format

# pID yearsFromStart atsFactor allOpioids

# 1 10070474 0.6320081 none 0

# 2 10070474 0.1152882 none 23

# 3 10070474 0.0000000 none 28

# 4 10070474 0.6894973 none 0

# 5 11195140 0.1363944 none 3

# 6 11195140 0.2984505 none 2

# 7 11195140 0.7521694 none 1

# 8 11195140 0.5467925 none 2

# 9 11195140 0.0000000 none 28

# 10 11705183 0.1858126 low 1

# 11 11705183 0.0000000 low 8

# 12 11705183 0.1039756 low 6

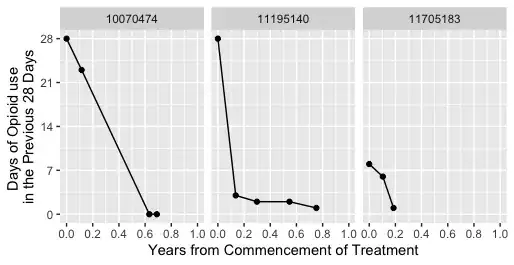

And here is what their opioid use data looks like as a figure

Now I want to model how amphetamine use predicts opioid use over the course of treatment. It is worth making clear that atsFactor is a time-varying predictor and I want to model its impact on frequency of opioid use, and how that impact changes the longer a client is in treatment. Therefore I chose a mixed-effects model with fixed effects yearsFromStart, atsFactor, and and the interaction between yearsFromStart and atsFactor. The model is a random slopes model with each client's trajectory of opioid use over time allowed to vary.

I used the lme() function in the nlme package in R. The model function looks like this

lme(fixed = allOpioids ~ yearsFromStart + atsFactor + yearsFromStart:atsFactor,

random = ~ yearsFromStart | pID,

data = df,

control = lmeControl(optimizer = "opt"),

method = "ML",

na.action = na.exclude))

And this is the output of the model

# Linear mixed-effects model fit by maximum likelihood

# Data: workDF

# AIC BIC logLik

# 18260.86 18319.92 -9120.432

#

# Random effects:

# Formula: ~yearsFromStart | pID

# Structure: General positive-definite, Log-Cholesky parametrization

# StdDev Corr

# (Intercept) 5.673737 (Intr)

# yearsFromStart 4.527000 -0.909

# Residual 5.837775

#

# Fixed effects: allOpioids ~ yearsFromStart + atsFactor + yearsFromStart:atsFactor

# Value Std.Error DF t-value p-value

# (Intercept) 3.109513 0.2616822 1854 11.882785 0e+00

# yearsFromStart -2.189954 0.3421356 1854 -6.400837 0e+00

# atsFactorlow 4.372409 0.5158199 1854 8.476621 0e+00

# atsFactorhigh 8.503671 1.1744451 1854 7.240586 0e+00

# yearsFromStart:atsFactorlow -3.079531 0.8297548 1854 -3.711375 2e-04

# yearsFromStart:atsFactorhigh -7.885443 2.0204646 1854 -3.902787 1e-04

#

# Number of Observations: 2712

# Number of Groups: 853

Inference

Now here is my attempt at interpreting the model.

The predicted number of days of opioid use for people who used no amphetamines in the previous 28 days at start of treatment (i.e.

yearsFromStart = 0)is 3.1.Low amphetamine use is associated with an extra 4.4 days use of opioids at start of treatment compared to no amphetamine use. High Amphetamine use is assoicated with an additional 8.5 days of opioid use.

If the person used no amphetamines in the previous 28 days, a year's treatmemt is associated with 2.2 fewer days of opioid use in the previous 28 days compared to commencement of treatment.

If the person had low amphetamine use in the previous 28 days, a year's treatmemt is associated with 2.2 + 3.1 = 5.3 fewer days of opioid use in the previous 28 days compared to commencement of treatment.

If the person had high amphetamine use in the previous 28 days, a year's treatmemt is associated with 2.2 + 7.9 = 10.1 fewer days of opioid use in the previous 28 days compared to commencement of treatment.

Question 1.

Is this the correct way to interpret a model where there is an interaction with a time-varying predictor and time?

If my interpretation is correct, would it then be true to say then that longer time in treatment reduces the impact of amphetamine use on concurrent opioid use? And furthermore would it be true to say that the extent to which time in treatment buffers the effect of amphetamine use on opioid use is greater the more amphetamines are used?

I don't want to overinterpret these results so it's important to me that I understand the implications of the results correctly.

Prediction

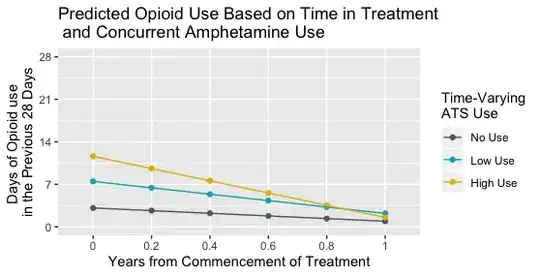

I went further and generated some predictive plots from the model, using the ggeffects package and its ggpredict function (see the answer to this post). I asked this function to predict opioid use for each of the three groups, no amphetamine use, low amphetamine use, and high amphetamine use, at six time points, start of treatment (yearsFromStart = 0), 0.2 years from start of treatment, 0.4 years, 0.6 years, 0.8 years, and 1.0 years.

This is what the predictive graph looks like.

Question 2

Now I am more used to interaction plots where there is an interaction between a time-invariant predictor and time, so that each line represents the average trajectory for some group where the group characteristic does not change, e.g. whether a person was male or female, whether a person's amphetamine use at baseline only was none, low or high. That makes sense to me.

But I am having trouble intuiting a plot like this. The issue of course is that with these data many people's amphetamine use may change over a year. So are these lines predictions of opioid use three hypothetical clients whose amphetamine use stayed the same across the year? If not then what does the figure show? Is it predicted Opioid use in the previous 28 days at each timepoint (0 years from start of treatment, 0.2 years from start of treatment, 0.4, 0.6, 0.8, and 1 years from treatment) for people whose frequency of amphetamine use was no, low, and high at that timepoint only?

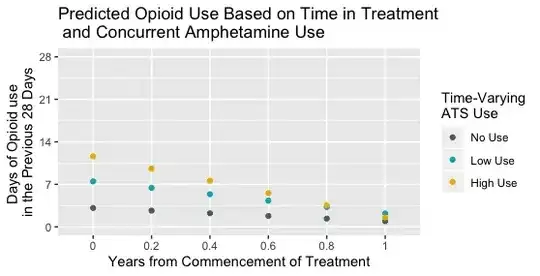

Would it be better to remove the lines in that case and just have the dots only, like this?

To me the lines imply some sense of continuity or consistency in amphetamine use over time, some kind of marginal opioid use trajectory for a person who represents an average participant of some kind.

Any help would be much appreciated. No one at my work has any experience with with models interacting time-varying coefficients with time.