Hello, all. When calculating the average of some time-spanning data (let's say the average of 20 weekly sales records from a specific store) while also calculating the standard deviation of that average, is it possible to scale these two estimators to another time period?

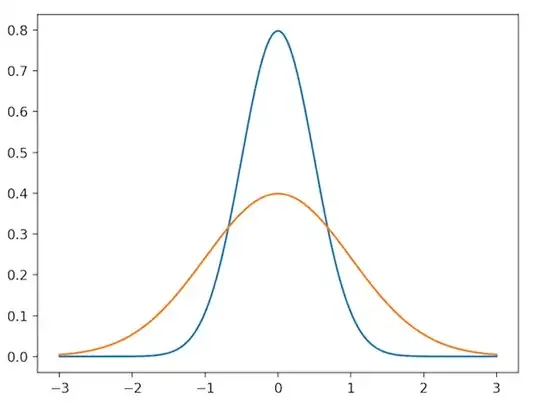

Essentially, what I am asking is whether non-Normal distributions can be added together the way Gaussian ones can.
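A small Monte Carlo sketch may help frame the question. It assumes the periods are independent and identically distributed, and it uses an Exponential distribution purely as a hypothetical stand-in for a skewed, non-Normal sales distribution (the helper names are also hypothetical). For any distribution with finite variance, the mean and the variance of a sum of independent periods add up; what does not carry over from the Gaussian case is the shape, measured here by skewness.

```python
import random
import statistics

def simulate_sums(n_periods: int, n_trials: int, seed: int = 0) -> list[float]:
    """Return n_trials totals, each the sum of n_periods independent Exponential(rate=1) draws."""
    rng = random.Random(seed)
    return [sum(rng.expovariate(1.0) for _ in range(n_periods)) for _ in range(n_trials)]

def mean_sd_skew(xs: list[float]) -> tuple[float, float, float]:
    """Sample mean, population-style standard deviation and skewness of xs."""
    m = statistics.fmean(xs)
    sd = statistics.fmean([(x - m) ** 2 for x in xs]) ** 0.5
    skew = statistics.fmean([(x - m) ** 3 for x in xs]) / sd ** 3
    return m, sd, skew

n = 4  # hypothetical number of periods being aggregated
mean_total, sd_total, skew_total = mean_sd_skew(simulate_sums(n, 100_000))

# A single Exponential(1) period has mean 1, sd 1 and skewness 2.
# For a sum of n i.i.d. periods, theory gives mean n, sd sqrt(n), skewness 2/sqrt(n).
print(f"mean {mean_total:.3f} (theory {n})")
print(f"sd   {sd_total:.3f} (theory {n ** 0.5:.3f})")
print(f"skew {skew_total:.3f} (theory {2 / n ** 0.5:.3f}; a Normal would have 0)")
```

Under these assumptions the mean and standard deviation of the total scale just as in the Gaussian case, but the total remains skewed, so Normal-based intervals for it are only approximate when few periods are aggregated.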

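In finance, for example, scaling $\sigma$ between time periods under Gaussian assumptions is a simple calculation: multiply the original $\sigma$ by the square root of the ratio of the two period lengths (e.g. $\sigma_{\text{annual}} = \sigma_{\text{daily}}\sqrt{252}$ for 252 trading days).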
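The square-root-of-time rule can be sketched as follows. This is a minimal sketch that assumes independent, identically distributed periods; the function name `scale_to_horizon` and the weekly figures are hypothetical, not taken from the post. It scales a one-period mean and standard deviation to the *total* over `n_periods`.

```python
import math

def scale_to_horizon(mean_per_period: float, sd_per_period: float, n_periods: int) -> tuple[float, float]:
    """Scale a one-period mean and standard deviation to the total over n_periods.

    Assumes the periods are independent and identically distributed: the mean and
    the variance of the total both grow linearly in n_periods, so the standard
    deviation grows with sqrt(n_periods).
    """
    if n_periods <= 0:
        raise ValueError("n_periods must be positive")
    return mean_per_period * n_periods, sd_per_period * math.sqrt(n_periods)

# Hypothetical weekly figures: mean 1000, standard deviation 200, scaled to 4 weeks.
total_mean, total_sd = scale_to_horizon(1000.0, 200.0, 4)
print(total_mean, total_sd)  # 4000.0 400.0
```

Note that the independence assumption, rather than Normality itself, is what makes the $\sqrt{n}$ factor work; autocorrelated periods would need a different adjustment.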

(The image is only loosely relevant.)