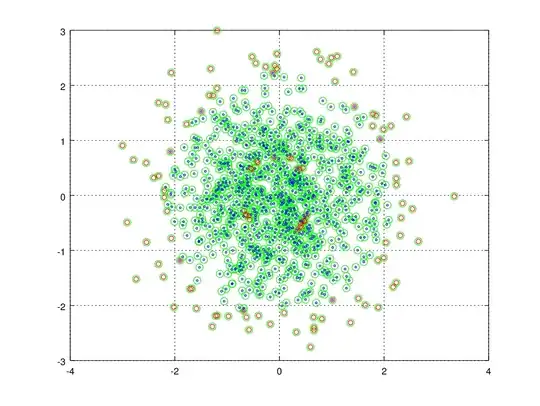

I am struggling to choose a metric for comparing model performance and for hyperparameter search. My task is similar to fraud detection.

I have found that many people state that the PR curve is better than the ROC curve for comparing performance on imbalanced data (which is absolutely my case). I will probably run hundreds of experiments, so I would rather stick to a single metric that gives one number.

That is why I am looking at the AUCs of the PR and ROC curves. I found that class imbalance actually has a bigger influence on PR AUC than on ROC AUC. Do you think I should use ROC AUC, or FPR at a given recall point, or is there something wrong with my plots? Is it possible that the PR curve is better than the ROC curve for imbalanced data, but the opposite holds for their AUCs?

Scores were obtained by sampling from two different Gaussian distributions, with mean = 0.1 for class 0 and mean = 1 for class 1. Scores histogram: green = scores for class 1, blue = scores for class 0.
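For anyone who wants to reproduce the effect, here is a minimal sketch of the kind of simulation described above. It is not my exact script. It assumes that the mean-0.1 Gaussian belongs to class 0 and the mean-1 Gaussian to class 1, and the standard deviation (`std=0.5`), the sample sizes, and the helper names `roc_auc`, `average_precision`, and `simulate` are all illustrative choices. PR AUC is approximated here by average precision, which is one common way to summarize the PR curve.

```python
import random


def roc_auc(labels, scores):
    """ROC AUC via the Mann-Whitney rank statistic (assumes no tied scores)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    # Sum of the 1-based ranks of the positive examples.
    rank_sum = sum(rank + 1 for rank, i in enumerate(order) if labels[i] == 1)
    return (rank_sum - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


def average_precision(labels, scores):
    """Mean of the precision measured at each positive, scanning high to low."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    true_positives = 0
    total = 0.0
    for k, i in enumerate(order, start=1):
        if labels[i] == 1:
            true_positives += 1
            total += true_positives / k
    return total / true_positives


def simulate(n_pos, n_neg, seed=0, std=0.5):
    """Gaussian scores: mean 1.0 for class 1, mean 0.1 for class 0 (assumed)."""
    rng = random.Random(seed)
    pos_scores = [rng.gauss(1.0, std) for _ in range(n_pos)]
    neg_scores = [rng.gauss(0.1, std) for _ in range(n_neg)]
    return [1] * n_pos + [0] * n_neg, pos_scores + neg_scores


# Keep the positives fixed and only add more negatives (stronger imbalance).
results = {}
for n_neg in (500, 5000, 50000):
    labels, scores = simulate(n_pos=500, n_neg=n_neg)
    results[n_neg] = (roc_auc(labels, scores), average_precision(labels, scores))
    print(f"neg:pos = {n_neg // 500}:1  "
          f"ROC AUC = {results[n_neg][0]:.3f}  AP = {results[n_neg][1]:.3f}")
```

With a setup like this, ROC AUC should stay roughly constant as negatives are added, because TPR and FPR are each computed within a single class. Average precision drops, because precision mixes both classes and so depends directly on the class ratio.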