I tried to build a neural net for learning XOR. The design is as follows:

1st layer: compute linear function of input 4:2 with 2:2 weights and adding 1:2 bias.

2nd layer: apply sigmoid to all elements in 4:2 matrix from layer 1.

3rd layer: compute linear function of the 2nd layer's output with 2:1 weights

final layer: apply sigmoid to 4:1 vector from the previous layer

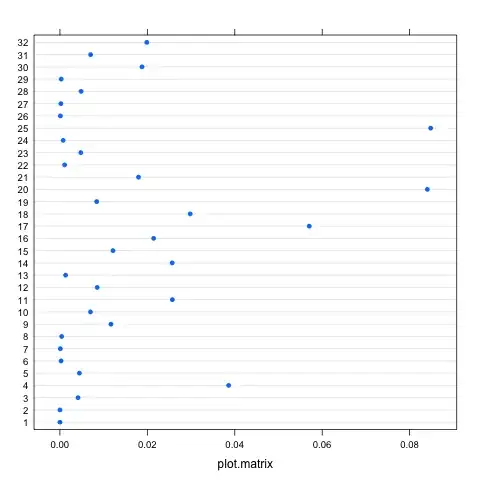

The model converges well after as much as 1,000,000 of iterations (I don't know if it's too big of a number for such a simple problem.

I get the following plot (X - iterations, Y - cost). It seems a little bit strange. Why it stays on the same cost value for so long? Could anyone explain is it a normal graph for such a problem and is anything wrong with my setup that causes such cost behavior? Thanks