I am referring to the Training / Validation / Test split used to choose a model while guarding against overfitting.

Here is how the argument goes:

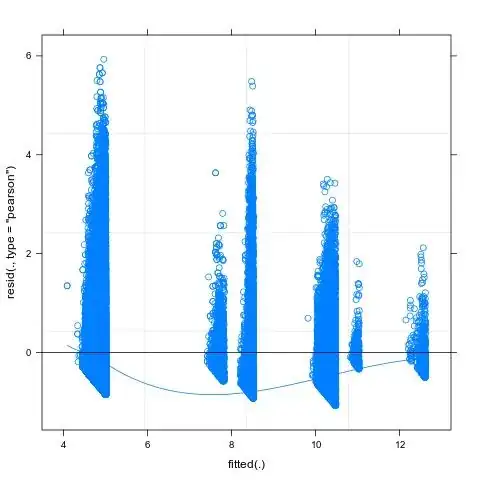

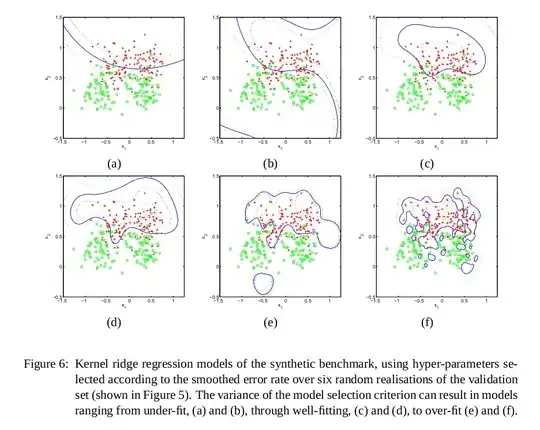

1. We train various models on the Training set. (This step is easy.) If there is any noise in this data set, then as we add features to the different models, they will overfit to the noise in the Training set. This is clear to me.
2. We then choose the best model on the Validation set. This will overfit the Validation set. This is not clear to me.
3. Because the best model has been overfit to the Validation set, we should evaluate it on the Test set to get a sense of its true error.
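The three steps can be sketched in a few lines of plain Python. Everything here is a hypothetical illustration rather than part of the original argument: the data are assumed to be y = x² plus Gaussian noise, the candidate models are k-nearest-neighbour regressors with different k (a smaller k means a more flexible model, playing the role of adding features), and the split is assumed to be 60% / 20% / 20%.

```python
import random

def make_data(n, seed):
    # Hypothetical data: y = x^2 plus Gaussian noise with standard deviation 0.3
    rng = random.Random(seed)
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, x * x + rng.gauss(0, 0.3)) for x in xs]

def knn_predict(train, x, k):
    # Average the targets of the k training points closest to x
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k

def mse(train, data, k):
    # Mean squared error on `data` of a k-NN model built from `train`
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in data) / len(data)

def split(data, seed):
    # Shuffle once, then cut into Training / Validation / Test (60% / 20% / 20%)
    data = data[:]
    random.Random(seed).shuffle(data)
    n = len(data)
    a, b = 3 * n // 5, 4 * n // 5
    return data[:a], data[a:b], data[b:]

data = make_data(300, seed=0)
train, val, test = split(data, seed=1)
candidates = [1, 3, 5, 10, 20, 50]

# Step (1): each candidate is fit on the Training set (k-NN simply stores it)
train_err = {k: mse(train, train, k) for k in candidates}
# Step (2): pick the candidate with the lowest Validation error
val_err = {k: mse(train, val, k) for k in candidates}
best_k = min(candidates, key=val_err.get)
# Step (3): report the chosen model's error on the untouched Test set
test_err = mse(train, test, best_k)
print(best_k, val_err[best_k], test_err)
```

With k=1 every training point is its own nearest neighbour, so its Training error is exactly zero; that is the overfitting described in step (1). The Validation set is used only to pick `best_k`, and the Test set is touched once at the end.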

My question is: when we are doing step (2), we can overfit the Validation set only if it contains the same noise as the Training set. However, we randomly shuffled the points before putting them into the Training / Validation / Test sets, so it is very unlikely that the Training and Validation sets share the same noise (I think this phenomenon is called twinning). That is why I think we will not overfit the Validation set.

Another situation in which the Validation set can be overfit is when we have a HUGE number of high-variance models: choosing the best one on the Validation set will then fit the noise in the Validation set. If I have only, say, 10 models, this also seems unlikely.
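The claim about many high-variance models can be checked with a small simulation. This is a sketch under stated assumptions: the labels are pure coin flips, and each candidate "model" is a hypothetical stand-in whose predictions on new points are unrelated to the labels, as a model fit only to noise would be. The Validation and Test labels are drawn independently, so there is no twinning.

```python
import random

def selection_gap(n_models, n_val=100, n_test=100, seed=0):
    """Return (best Validation accuracy, that model's Test accuracy) when
    choosing among n_models candidates that carry no real signal."""
    rng = random.Random(seed)
    # Labels are pure coin flips: no model can truly beat 50% accuracy.
    y_val = [rng.randint(0, 1) for _ in range(n_val)]
    y_test = [rng.randint(0, 1) for _ in range(n_test)]
    best_val, best_test = -1.0, None
    for _ in range(n_models):
        # Hypothetical stand-in for a high-variance model fit to noise:
        # its predictions on new points are independent of the true labels.
        p_val = [rng.randint(0, 1) for _ in range(n_val)]
        p_test = [rng.randint(0, 1) for _ in range(n_test)]
        acc_val = sum(p == y for p, y in zip(p_val, y_val)) / n_val
        if acc_val > best_val:
            # Keep the Validation winner and record its Test accuracy
            acc_test = sum(p == y for p, y in zip(p_test, y_test)) / n_test
            best_val, best_test = acc_val, acc_test
    return best_val, best_test

for m in (1, 10, 1000):
    print(m, selection_gap(m))
```

Because the seed is reused, the run with 1000 candidates contains the same first 10 candidates as the smaller run, so its best Validation accuracy can only be equal or higher, while the winner's Test accuracy stays around chance. Varying `n_models` and `n_val` shows how the optimism of the best Validation score depends on both the number of candidates and the size of the Validation set.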

That is why I think we don't need a Test set. I suspect I have misunderstood this topic; can someone please clarify where I am wrong?

My apologies for the delay in responding. I would like to clarify my question. We may aim to find the global optimum when using the Validation set; however, the contours of the functions that have been fit to the Training set are not free to learn the noise in the Validation set. That is the point I am not convinced about. Can you please give me an example where we overfit the Training set and then overfit the Validation set?

Here is one example. Suppose we are doing k-nearest neighbours and every item in the Training/Validation data occurs exactly twice. Then we will overfit both the Training and Validation sets and get k=1: the nearest neighbour will perfectly predict any chosen point. However, this example has "twinning": the SAME noise exists in the Training and Validation sets. Can you show me an example where we overfit the Training and Validation sets without twinning?
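The k-nearest-neighbours twinning example can be reproduced in a short sketch. It assumes a single feature x that carries no information (the labels are independent coin flips) and that each point has one copy in the Training set and one in the Validation set; the helper names are hypothetical. For comparison it also builds a Validation set drawn independently, with no twinning.

```python
import random

rng = random.Random(0)

def noise_points(n):
    # The feature x carries no information: each label is an independent coin flip
    return [(rng.random(), rng.randint(0, 1)) for _ in range(n)]

def knn(train, x, k):
    # Majority vote among the k training points closest to x (k is odd)
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return int(2 * sum(y for _, y in nearest) > k)

def accuracy(train, data, k):
    return sum(knn(train, x, k) == y for x, y in data) / len(data)

ks = [1, 5, 15, 51]
train = noise_points(200)
val_twinned = list(train)       # every training point reappears in validation
val_fresh = noise_points(200)   # independent validation points: no twinning

acc_twinned = {k: accuracy(train, val_twinned, k) for k in ks}
acc_fresh = {k: accuracy(train, val_fresh, k) for k in ks}
best_twinned = max(ks, key=acc_twinned.get)  # k=1 scores a perfect 1.0 here
best_fresh = max(ks, key=acc_fresh.get)
print(best_twinned, acc_twinned[best_twinned])
print(best_fresh, acc_fresh[best_fresh])
```

With twinning, k=1 wins with perfect Validation accuracy even though the labels are pure noise, because each Validation point finds its own copy in the Training set. With fresh Validation points, the winning k's Validation accuracy hovers near chance, although it can sit somewhat above 0.5 simply because it is the maximum over several candidates.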