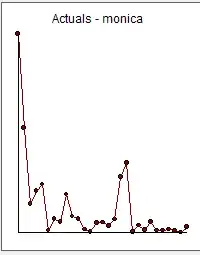

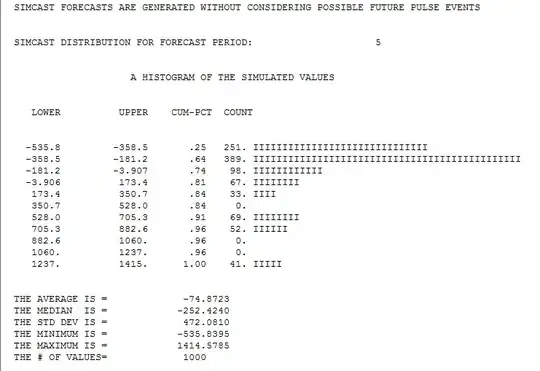

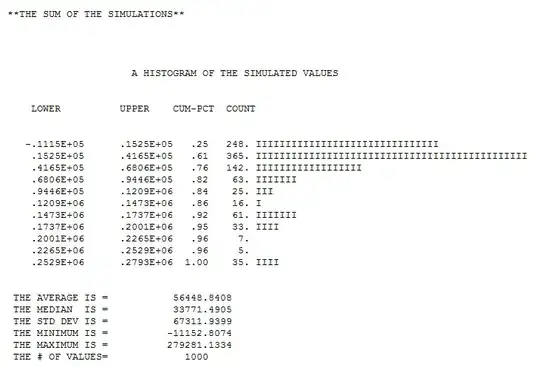

I am trying to predict the total sum of donations that Monica will receive on https://www.gofundme.com/f/stop-stack-overflow-from-defaming-its-users/

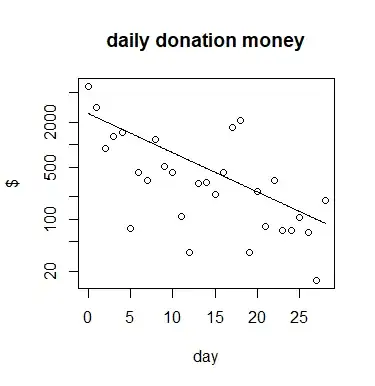

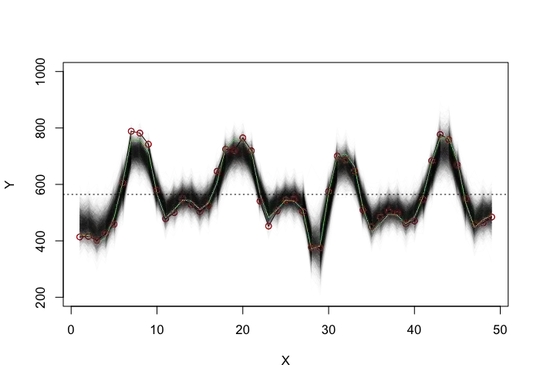

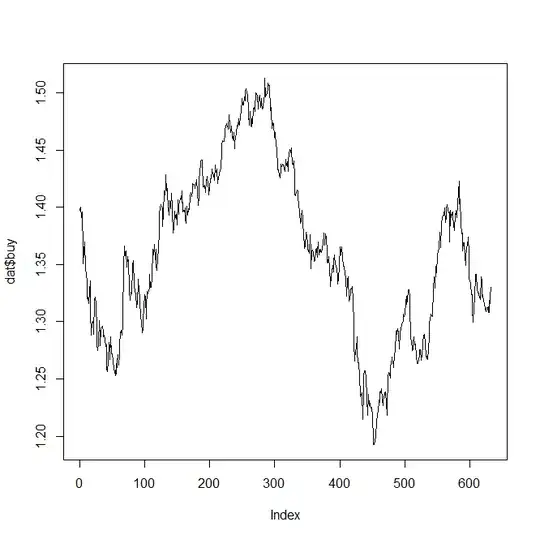

I copied the data and summed for all days the amount of donations. This results in the following data, plot and analysis:

# data

# note that the date values are day since beginning of crowd funding

# the value 6085 is the oldest (day 0) and the value 180 is the most recent (day 28)

m <- c(6085,3207,885,1279,1483,75,421,335,1176,504,430,110,36,299,314,215,417,1712,2141,35,235,80,330,70,70,105,65,15,180)

d <- c(0:28)

# plotting

plot(d,m, log = "y",

xlab = "day", ylab = "$",

main="daily donation money")

# adding model line

mod <- glm(m ~ d, family = quasipoisson(link='log'))

ds <- seq(0,28,0.1)

lines(ds,exp(coef(mod)[1]

+coef(mod)[2]*ds))

# integral for fitted line

exp(coef(mod)[1])/-coef(mod)[2]

When I integrate the fitted line until infinity then I get roughly ~ 21650 dollars as the total sum of money that will be donated.

My question is

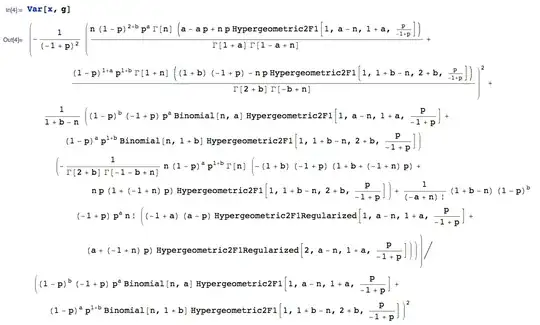

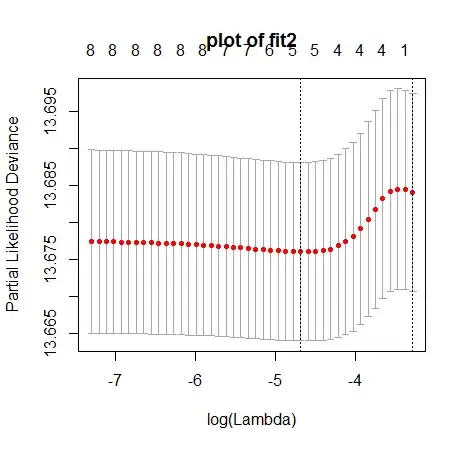

- How can I express the accuracy/variance of this predicted/forecasted value (based on the idea that the model is true)?

How do I incorporate the knowledge that the current sum of the data $\sum m=22309$ is already larger than the prediction/forecast based on the integral of the fitted line?

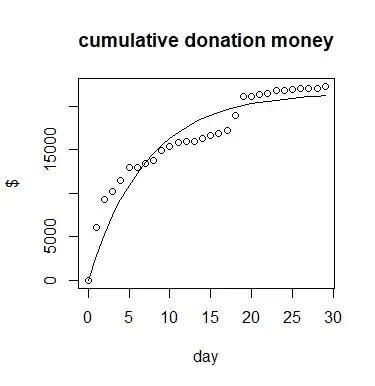

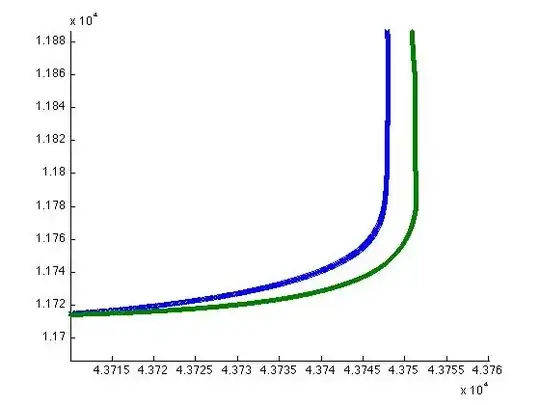

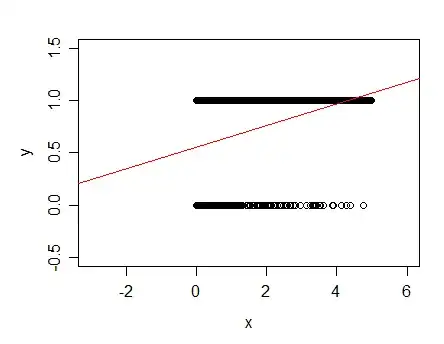

I imagine I could try fit the integral which is something like $\text{final sum} \times ( 1-e^{-ct})$ but I would not know how to treat the errors which will be correlated. And also I still get a small value (in the case below with simple least squares I get the final sum is 21580

t <- c(0,rev(d+1)) ms <- cumsum(c(0,rev(m))) plot(t,ms, xlab = "day", ylab = "$", main="cumulative donation money") mod2 <- nls(ms ~ tot * (1-exp(c*t)), start = list(tot =22000, c = -0.1)) lines(t,coef(mod2)[1] * (1-exp(coef(mod2)[2]*t)))

How should I handle the inaccuracies of my statistical model (In reality I do not have a perfect exponential curve and neither (quasi)Poisson distribution of errors, but I do not know well how to describe it better and how to incorporate these inaccuracies of the model into the error of the prediction/forecast)?

Update:

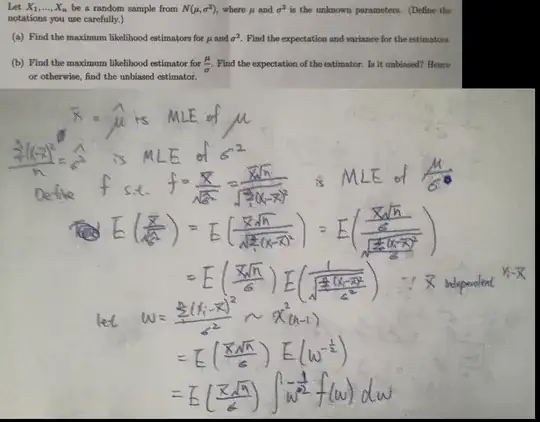

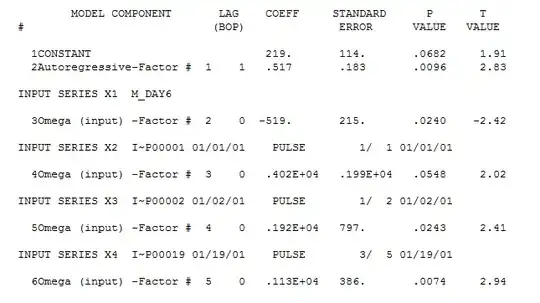

Regarding the questions 1 and 2

IrishStat commented that

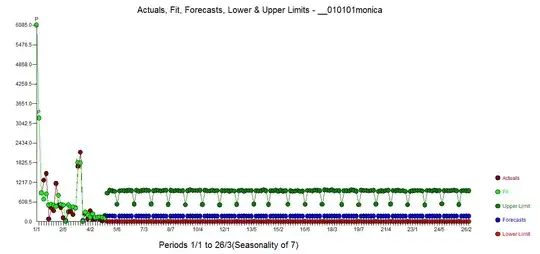

"you might want to accumulate predictions"

So what I did wrong is integrating the estimated values from day 0 to day infinity. But what I should do instead is integrate the estimated values from day 28 onward and add it to the current sum.

So what remains from question 1 and 2 is how to do this for the specific GLM model. If I sum predictions then I need to incorporate errors due to the data being random and due to my estimates being random. How can I add these sources of error together? Can I calculate or estimate this with a short formula or should estimate the error with a simulation?

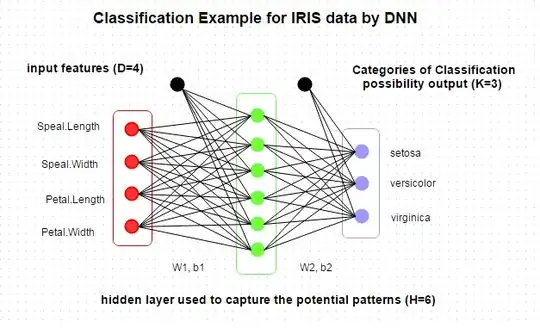

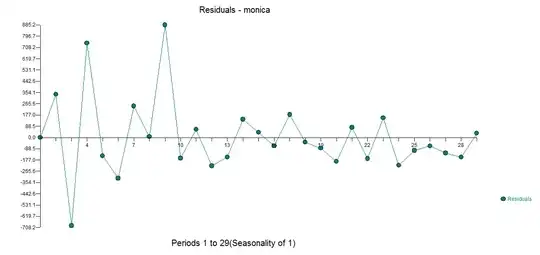

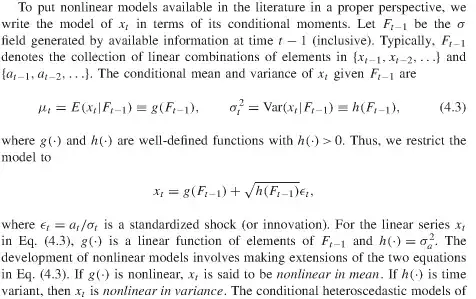

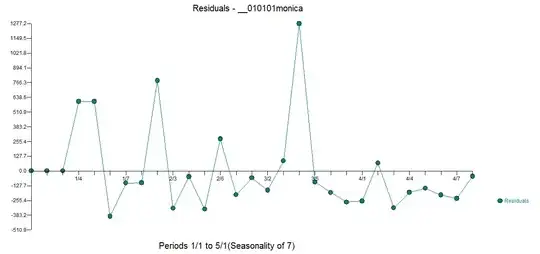

In addition question 3 remains. (IrishStat seems to suggest that I should treat it as an arima process, but how do I do this with the log-link function and quasi(Poisson) errors? )

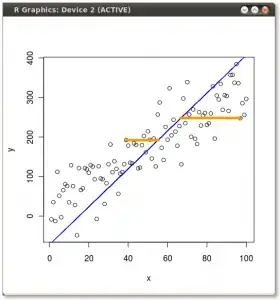

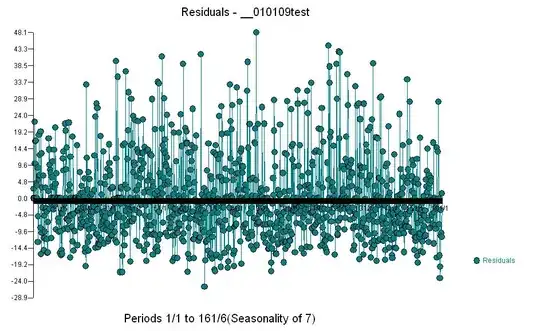

In this graph I have colored all Sundays, there seems to be a weekly pattern.