I am choosing parameter vectors $\beta$ and $\nu$ to minimize an expression of the form:

$$-\log{L(Y;X\beta,\nu)}+\frac{1}{2}\lambda {(\beta - \beta_0 )}^{\top} {(\beta - \beta_0 )}$$

where $\lambda$ is a regularization parameter, $\beta_0$ is a fixed constant, $L(Y;X\beta,\nu)$ is the likelihood of the observation vector $Y$ given $X\beta$ and $\nu$.(The actual likelihood is messy. However, it is the case that $\mathbb{E}Y=X\beta$.)

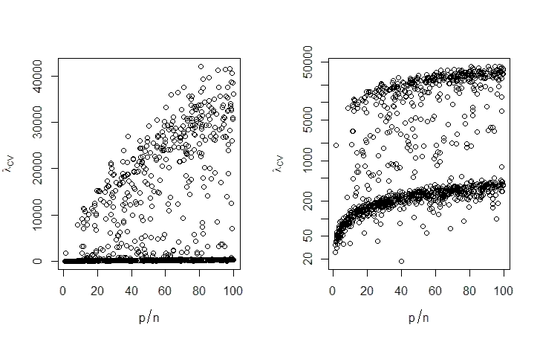

I have to solve many problems of this form. While it is computationally feasible to choose $\lambda$ by K-fold cross validation on an example problem, it is not computationally feasible to re-optimize $\lambda$ for every different $X$.

How should I scale $\lambda$ as the dimensions $n\times p$ of $X$ vary?

Does it matter that in my particular application I am optimizing subject to the constraints that $\beta\ge 0$ and $\beta^\top 1_p = 1$ (where also $\beta_0^\top 1_p = 1$)?

An answer to this question (partly clarified below) suggests that for linear regression, it may be optimal to have $\lambda=O_p(p)$ (on the order of $p$, in probability) as $p\rightarrow \infty$. If I've understood correctly, is it reasonable to assume this generalizes to non-Gaussian likelihoods?