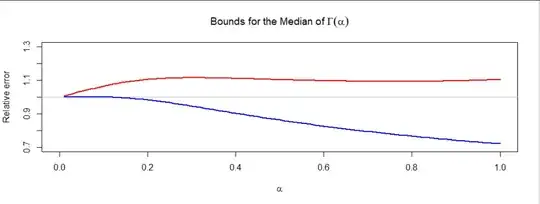

The other answer by whuber gives some nice simple bounds for the median. In this answer I will give an alternative closed form approximation based on finite Newton iteration to the true quantile. My answer uses the lower incomplete Gamma function $\gamma$ (so perhaps you consider that cheating), but it does not require the inverse function. in nay case, the quantile equation $F(x) = p$ can be written as:

$$\begin{align}

p = F(x)

= \frac{\gamma(\alpha, x)}{\Gamma(\alpha)}.

\end{align}$$

We can rewrite this as the implicit equation $H(x|p,\alpha)=0$ using the function:

$$H(x|p,\alpha) \equiv \Big[ p \Gamma(\alpha) - \gamma(\alpha, x) \Big] e^x.$$

The first and second derivatives of this function are:

$$\begin{align}

\frac{dH}{dx}(x|p,\alpha)

&= H(x|p,\alpha) - x^{\alpha-1}, \\[12pt]

\frac{d^2 H}{dx^2}(x|p,\alpha)

&= H(x|p,\alpha) - x^{\alpha-1} - (\alpha-1) x^{\alpha-2}. \\[6pt]

\end{align}$$

The second order Newton equation is:

$$x_{t+1} = x_t - \frac{H(x_t|p,\alpha)}{H(x_t|p,\alpha) - x_t^{\alpha-1}}

\Bigg[ 1 + \frac{H(x_t|p,\alpha) (H(x_t|p,\alpha) - x^{\alpha-1} - (\alpha-1) x^{\alpha-2})}{2 (H(x_t|p,\alpha) - x^{\alpha-1})^2} \Bigg].$$

Unless I am mistaken, the initial power series here is valid for all $\alpha>0$ so it should work for the range of values of interest in your question. If you start at a point reasonably close to the true quantile (e.g., one of the bounds that whuber gives in his answer), we would expect fairly rapid convergence to the true quantile. Thus, a valid approximation to the true quantile would be to run this Newton iteration some finite number of steps.

Testing this iterative method: Here is a simple example to confirm that the iterative method is working. Suppose we consider the median of the distribution $\text{Gamma}(2, 1)$. We will start the iterative procedure at the upper-bound approximation in whuber's answer, which is:

$$x_0 = 2 \log 2 = 1.678347.$$

In the code below we will use $m = 4$ iterations of the Newton method, which gives the tiny approximation error of $-2.220446 \times 10^{-16}$. This code uses second-order Newton, but it is possible to get quite a good approximation even with the first order approximation.

#Define the implicit function

H <- function(x, p, alpha) {

H <- gamma(alpha)*(p - pgamma(x, alpha, 1))*exp(x);

attr(H, 'gradient') <- H - x^(alpha-1);

attr(H, 'Hessian') <- attributes(H)$gradient - (alpha-1)*x^(alpha-2);

H; }

#Set the parameters

alpha <- 2;

p <- 0.5;

#Perform m Newton iterations

m <- 4;

x <- rep(NA, m+1);

x[1] <- alpha*log(2);

for (t in 1:m) {

HHH <- H(x[t], p, alpha);

HHD <- attributes(HHH)$gradient;

HDD <- attributes(HHH)$gradient;

x[t+1] <- x[t] - HHH/HHD*(1 + HHH*HDD/(2*HHD^2)); }

#Here is the approximation

x[m+1];

[1] 1.678347

#Here is the true median

qgamma(p, alpha, 1);

[1] 1.678347

#Here is the approximation error

x[m+1] - qgamma(p, alpha, 1)

[1] -2.220446e-16

Anyway, I'm not sure if this type of approximation is useful for your purposes, but it does involve a finite number of iterations. Obviously it uses the evaluation of the incomplete Gamma function, so it is not "closed form". It may be possible to create a closed form version by using a closed form approximation to the incomplete gamma function, and that would allow you to start at the approximation given by whuber, and then iterate towards the true median.