Let $X_1, X_2, \cdots X_n$ be iid with mean $\mu$ and variance $\sigma^2$. Lets look at the class of estimators

$$S^2_j = \frac{1}{n-j}\sum_{i=1}^n(X_i- \bar X)^2$$

Using this notation, $S_1^2$ is the usual sample variance and $S_0^2$ is the variant where we divide by the sample space.

The sample variance is unbiased for $\sigma^2$

The derivation of this fact is fairly straightforward. Let's start by finding the expected value of $S_j^2$ for all $j$.

\begin{align}

E(S_j^2) &= \frac{1}{n-j}E\left(\sum_{i=1}^n(X_i- \bar X)^2 \right) \\

&= \frac{1}{n-j}E\left(\sum_{i=1}^nX_i^2 - n\bar X^2\right) && \text{"short-cut formula"} \\

&= \frac{1}{n-j}\left(\sum_{i=1}^nE(X_i^2) - nE(\bar X^2)\right) \\

&= \frac{1}{n-j}\left(\sum_{i=1}^n(Var(X_i) - E(X_i)^2) + n(Var(\bar X) + E(\bar X)^2)\right) \\

&= \frac{1}{n-j}\left(n(\sigma^2 + \mu^2) - n(\sigma^2/n + \mu^2)\right)\\[1.2ex]

&= \frac{n-1}{n-j}\sigma^2.

\end{align}

The bias for this class of estimators is therefore

$$B(S_j^2) = E(S_j^2) - \sigma^2 = \frac{j-1}{n-j}\sigma^2$$

which is clearly equal to $0$ if (and only if) $j=1$.

MSE under normality

Mean squared error is a popular criteria for evaluating estimators which considers the bias-variance tradeoff. Lets consider the case where $X_1, \cdots X_n \stackrel{\text{iid}}{\sim} N(\mu, \sigma^2)$. Under normality, we can show that

$$\frac{(n-j)S_j^2}{\sigma^2} \sim \chi^2(n-1).$$

The expected value (and hence the bias) is the same as before. The chi-square result provides an easy way of calculating the variance for this class of estimators.

Since the variance of a $\chi^2(v)$ RV is $2v$, we have that

$$\text{Var}\left(\frac{(n-j)S_j^2}{\sigma^2}\right) = 2(n-1).$$

We also have that

$$\text{Var}\left(\frac{(n-j)S_j^2}{\sigma^2}\right) = \frac{(n-j)^2}{\sigma^4}\text{Var}(S_j^2).$$

Putting these pieces together implies that $$Var(S_j^2) = \frac{2\sigma^4(n-1)}{(n-j)^2}.$$

Therefore the MSE of $S_j^2$ is

$$MSE(S_j^2) = B(S_j^2)^2 + Var(S_j^2) = \sigma^4\left(\frac{2(n-1) + (j-1)^2}{(n-j)^2} \right)$$

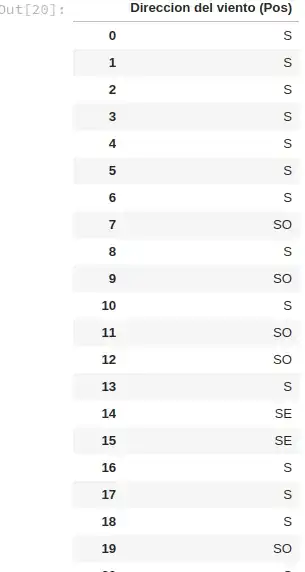

Here is a plot of the MSE as a function of $j$ for $\sigma = 1$ and $n=30$.

According to MSE, the method of moments (divide by $n$) estimator $S_0^2$ is preferable to the sample variance $S_1^2$. The truly surprising result here is that the "optimal" estimator according to MSE is

$$S_{-1}^2 = \frac{1}{n+1}\sum_{i=1}^n(X_i- \bar X)^2.$$

Despite this result, I've never seen anybody use this as an estimator in practice. The reason this happens, is that MSE is exchanging bias for a reduction in variance. By artificially shrinking the estimator towards zero, we get an improvement in MSE (this is an example of Stein's Paradox).

So is the sample variance a better estimator? It depends on your criteria and your underlying goals. Although dividing by $n$ (or even, strangely, by $n+1$) leads to a reduction in MSE, it is important to note that this reduction in MSE is negligible when the sample size is large. The sample variance has some nice properties including unbiasedness which leads to its popularity in practice.