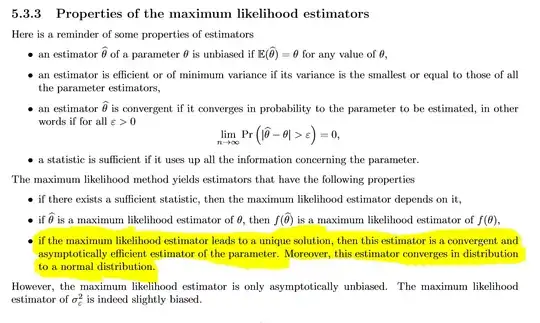

- One property of the maximum likelihood estimator is that it asymptotically follows a normal distribution if the solution is unique.

- For a continuous uniform distribution $U[0,\theta]$, the maximum likelihood estimator of the upper bound $\theta$ is the sample maximum, $\hat\theta = \max_i X_i$.
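For reference, the second point follows directly from the likelihood of an i.i.d. sample $X_1, \dots, X_n$ from $U[0,\theta]$ (assuming, as holds almost surely, that every $X_i \ge 0$):

$$
L(\theta) = \prod_{i=1}^{n} \frac{1}{\theta}\,\mathbf{1}\{0 \le X_i \le \theta\}
= \theta^{-n}\,\mathbf{1}\Big\{\theta \ge \max_{i} X_i\Big\}.
$$

Since $\theta^{-n}$ is strictly decreasing in $\theta$, the likelihood is maximized at the smallest admissible value, i.e. $\hat\theta = \max_i X_i$.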

I am having a hard time seeing how the distribution of the maximum could converge in distribution to a Gaussian.

In the question "Question about asymptotic distribution of the maximum", it is claimed that the maximum of a sample $X_1, \dots, X_n$ from $U[0,\theta]$ with $\theta = 1$ follows a Beta distribution.
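The Beta claim can be checked with a small Monte Carlo sketch. For $\theta = 1$, independence gives $P(\max_i X_i \le x) = \prod_i P(X_i \le x) = x^n$ on $[0,1]$, which is the CDF of a $\text{Beta}(n, 1)$ distribution. The helper names `sample_max` and `beta_n1_cdf`, the choice $n = 10$ and the 100 000 replications are illustrative assumptions of mine, not taken from the linked question.

```python
import numpy as np

def sample_max(n, theta=1.0, reps=100_000, seed=0):
    """Draw `reps` realizations of max(X_1, ..., X_n) with X_i ~ U[0, theta]."""
    rng = np.random.default_rng(seed)
    return rng.uniform(0.0, theta, size=(reps, n)).max(axis=1)

def beta_n1_cdf(x, n):
    """CDF of Beta(n, 1) on [0, 1]: P(M <= x) = x**n."""
    return np.clip(x, 0.0, 1.0) ** n

n = 10
m = sample_max(n)
grid = np.linspace(0.0, 1.0, 11)
# Empirical CDF of the simulated maxima, evaluated at each grid point
ecdf = (m[:, None] <= grid[None, :]).mean(axis=0)
max_gap = np.abs(ecdf - beta_n1_cdf(grid, n)).max()
print(f"largest |ECDF - x^n| on the grid: {max_gap:.4f}")
```

With this many replications the gap between the empirical CDF and $x^n$ should be only a few thousandths.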

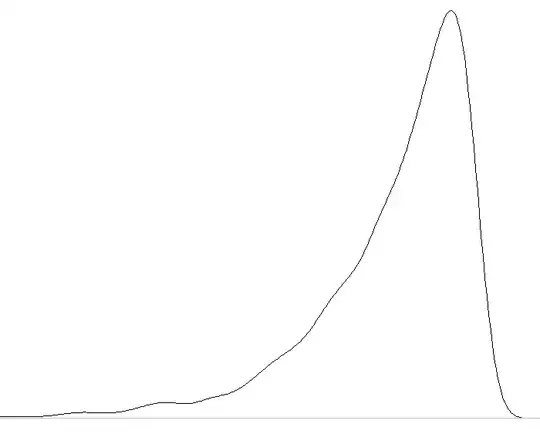

I also tried to work it out empirically and always obtained more or less the result shown in the graph below. Logically, too (at least by my logic), the distribution should never be able to converge to a Gaussian: the expected value of $\hat\theta$ is asymptotically equal to $\theta$, and because every $X_i$ must be smaller than $\theta$, $\hat\theta$ can never exceed $\theta$. Hence, asymptotically, there can be no values to the right of $E[\hat\theta]$, which makes convergence to a normal distribution impossible.
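The empirical check described above can be reproduced with a short Monte Carlo sketch in Python with NumPy. The sample sizes, the number of replications and the helper names `mle_max` and `sample_skewness` are illustrative assumptions. For each $n$ it records the largest simulated $\hat\theta$, the average of $\hat\theta$ (to compare with $E[\hat\theta] = \frac{n}{n+1}\theta$, the mean of the sample maximum) and the sample skewness, which for a Gaussian would be close to 0.

```python
import numpy as np

def mle_max(n, theta, reps, rng):
    """Simulate the MLE theta_hat = max(X_1, ..., X_n) for X_i ~ U[0, theta]."""
    return rng.uniform(0.0, theta, size=(reps, n)).max(axis=1)

def sample_skewness(x):
    """Moment estimator of skewness: mean((x - mean)^3) / sd^3."""
    d = x - x.mean()
    return (d ** 3).mean() / (d ** 2).mean() ** 1.5

rng = np.random.default_rng(1)
theta = 1.0
results = {}
for n in (10, 50, 250):
    est = mle_max(n, theta, reps=20_000, rng=rng)
    results[n] = (est.max(), est.mean(), sample_skewness(est))
    mx, mean, skew = results[n]
    print(f"n={n:4d}  max={mx:.5f}  mean={mean:.5f}  "
          f"n/(n+1)={n / (n + 1):.5f}  skew={skew:.3f}")
```

In runs like this, $\hat\theta$ never exceeds $\theta$ and the skewness stays clearly negative instead of shrinking towards 0 as $n$ grows, which matches the shape described above.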

Where is my mistake? I have not found a similar question that addresses this contradiction.