Gauss-hermite (GH) quadrature is a very efficient method compared to the Monte Carlo method (MC). If you care about the trade off between precision versus speed (here measured by the number of function evaluations) GH is far superior to MC sampling for the specific integral here under consideration.

The software I use for solving the integral by Gauss-Hermite procedure approximates the integral

$$(1) \ \ \int_{-\infty}^\infty \exp\left(-z^2\right)g(z) dz$$

as a weighted sum

$$\approx \sum_i w(z_i)g(z_i)$$

The procedure is to select the number of points $N$ here referred to as the sample size. The software will then return $w_1,...,w_N$ weights and $z_1,...,z_N$ nodes. These nodes are plugged into the function $g()$ to get $g_i = g(z_i)$ and then the user computes $$\sum_i w_i g_i.$$

To apply the procedure the first step is therefore to rewrite the integral $\int_{-\infty}^\infty \exp\left(-z^2\right)g(z) dz$ to the integral under consideration - up to a known constant of proportionality - which can be done by letting $$z = (x-\mu)/(\sqrt{2v})$$ and by letting $$g(z) = \log(1+\exp(\mu +\sqrt{2v}z)).$$ By substitution it follows that

$$(2) \ \ \int_{-\infty}^\infty \exp\left(-z^2\right)g(z) dz = \frac{1}{2v}\int_{-\infty}^\infty \exp\left(- \frac{(x-\mu)^2}{2v} \right)\log(1+\exp(x))dx$$

since the R code approximates the integral on the left hand side the result has to be multiplied with the constant $2v$ to get the result the OP is looking for.

The integral is then solved in R by the following code

library(statmod)

g <- function(z) log(1+exp(mu + sqrt(2*v)*z))

hermite <- gauss.quad(50,kind="hermite")

sum(hermite$weights * g(hermite$nodes))*sqrt(2*v)

The method is here no different than the MC method because the MC method also presuppose that the integral under consideration can be written on a certain form more precisely

$$\int_{-\infty}^{\infty}h(x)f(x) dx$$

where $h(x)$ is a distribution it is possible to sample from. In the current case this is particularly easy because the OP's problem can be written as

$$\sqrt{2\pi v}\int_{-\infty}^\infty\frac{1}{\sqrt{2\pi v}} \exp\left(- \frac{(x-\mu)^2}{2v} \right)\log(1+\exp(x))dx$$

The OP's problem is then given as

$$\sqrt{2\pi v} \ \mathbb E[\log(1+\exp(x))] \approx \sqrt{2\pi v} \frac{1}{N} \sum_i \log(1+\exp(x_i))$$

for a sample $\{x_i\}_{i=1}^N$ where $x_i$ is a random normal draw of mean $\mu$ and variance $v$. This can be done using the following R code

f <- function(x) log(1+exp(x))

w <- rnorm(1000,mean=mu,sd=sqrt(v))

sqrt(2*pi*v)*mean(f(w))

Rough Comparison

To make a rough comparison first note that both the MC method and the GH method makes an approximation of the form

$$\sum_{i=1}^N weight_i \cdot F(s_i)$$

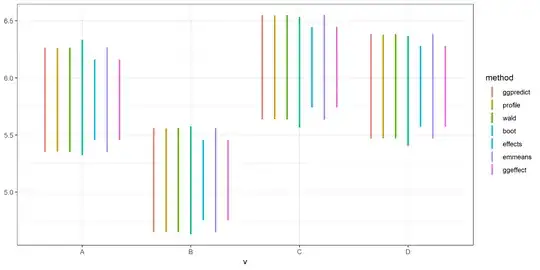

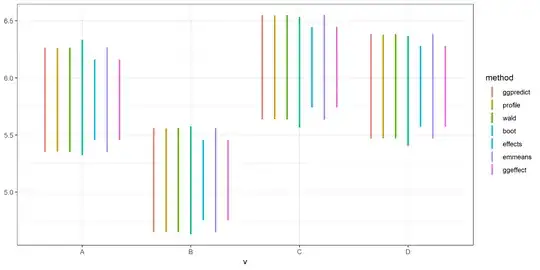

it therefore seems reasonable to compare the methods on the precision they achieve for a given sample size $N$. In order to do this I use the R function integrate() to find the "TRUE" value of the integral and then calculate the deviation from this assumed true value for different sample sizes $N$ for both the MC method and the GH method. The result is displayed in the plot  which clearly show how the GH method gets very close even for a small number of function evaluations as indicated by the red line quickly going to 0 whereas MC integration is very slowly goes to zero.

which clearly show how the GH method gets very close even for a small number of function evaluations as indicated by the red line quickly going to 0 whereas MC integration is very slowly goes to zero.

The plot is generated using the following code

library(statmod)

mu <- 0

v <- 10

f <- function(x) log(1+exp(x))

g <- function(z) log(1+exp(mu + sqrt(2*v)*z))

h <- function(x) exp(-(x-mu)^2/(2*v))*log(1+exp(x))

# Monte Carlo integration using function f

w <- rnorm(1000,mean=mu,sd=sqrt(v))

sqrt(2*pi*v)*mean(f(w))

# Integration using build in R function

int.sol <- integrate(h,-500,500)

int.sol$value

# Integration using Gauss-Hermite

hermite <- gauss.quad(50,kind="hermite")

sum(hermite$weights * g(hermite$nodes))*sqrt(2*v)

# Now wolve for different sample sizes

# Set up the sample sizes

sample_size <- 1:300

error <- matrix(0,ncol=length(sample_size),nrow=2)

for (i in 1:length(sample_size))

{

MC <- rep(0,1000)

for (j in 1:1000)

{

w <- rnorm(sample_size[i],mean=mu,sd=sqrt(v))

MC[j] <- sqrt(2*pi*v)*mean(f(w))

}

error[1,i] <- mean(abs(MC - int.sol$value))

hermite <- gauss.quad(sample_size[i],kind="hermite")

her.sol <- sum(hermite$weights * g(hermite$nodes))*sqrt(2*v)

error[2,i] <- (abs(her.sol - int.sol$value))

}

plot(sample_size,error[1,],ylim=c(0,10),type="l",ylab="error")

points(sample_size,error[2,],type="l",lwd=2,col="red")