You ask four questions.

-

As a first visual test you could check the scatterplot of $(U_i, U_{i-1}).$ The output should fill the unit square almost evenly: in this case we can conclude that $U_i$ is uncorrelated with $U_{i-1}.$ Can we also conclude that $U_i$ is independent of $U_{i-1}$?

No. First, modern pseudorandom number generators (such as those used in most statistical applications) will behave exactly as described, but because they provide deterministic sequences of values (albeit extremely long ones), they cannot be truly independent.

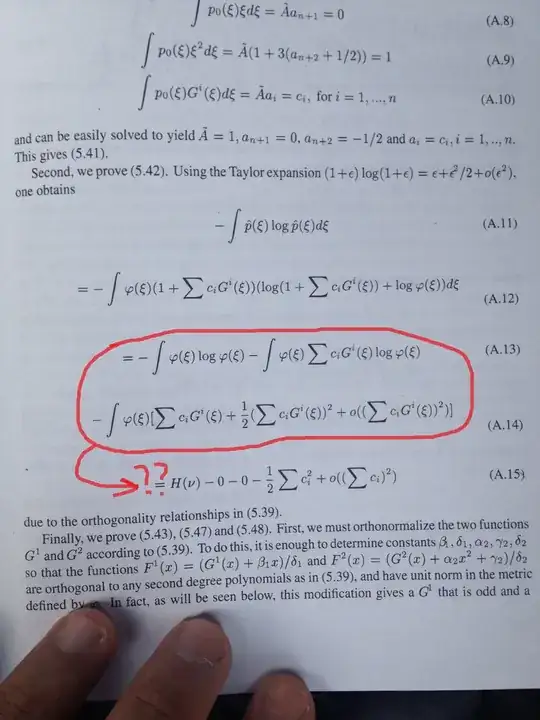

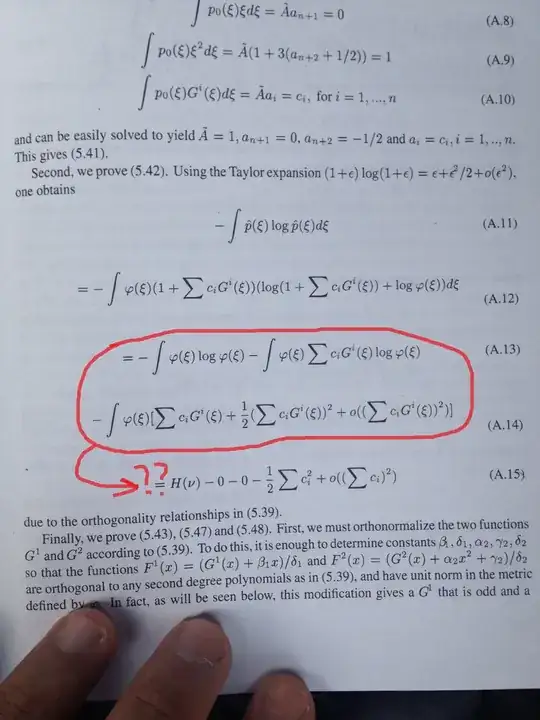

This argument will not convince some people, who will (rightly) argue that the lack of independence is so slight that it couldn't possibly matter. Allow me, then, to exhibit an example involving a sequence of just three uniform random variables $(U_1,U_2,U_3)$ that behave as described but are demonstrably not independent. Here is a scatterplot matrix of the first thousand realizations showing how the pairs $(U_i,U_{i-1})$ are uniformly filling the unit square:

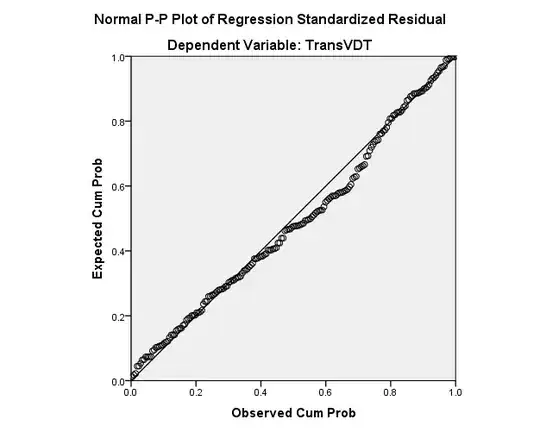

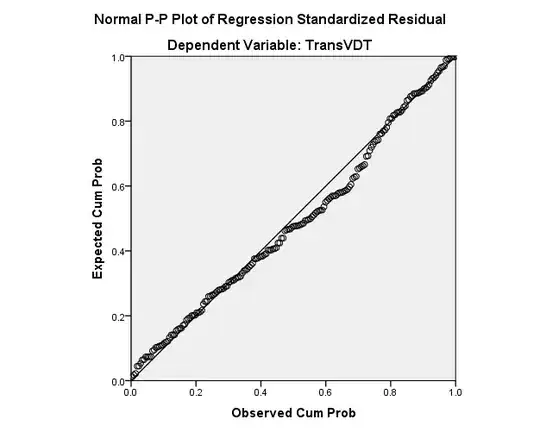

However, the three variables are not independent, as this map of $U_3$ versus $U_1$ and $U_2$ demonstrates:

Here is how the variables were generated. We begin with a set $\Omega$ of integer vectors,

$$\Omega = \{(0,0,0),\ (0,1,1),\ (1,0,1),\ (1,1,0)\},$$

and give it the uniform probability distribution (so each element is chosen with $1/4$ probability).

To create one realization of $(U_1,U_2,U_3),$ take an infinite sequence $(\omega_i),i=1,2,\ldots,n,\ldots$ of independent draws from $\Omega.$ Writing $\omega_{ij}$ for component $j$ of $\omega_i,$ set

$$U_i = \sum_{j=1}^\infty \omega_{ij}2^{-j}.$$

In effect, for each $j$ the sequence $(\omega_{ij})$ is a random string of zeros and ones which is interpreted as the binary representation of a number between $0$ and $1.$ It is obvious--and straightforward to prove--that each $U_i$ has a uniform distribution. (See Method #5 in my post at https://stats.stackexchange.com/a/117711/919 for more explanation and a simulation.)

Note, however, that the elements of $\Omega$ enjoy an unusual property: any two components of $\omega\in\Omega$ determine the third. (The third equals $1$ when the other two are not equal and otherwise the third equals $0.$) Thus, because almost all possible $U_i$ uniquely determine the sequence of $\omega_{ij}$ in their binary representations, with probability $1$ each is a function of the other two. Consequently, the distribution of (say) $U_3$ conditional on $U_1$ and $U_2$ is a constant, rather than being uniform. This is as far from independence as one can possibly get!

See the function predict3 in the appendix (below) for how the third of the $U_i$ is computed from the other two: you just represent the two values in binary, work out the corresponding binary representation for the third one, and convert that to a number.

-

Why if all the sequences should be mutually independent, then $U_i$ vs $U_{i-1}$ should span the unit square almost evenly?

Independence means the joint distribution function of $(U_i,U_{i-1})$ is the product of the marginal distribution functions. Having a uniform distribution means the chance that $U_i$ lies in an interval $[a,b]\subset[0,1]$ is $b-a.$ Thus, the chance that $(U_i,U_{i-1})$ lies within a rectangle $[a,b]\times[c,d]\subset [0,1]^2$ equals $(b-a)(d-c),$ which is the area of that rectangle. Thus, for rectangles at least, the chances are equal to the areas: they are uniform. A limiting argument is needed to show the distribution is truly uniform in the sense that the chance $(U_i,U_{i-1})$ lies in any arbitrary set $A\subset[0,1]^2$ of area $a$ is precisely $a.$ See https://stats.stackexchange.com/a/256580/919 for an example of how such arguments go.

-

If also the autocorrelation function signals no autocorrelation at any lag, what can we conclude? (That all the pairs $U_i, U_j$ with $i$ different from $j$ are not correlated?)

Yes. That's because the sequence $U_1,U_2,\ldots,U_n,\ldots$ is stationary: the distributions of $(U_i,U_j)$ and $(U_{i+s},U_{j+s})$ are the same for any positive integer $s.$

-

Since the elements of the sequence $U_1, U_2, \ldots$ should be mutually independent in order to be iid, should we check also the correlation between all the combinations (3-tuple, 4-tuple, ... N-tuple) and not only two consecutive pairs?

Yes. But even that's not enough: a generalization of the construction in the answer to question $(1)$ (changing from $3$ to $N+1$ components) provides an example of what can go wrong. But as a practical matter, such checks are an excellent idea: they are the basis for most procedures to check random number generators.

Appendix

This R code illustrates the calculations and produces the figures.

#

# Draw a sequence of `n` vectors from Omega.

#

rb3 <- function(n) {

z <- matrix(c(1,1,0, 0,1,1, 1,0,1, 0,0,0), 3, 4, dimnames=list(c("x1", "x2", "x3")))

z[, sample.int(4, n, replace=TRUE), drop=FALSE]

}

#

# Generate (U[1], U[2], U[3]) up to double precision.

#

ru <- function(nbits=52) {

rb3(nbits) %*% (1/2)^(1:nbits)

}

#

# From two components (x,y) of (U[1], U[2], U[3]), predict the third.

#

predict3 <- function(x,y, nbits=52) {

#--Convert a float between 0 and 1 into its binary representation

to.binary <- function(z) {

a <- integer(nbits)

for (i in 1:nbits) {

z <- 2*z

a[i] <- floor(z)

z <- z - a[i]

}

a

}

#--Convert a binary representation into a float between 0 and 1.

from.binary <- function(a) sum(a * (1/2)^(1:nbits))

from.binary(to.binary(x) != to.binary(y))

}

#

# Conduct a simulation of (U[1], U[2], U[3])

#

set.seed(17)

U <- t(replicate(1e3, ru())[,1,])

# sum((U[,3] - mapply(predict3, U[,1], U[,2]))^2) # Compares U[,3] to its predictions

#-- Scatterplot matrix

pairs(U, col="#00000040", labels=paste0("U[", 1:3, "]"))

#

# The plot of U[3] vs. (U[1], U[2]).

#

library(ggplot2)

b <- 8 # Number of bits in the values

x <- seq(0, 1, length.out=2^b+1)

x <- x[-length(x)]

X <- expand.grid(U1=x, U2=x)

# Compute U[3].

# X$U3 <- apply(as.matrix(X), 1, function(u) predict3(u[1], u[2], b+1)) # Long...

# -- Alternative (instantaneous):

library(bitops)

X$U3 <- with(X, bitXor(2^b*U1, 2^b*U2)) / 2^b

names(X) <- paste0("U", 1:3)

ggplot(X, aes(U1, U2)) +

geom_raster(aes(fill=U3)) +

scale_fill_gradientn(colors=rainbow(13)[1:10]) +

xlab(expression(U[1])) + ylab(expression(U[2])) +

guides(fill=guide_colorbar(expression(U[3]))) +

coord_fixed() +

ggtitle(expression(paste(U[3], " depends on ", U[1], " and ", U[2])))