Let's step back a bit and go to the primary use of cross validation: validation or verification, i.e. measuring predictive performance of a model you trained on some data at hand for the purpose of using it for prediction. No decision yet what that estimate is to be used for (if you like: no hyperparameter tuning, the model is final as it is). Also, for the moment we'll say that the blue Test data part of All data does not exist/is not available.

So we train a model on the training data and need to estimate its predictive performance. Cross validation now runs the training procedure $k + 1$ times:

- on the whole Training Data,

- and on each of the $k$ folds.

These runs are independent of each other in the sense that results of previous runs are completely "forgotten" for the next run.

The resulting models of course are not independendent of each other, as they were trained on almost the same training data. And neither should they be:

In order to take the test results for the $k$ surrogate models and use them as approximation for the generalization error of the final model,

we assume that the $k$ so-called surrogate models are equal or equivalent to the final model trained on the whole Training Data since their training data differs only in a negligible way (leaving out $\frac{1}{k}$ of the data points) from the whole Training data.

Now, this assumption often doesn't completely hold: the surrogate models being trained on less data are on average a bit worse than the final model. Thus, the well-known slight pessimistic bias of cross validation.

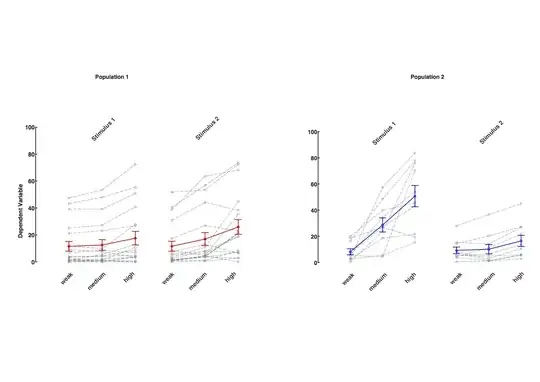

We can use a weaker assumption: the $k$ surrogate models are equal or equivalent to each other, in other words, the models are stable against exchanging a few ($\frac{2}{k-1}$th) of their training data for other cases.

We can check either across the surrogate models (do e.g. their slopes and/or intercepts change noticeably or hardly at all) or with repeated cross validaton (see below) whether that assumption is met, and if not, we say our models are unstable.

However, cross validation is not a good simulation of getting entirely new data sets due to the overlap (= correlation) between the training sets of the folds, so the relevant variance e.g. for algorithm comparison cannot be calculated from cross validation: for that we'd need the training sets to be independent of each other.

When CV is meant to provide more data to train on,

I'd say CV is mostly meant to provide more test data: we pool test results for all cases in the data set.

But none of the CV surrogate models has more training data than a fixed train/test split that reserves $\frac{1}{k}$ for testing. And the CV results are usually taken as approximation for the model trained on the whole data set.

why not just repeating the whole procedure arbitrary times? After performing all splits, you just repeat the entire process again.

As long as your training procedure is completely deterministic, whenever you encounter a split that was already evaluated, you won't get any new results. So repeating makes sense only if you generate new splits - and that is done. However, the new split is still testing cases that have been tested before, just with surrogate model that had slightly different training sample. You can use this to extract information about the stability of the results of the training procedure, but if the bottleneck is the actual number of tested cases it doesn't help.