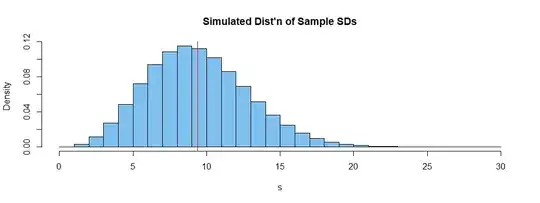

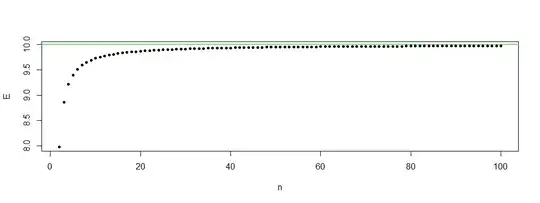

I am having trouble understanding intuitively why the expected value of the unbiased sample variance $s^2$ is not equal to the square of the expected value of the sample standard deviation $s = \sqrt{s^2}$.

In other words, it has been shown that for $$s^2 = \frac{1}{N-1}\sum_{i=1}^N \left(x_i -\overline{x} \right)^2,$$ where $\overline{x}=\frac{1}{N}\sum_{i=1}^N x_i$ is the sample mean, we have $$E\left[s^2 \right] = \sigma^2$$ and, for i.i.d. normally distributed $x_i$, $$E\left[ s \right] = \sigma \sqrt{\frac{2}{N-1}} \frac{\Gamma(N/2)}{\Gamma((N-1)/2)}.$$
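As a quick numerical check of the second formula, here is a minimal Python sketch (standard library only) that evaluates the factor $E[s]/\sigma$, often written $c_4(N)$, for a few sample sizes, assuming i.i.d. normal samples. The function name `expected_s_over_sigma` is hypothetical, chosen only for this illustration.

```python
import math


def expected_s_over_sigma(n: int) -> float:
    """Ratio E[s] / sigma for n i.i.d. normal samples, i.e. the factor in the formula above.

    Uses log-gamma so that Gamma(n/2) does not overflow for large n.
    """
    if n < 2:
        raise ValueError("need at least two samples")
    log_ratio = math.lgamma(n / 2) - math.lgamma((n - 1) / 2)
    return math.sqrt(2 / (n - 1)) * math.exp(log_ratio)


for n in (2, 3, 5, 10, 100):
    # sqrt(E[s^2]) / sigma is exactly 1, so any value below 1 here is the gap being asked about
    print(n, round(expected_s_over_sigma(n), 4))
```

The ratio stays below 1 for every finite $N$ and approaches 1 as $N$ grows, so the gap between $E[s]$ and $\sqrt{E[s^2]}$ is largest for small samples.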

Now, how come, if I expect to measure a variance of, e.g., $v=2$, I can't expect to measure a standard deviation of $s = \sqrt{v} = \sqrt{2}$? Again, I'm looking for an intuitive explanation (the math has already been worked out).
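To see the gap with the numbers from the question, the following sketch draws many samples of size $N = 5$ (an arbitrary choice) from a normal population with variance $v = 2$ and averages both $s^2$ and $s$ across them. The helper name `simulate`, the seed, and the trial count are illustrative assumptions, not part of the original question.

```python
import math
import random


def simulate(v: float, n: int, trials: int = 100_000, seed: int = 0) -> tuple[float, float]:
    """Return Monte Carlo averages of s^2 and s over `trials` samples of size n,
    each drawn i.i.d. from a normal population with variance v (normality assumed)."""
    rng = random.Random(seed)
    sigma = math.sqrt(v)
    total_s2 = 0.0
    total_s = 0.0
    for _ in range(trials):
        xs = [rng.gauss(0.0, sigma) for _ in range(n)]
        mean = sum(xs) / n
        s2 = sum((x - mean) ** 2 for x in xs) / (n - 1)  # unbiased sample variance
        total_s2 += s2
        total_s += math.sqrt(s2)  # sample standard deviation of this one draw
    return total_s2 / trials, total_s / trials


v, n = 2.0, 5  # v = 2 from the question; N = 5 chosen arbitrarily
mean_s2, mean_s = simulate(v, n)

# E[s] / sigma from the closed-form expression in the question
c4 = math.sqrt(2 / (n - 1)) * math.exp(math.lgamma(n / 2) - math.lgamma((n - 1) / 2))

print(f"average of s^2: {mean_s2:.4f}  (v = {v})")
print(f"average of s:   {mean_s:.4f}  (sqrt(v) = {math.sqrt(v):.4f}, formula = {math.sqrt(v) * c4:.4f})")

# Toy version of the same effect: if s^2 were 1 or 3 with equal probability, its average
# would be 2, yet the average of sqrt(1) and sqrt(3) is about 1.366, below sqrt(2) ~ 1.414.
```

With these settings the average of $s^2$ should land close to 2, while the average of $s$ should land close to $\sqrt{2}\,c_4(5)$, visibly below $\sqrt{2}$.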