Let's say for simplicity that each of the variables $A$, $B$ and $C$ has only two states, so that jointly they have $8$ states. As described in the answers to "What is the number of parameters needed for a joint probability distribution?", you will need $8-1=7$ independent parameters to describe this distribution (the $8$ probabilities must sum to $1$).

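You only have $5$ parameters: $P(A)$, $P(B)$, $P(C)$, $P(B \vert A)$ and $P(C \vert A)$, which leaves $7-5=2$ degrees of freedom unspecified. Note that the reverse conditionals $P(A \vert C)$ and $P(A \vert B)$ add no information, because by Bayes' rule $$P(C \vert A) = P(A \vert C) P(C)/P(A)$$ and $$P(B \vert A) = P(A \vert B) P(B)/P(A),$$ so they are already determined by the other $5$ parameters.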

Counter-example

Let the probabilities $P(A=a \cap B=b \cap C=c)$ be denoted by the numbers $n_{abc}$, where each subscript $a$, $b$, $c$ is $1$ if the corresponding variable $A$, $B$, $C$ is true and $0$ otherwise. Then we have $8$ unknown numbers, but only $6$ equations to define them:

$$\begin{array}{rcl}
n_{000}+n_{001}+n_{010}+n_{011}+n_{100}+n_{101}+n_{110}+n_{111} &=& 1 \\
n_{100}+n_{101}+n_{110}+n_{111} &=& P(A=1)\\
n_{010}+n_{011}+n_{110}+n_{111} &=& P(B=1)\\
n_{001}+n_{011}+n_{101}+n_{111} &=& P(C=1)\\
(n_{110}+n_{111})/(n_{100}+n_{101}+n_{110}+n_{111}) &=& P(B=1 \vert A=1) \\
(n_{101}+n_{111})/(n_{100}+n_{101}+n_{110}+n_{111}) &=& P(C=1 \vert A=1)
\end{array}$$
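To check the counting, here is a minimal sketch in Python (standard library only). It assumes $P(A=1) > 0$, so that the two conditional equations can be multiplied through by $P(A=1)$ and become linear in the $n_{abc}$ (for example $n_{110}+n_{111} = P(B=1 \vert A=1)\,P(A=1)$). The helper `matrix_rank` is a hypothetical name for a small exact Gaussian elimination, not a library function.

```python
from fractions import Fraction


def matrix_rank(rows):
    """Rank of a small matrix, computed by exact Gauss-Jordan elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    rank = 0
    for col in range(len(m[0])):
        # Look for a pivot at or below the current rank in this column.
        pivot = next((i for i in range(rank, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[rank], m[pivot] = m[pivot], m[rank]
        # Clear this column in every other row.
        for i in range(len(m)):
            if i != rank and m[i][col] != 0:
                factor = m[i][col] / m[rank][col]
                m[i] = [x - factor * y for x, y in zip(m[i], m[rank])]
        rank += 1
    return rank


# The 8 states (a, b, c) in the order n_000, n_001, ..., n_111.
states = [(a, b, c) for a in (0, 1) for b in (0, 1) for c in (0, 1)]

# Coefficient rows of the 6 equations (conditionals multiplied by P(A=1)).
rows = [
    [1 for (a, b, c) in states],      # total probability = 1
    [a for (a, b, c) in states],      # P(A=1)
    [b for (a, b, c) in states],      # P(B=1)
    [c for (a, b, c) in states],      # P(C=1)
    [a * b for (a, b, c) in states],  # P(B=1|A=1) * P(A=1)
    [a * c for (a, b, c) in states],  # P(C=1|A=1) * P(A=1)
]

rank = matrix_rank(rows)
free = len(states) - rank
print(rank, free)  # 6 2
```

The six equations are independent, so the solution set has $8-6=2$ free directions.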

So this system has multiple solutions, forming a family with two free parameters. To write them down, let $p_a = 1-q_a = P(A=1)$ (and similarly for $B$ and $C$) and introduce four additional numbers $r$, $s$, $t$ and $u$:

$$\begin{array}{rcl}
n_{000} &=& q_a q_b q_c + r + s + t + u \\
n_{001} &=& q_a q_b p_c + r - s - t - u \\
n_{010} &=& q_a p_b q_c - r + s - t - u \\
n_{011} &=& q_a p_b p_c - r - s + t + u \\
n_{100} &=& p_a q_b q_c - r - s + t - u \\
n_{101} &=& p_a q_b p_c - r + s - t + u \\
n_{110} &=& p_a p_b q_c + r - s - t + u \\
n_{111} &=& p_a p_b p_c + r + s + t - u
\end{array}$$

In these equations each $n_{abc}$ is written as the value it would have if $A$, $B$ and $C$ were independent (a product of $p$ and $q$ terms), plus extra terms $r$, $s$, $t$ and $u$ that make it deviate from independence. These extra terms leave probabilities such as $P(A=1)$ invariant (you can see it as the higher-dimensional version of this case: https://stats.stackexchange.com/a/363777/164061 ). When we substitute these $8$ expressions into the $6$ equations above, we get:

$$\begin{array}{rcl}
1 &=& 1 \\
p_a &=& P(A=1)\\
p_b &=& P(B=1)\\
p_c &=& P(C=1)\\
(p_b p_a + 2 r)/p_a &=& P(B=1 \vert A=1) \\
(p_c p_a + 2 s)/p_a &=& P(C=1 \vert A=1)
\end{array}$$
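The substitution can be checked numerically. The sketch below (Python, exact `Fraction` arithmetic) copies the sign table for $r$, $s$, $t$, $u$ from the parametrization above and plugs in arbitrary illustrative values (my own choice, not from the question). The helper names `joint` and `prob` are hypothetical. It confirms the six results: the total is $1$, the marginals are $p_a$, $p_b$, $p_c$, and the conditionals are $(p_b p_a + 2r)/p_a$ and $(p_c p_a + 2s)/p_a$.

```python
from fractions import Fraction as F

# Signs of (r, s, t, u) in n_abc, copied from the table above.
SIGNS = {
    (0, 0, 0): (+1, +1, +1, +1),
    (0, 0, 1): (+1, -1, -1, -1),
    (0, 1, 0): (-1, +1, -1, -1),
    (0, 1, 1): (-1, -1, +1, +1),
    (1, 0, 0): (-1, -1, +1, -1),
    (1, 0, 1): (-1, +1, -1, +1),
    (1, 1, 0): (+1, -1, -1, +1),
    (1, 1, 1): (+1, +1, +1, -1),
}


def joint(pa, pb, pc, r, s, t, u):
    """n_abc = independent product of p's and q's plus the r, s, t, u terms."""
    n = {}
    for (a, b, c), (sr, ss, st, su) in SIGNS.items():
        base = (pa if a else 1 - pa) * (pb if b else 1 - pb) * (pc if c else 1 - pc)
        n[(a, b, c)] = base + sr * r + ss * s + st * t + su * u
    return n


def prob(n, cond):
    """Sum of n_abc over the states (a, b, c) that satisfy cond."""
    return sum(v for k, v in n.items() if cond(*k))


# Illustrative values (an assumption for this check only).
pa, pb, pc = F(2, 5), F(1, 2), F(3, 10)
r, s, t, u = F(1, 100), F(-1, 100), F(1, 200), F(-1, 200)
n = joint(pa, pb, pc, r, s, t, u)

pA = prob(n, lambda a, b, c: a == 1)
checks = [
    prob(n, lambda a, b, c: True) == 1,
    pA == pa,
    prob(n, lambda a, b, c: b == 1) == pb,
    prob(n, lambda a, b, c: c == 1) == pc,
    prob(n, lambda a, b, c: a == 1 and b == 1) / pA == (pb * pa + 2 * r) / pa,
    prob(n, lambda a, b, c: a == 1 and c == 1) / pA == (pc * pa + 2 * s) / pa,
]
print(all(checks))  # True
```

Exact fractions are used so that the equalities hold exactly rather than up to floating-point rounding.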

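So $r$ and $s$ are fixed by the two conditional probabilities, while $t$ and $u$ do not appear in these equations at all. You can therefore find counter-examples by choosing different values for $t$ and $u$: each choice gives a different joint distribution that still satisfies your conditions $P(A)$, $P(B)$, $P(C)$, $P(B \vert A)$ and $P(C \vert A)$, as long as all $n_{abc}$ remain non-negative.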
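Solving the last two equations for $r$ and $s$ gives $r = p_a\,(P(B=1 \vert A=1) - p_b)/2$ and $s = p_a\,(P(C=1 \vert A=1) - p_c)/2$. The sketch below uses that to build distributions from the five given quantities plus a free choice of $t$ and $u$. The function names `distribution_from` and `five` and the input numbers are hypothetical, chosen only for illustration. The sign of each term is written compactly as $(-1)^{a+b}$, $(-1)^{a+c}$, $(-1)^{b+c}$ and $(-1)^{a+b+c}$, which reproduces the sign table above.

```python
from fractions import Fraction as F


def distribution_from(pA, pB, pC, pB_given_A, pC_given_A, t, u):
    """Joint n_abc matching the five given quantities, for a free choice of t, u."""
    r = pA * (pB_given_A - pB) / 2
    s = pA * (pC_given_A - pC) / 2
    n = {}
    for a in (0, 1):
        for b in (0, 1):
            for c in (0, 1):
                base = (pA if a else 1 - pA) * (pB if b else 1 - pB) * (pC if c else 1 - pC)
                n[(a, b, c)] = (base + (-1) ** (a + b) * r + (-1) ** (a + c) * s
                                + (-1) ** (b + c) * t + (-1) ** (a + b + c) * u)
    return n


def five(n):
    """P(A=1), P(B=1), P(C=1), P(B=1|A=1), P(C=1|A=1) computed from n_abc."""
    pA = sum(v for (a, b, c), v in n.items() if a)
    pB = sum(v for (a, b, c), v in n.items() if b)
    pC = sum(v for (a, b, c), v in n.items() if c)
    return (pA, pB, pC,
            (n[1, 1, 0] + n[1, 1, 1]) / pA,
            (n[1, 0, 1] + n[1, 1, 1]) / pA)


given = (F(2, 5), F(1, 2), F(3, 10), F(11, 20), F(1, 4))  # illustrative inputs
n1 = distribution_from(*given, t=F(0), u=F(0))
n2 = distribution_from(*given, t=F(1, 100), u=F(1, 200))

print(five(n1) == five(n2) == given)  # True: same five quantities
print(n1 == n2)                       # False: different joint distributions
```

Here $t$ and $u$ are kept small so that every $n_{abc}$ stays non-negative; larger values can push some entries below zero, which would no longer be a valid distribution.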

For example, the two distributions

$$n_{000}=n_{001}=n_{010}=n_{011}=n_{100}=n_{101}=n_{110}=n_{111} =0.125$$

and

$$\begin{array}{rcl}
n_{000}=n_{011}=n_{101}=n_{110}&=&0.1 \\
n_{001}=n_{010}=n_{100}=n_{111} &=&0.15
\end{array}$$

both have $P(A) = P(B) = P(C) = P(B \vert A) = P(C \vert A) = 0.5$, and in this particular case also $P(C \vert B) = 0.5$. In terms of the parametrization above, the first has $r=s=t=u=0$ and the second has $r=s=t=0$, $u=-0.025$.
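A quick check of the two example distributions, using exact fractions ($0.125 = 1/8$, $0.1 = 1/10$, $0.15 = 3/20$). The helper `summary` is a hypothetical name introduced just for this sketch.

```python
from fractions import Fraction as F


def summary(n):
    """P(A=1), P(B=1), P(C=1), P(B=1|A=1), P(C=1|A=1), P(C=1|B=1)."""
    pA = sum(v for (a, b, c), v in n.items() if a)
    pB = sum(v for (a, b, c), v in n.items() if b)
    pC = sum(v for (a, b, c), v in n.items() if c)
    pAB = n[1, 1, 0] + n[1, 1, 1]
    pAC = n[1, 0, 1] + n[1, 1, 1]
    pBC = n[0, 1, 1] + n[1, 1, 1]
    return (pA, pB, pC, pAB / pA, pAC / pA, pBC / pB)


states = [(a, b, c) for a in (0, 1) for b in (0, 1) for c in (0, 1)]

# First example: uniform, every n_abc = 0.125.
uniform = {k: F(1, 8) for k in states}

# Second example: 0.1 on 000, 011, 101, 110 and 0.15 on the other four states.
low = {(0, 0, 0), (0, 1, 1), (1, 0, 1), (1, 1, 0)}
other = {k: (F(1, 10) if k in low else F(3, 20)) for k in states}

print(sum(other.values()))                 # 1
print(summary(uniform) == summary(other))  # True
print(set(summary(other)))                 # {Fraction(1, 2)}
print(uniform == other)                    # False
```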

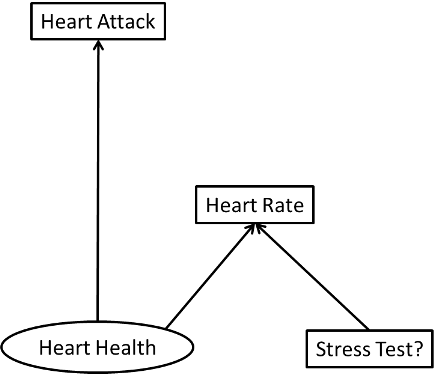

Graphical

The image below sketches a cube which is how I intuitively imagine these variables $r$, $s $, $t $, as a crossed pattern that changes the distribution while leaving the marginal distributions (including some conditional) invariant. And the variable $u $ will leave all marginal distributions invariant (when you take the sum of $n $ allong any rib then you get both a $+u $ and $-u $ term).
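The edge argument can be checked directly on the signs of the extra terms. In this sketch (Python) the signs are written as $(-1)^{a+b}$, $(-1)^{a+c}$, $(-1)^{b+c}$ and $(-1)^{a+b+c}$ for $r$, $s$, $t$, $u$, which reproduce the table of $n_{abc}$; an edge joins two corners of the cube that differ in exactly one of $a$, $b$, $c$. It counts, for each term, on how many of the 12 edges the two signs cancel.

```python
from itertools import product

# Sign of each extra term at corner (a, b, c); matches the table of n_abc.
SIGN = {
    "r": lambda a, b, c: (-1) ** (a + b),
    "s": lambda a, b, c: (-1) ** (a + c),
    "t": lambda a, b, c: (-1) ** (b + c),
    "u": lambda a, b, c: (-1) ** (a + b + c),
}

corners = list(product((0, 1), repeat=3))
# Edges: unordered pairs of corners that differ in exactly one coordinate.
edges = [(v, w) for v in corners for w in corners
         if v < w and sum(x != y for x, y in zip(v, w)) == 1]

cancelling = {name: sum(f(*v) + f(*w) == 0 for v, w in edges)
              for name, f in SIGN.items()}
print(len(edges), cancelling)  # 12 {'r': 8, 's': 8, 't': 8, 'u': 12}
```

The term $u$ cancels on all 12 edges. The term $r$ survives only on the 4 edges along the $C$ direction, whose sums are the pairwise probabilities $P(A=a, B=b)$, so it changes only the $(A,B)$ pairwise marginal; $s$ and $t$ behave the same way for $(A,C)$ and $(B,C)$.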