I'm measuring a nominal variable with four categories (attachment style) and another variable on a Likert scale (narcissism). How can I find out whether there is any correlation between any of the attachment styles and narcissism? Thank you!


Maybe you want to see if each level of the nominal scale has the same distribution of Likert scores. – BruceET Sep 23 '19 at 16:19


Yes, that's right. And if the difference in the distribution at each level is significant. – Dilyana G. Sep 23 '19 at 18:02


Hope examples in my Answer cover what you need. Try to get started and please let me know if you need explanations. Away from computer tomorrow, but back the day after. – BruceET Sep 23 '19 at 21:52

1 Answer

Some experiments. There are at least two ways in which Likert scores may differ between two groups.

(a) For example, considering Likert scores as categorical, one group might be 'decisive', with mainly 1s and 5s and almost no 3s, while the other might have lots of scores between 2 and 4 and almost no 1s or 5s. Both groups could then have a median near 3, so medians may not capture the essence of the difference.

(b) For example, considering Likert scores as ordinal (perhaps nearly 'numerical'), one group might have consistently low Likert scores (1s and 2s), and the other might have mostly 4s and 5s. In this case the median is meaningful, and the first group will have a lower median than the other.

In (a), you may find what you're looking for by using a chi-squared test of homogeneity, which checks whether the probabilities of the various scores are the same from one group to another. By contrast, in (b), a Kruskal-Wallis test might reveal differences in medians.
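To make the contrast concrete, here is a minimal sketch with hypothetical, hand-picked counts (constructed for illustration, not real data). In scenario (a) the two groups have the same median but different shapes; in scenario (b) their locations differ. The helper functions `chisq_p()` and `kw_p()` are illustrative names, not part of any package.

```r
# Hypothetical counts of Likert responses 1..5 (constructed, not real data)
# Scenario (a): same median, different shapes (45 subjects per group)
decisive <- rep(1:5, times = c(20, 2, 1, 2, 20))  # mostly 1s and 5s
moderate <- rep(1:5, times = c(1, 14, 15, 14, 1))  # mostly 2s, 3s and 4s
# Scenario (b): different locations (25 subjects per group)
low  <- rep(1:5, times = c(9, 9, 5, 1, 1))         # mostly 1s and 2s
high <- rep(1:5, times = c(1, 1, 5, 9, 9))         # mostly 4s and 5s

# Chi-squared test of homogeneity on a groups-by-scores contingency table
chisq_p <- function(x, y) {
  tab <- rbind(tabulate(x, nbins = 5), tabulate(y, nbins = 5))
  chisq.test(tab)$p.value
}
# Kruskal-Wallis test, which compares locations via ranks
kw_p <- function(x, y) kruskal.test(list(x, y))$p.value

c(median(decisive), median(moderate))  # 3 3: medians hide the difference
c(chisq = chisq_p(decisive, moderate), kw = kw_p(decisive, moderate))
c(median(low), median(high))           # 2 4
c(chisq = chisq_p(low, high), kw = kw_p(low, high))
```

With these counts only the chi-squared test flags scenario (a), because both groups are centered at 3, while both tests flag scenario (b).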

I have no idea whether your data are more like (a) or more like (b), nor how many subjects you have in total or in each of your 'attachment' groups.

For illustrations of the computations for the two kinds of tests in R, I have simulated some data that may have elements of both (a) and (b), and that have enough 'subjects' for a chi-squared test to be feasible. For each group, the weight vector passed to `sample()` (argument `prob`, which can be abbreviated `p`) gives relative proportions for the five Likert responses; R rescales these into probabilities. [For example, c(1,2,3,2,1) becomes the probability vector $(1/9,\,2/9,\,3/9,\,2/9,\,1/9)$.]

```r
set.seed(923)
a = sample(1:5, 40, replace = TRUE, prob = c(1,2,3,2,1))
b = sample(1:5, 43, replace = TRUE, prob = c(1,1,2,3,3))
c = sample(1:5, 38, replace = TRUE, prob = c(2,3,2,1,1))
d = sample(1:5, 45, replace = TRUE, prob = c(3,3,1,1,1))
```
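As a quick check on how `sample()` treats the weights, the sketch below normalizes the weights for group a by hand and compares them with the proportions in a large simulated sample. The sample size of 100,000 and the seed are arbitrary choices for illustration only.

```r
w <- c(1, 2, 3, 2, 1)   # relative weights, as used for group a
probs <- w / sum(w)     # normalized: 1/9, 2/9, 3/9, 2/9, 1/9
probs

set.seed(1)             # arbitrary seed, for reproducibility only
x <- sample(1:5, 1e5, replace = TRUE, prob = w)
# Observed proportions of each score should be close to probs
round(tabulate(x, nbins = 5) / length(x), 3)
```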

(a) Chi-squared test. We begin by tabulating the data and putting the counts into the contingency table MAT.

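```r
# nbins = 5 keeps a column for every Likert score, even one that no subject chose
a.tab = tabulate(a, nbins = 5); b.tab = tabulate(b, nbins = 5)
c.tab = tabulate(c, nbins = 5); d.tab = tabulate(d, nbins = 5)
MAT = rbind(a.tab, b.tab, c.tab, d.tab); MAT
```

```
      [,1] [,2] [,3] [,4] [,5]
a.tab    5   10   13    9    3
b.tab    5    3    9   14   12
c.tab    5   18    7    3    5
d.tab   21   12    4    3    5
```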
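Real survey data usually arrive as one row per subject rather than as four separate vectors. A minimal sketch, assuming a hypothetical data frame `survey` with columns `style` (attachment style) and `score` (Likert response 1 to 5), builds the same kind of contingency table with `table()`. Declaring the factor levels 1 to 5 keeps a column for any score nobody chose. The data here come from the same simulation as above, stored in a named list.

```r
set.seed(923)
# Same simulation as above, stored in a named list
groups <- list(
  a = sample(1:5, 40, replace = TRUE, prob = c(1, 2, 3, 2, 1)),
  b = sample(1:5, 43, replace = TRUE, prob = c(1, 1, 2, 3, 3)),
  c = sample(1:5, 38, replace = TRUE, prob = c(2, 3, 2, 1, 1)),
  d = sample(1:5, 45, replace = TRUE, prob = c(3, 3, 1, 1, 1))
)

# Hypothetical long-format data frame: one row per subject
survey <- data.frame(
  style = rep(names(groups), lengths(groups)),
  score = unlist(groups, use.names = FALSE)
)

# Rows = attachment style, columns = Likert score 1..5
MAT2 <- table(survey$style, factor(survey$score, levels = 1:5))
MAT2
chisq.test(MAT2)
```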

The chi-squared test finds highly significant differences among the distributions of Likert scores for the four groups.

```r
chisq.test(MAT)
```

```
        Pearson's Chi-squared test

data:  MAT
X-squared = 54.488, df = 12, p-value = 2.235e-07
```

We should check that the expected counts in the 20 cells of the table are all greater than about 3 and mostly greater than 5. There are enough subjects that every cell is fine: the smallest expected count is about 5.7.

```r
chisq.test(MAT)$expected
```

```
          [,1]      [,2]     [,3]     [,4]     [,5]
a.tab 8.674699 10.361446 7.951807 6.987952 6.024096
b.tab 9.325301 11.138554 8.548193 7.512048 6.475904
c.tab 8.240964  9.843373 7.554217 6.638554 5.722892
d.tab 9.759036 11.656627 8.945783 7.861446 6.777108
```
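The expected counts come from the margins of the table: under the null hypothesis of homogeneity, the expected count in row $i$, column $j$ is $E_{ij} = R_i C_j / N$, where $R_i$ is the row total, $C_j$ the column total and $N$ the grand total. The sketch below re-enters the observed counts printed above by hand (rows labeled a to d), computes the expected counts that way, and checks them against `chisq.test()` and the rule of thumb.

```r
# Observed counts copied from the table above (rows a-d, Likert scores 1-5)
MAT <- matrix(c( 5, 10, 13,  9,  3,
                 5,  3,  9, 14, 12,
                 5, 18,  7,  3,  5,
                21, 12,  4,  3,  5),
              nrow = 4, byrow = TRUE,
              dimnames = list(c("a", "b", "c", "d"), as.character(1:5)))

# Expected count = row total * column total / grand total
E <- outer(rowSums(MAT), colSums(MAT)) / sum(MAT)

all.equal(unname(E), unname(chisq.test(MAT)$expected))  # TRUE
min(E)       # smallest expected count, about 5.72
mean(E < 5)  # proportion of cells with expected count below 5: 0
```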

Pearson residuals can provide clues about where to look for important differences. The sum of the squares of these residuals is the chi-squared statistic, so residuals with absolute values greater than 2 or 3 may point to cells where the observed counts are much different from the expected counts (computed under the null hypothesis). For example, Group D has more than the expected number of Likert 1 responses (21 observed, about 10 expected).

```r
chisq.test(MAT)$residuals
```

```
           [,1]       [,2]       [,3]      [,4]       [,5]
a.tab -1.247655 -0.1122879  1.7902060  0.761138 -1.2321105
b.tab -1.416397 -2.4385567  0.1545311  2.367164  2.1707562
c.tab -1.128977  2.5997919 -0.2016439 -1.412187 -0.3021798
d.tab  3.598323  0.1005728 -1.6535826 -1.733861 -0.6826400
```
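The residuals and the statistic can be reproduced from their definitions: each Pearson residual is $(O_{ij} - E_{ij})/\sqrt{E_{ij}}$, and the chi-squared statistic is the sum of their squares. This sketch re-enters the observed counts printed above by hand (rows labeled a to d) and lists the cells whose residuals exceed 2 in absolute value.

```r
# Observed counts copied from the table above (rows a-d, Likert scores 1-5)
MAT <- matrix(c( 5, 10, 13,  9,  3,
                 5,  3,  9, 14, 12,
                 5, 18,  7,  3,  5,
                21, 12,  4,  3,  5),
              nrow = 4, byrow = TRUE,
              dimnames = list(c("a", "b", "c", "d"), as.character(1:5)))

ct  <- chisq.test(MAT)
E   <- ct$expected
res <- (MAT - E) / sqrt(E)   # Pearson residuals

sum(res^2)                   # about 54.488, matching X-squared above
which(abs(res) > 2, arr.ind = TRUE)  # cells worth a closer look
```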

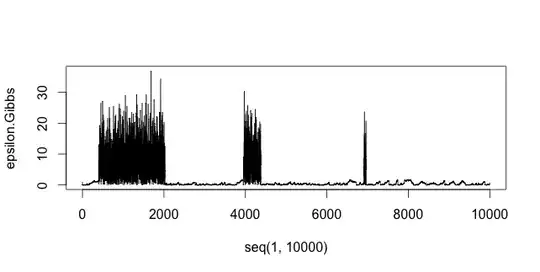

(b) Kruskal-Wallis test. We begin by looking at a boxplot of the Likert scores for the four groups. The sample medians are sufficiently different that we might expect significant differences in the locations of the groups. [The shapes of the distributions also differ somewhat, so there may be differences other than differences in location.]
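One way to draw a boxplot like the one described, from the same simulated data, is sketched below with base R graphics; the axis labels and title are illustrative. `boxplot()` also returns the summary statistics it plots, so the sample medians can be read off directly.

```r
set.seed(923)
# Same simulation as above, stored in a named list
groups <- list(
  a = sample(1:5, 40, replace = TRUE, prob = c(1, 2, 3, 2, 1)),
  b = sample(1:5, 43, replace = TRUE, prob = c(1, 1, 2, 3, 3)),
  c = sample(1:5, 38, replace = TRUE, prob = c(2, 3, 2, 1, 1)),
  d = sample(1:5, 45, replace = TRUE, prob = c(3, 3, 1, 1, 1))
)

# One box per group; the list names label the boxes
bp <- boxplot(groups, xlab = "Group", ylab = "Likert score",
              main = "Likert scores by group")
bp$stats[3, ]  # sample medians of the four groups
```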

There are highly significant differences in the locations of the Likert scores for the four groups. Post hoc pairwise comparisons using Wilcoxon rank-sum tests (perhaps at a significance level of 1% to guard against false discovery) would help to establish which specific groups differ from each other.

```r
kruskal.test(list(a,b,c,d))
```

```
        Kruskal-Wallis rank sum test

data:  list(a, b, c, d)
Kruskal-Wallis chi-squared = 28.284, df = 3, p-value = 3.166e-06
```
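For the post hoc comparisons mentioned above, base R's `pairwise.wilcox.test()` runs a Wilcoxon rank-sum test for every pair of groups and adjusts the p-values for multiple testing. This is a sketch on the same simulated data; the Holm adjustment is one reasonable choice (an assumption here, not part of the answer), and `exact = FALSE`, passed through to `wilcox.test()`, uses the normal approximation because Likert data are heavily tied.

```r
set.seed(923)
# Same simulation as above, stored in a named list
groups <- list(
  a = sample(1:5, 40, replace = TRUE, prob = c(1, 2, 3, 2, 1)),
  b = sample(1:5, 43, replace = TRUE, prob = c(1, 1, 2, 3, 3)),
  c = sample(1:5, 38, replace = TRUE, prob = c(2, 3, 2, 1, 1)),
  d = sample(1:5, 45, replace = TRUE, prob = c(3, 3, 1, 1, 1))
)

score <- unlist(groups, use.names = FALSE)
group <- rep(names(groups), lengths(groups))

# Independent groups, so rank-sum (not signed-rank) tests
pw <- pairwise.wilcox.test(score, group, p.adjust.method = "holm",
                           exact = FALSE)
pw
```

Adjusted p-values below the chosen level (for example 1%) indicate pairs of groups whose locations differ.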

Summary. Because the null hypothesis for the chi-squared test is not at all the same as the null hypothesis for the K-W test, you should think about scenarios (a) and (b) at the beginning of this answer and do whichever test matches your assumptions and goals. (I rigged my simulation so that both tests would give significant results, but that might not be true for your real data.)


Thank you so much for this long explanation! The first one will work. – Dilyana G. Sep 25 '19 at 06:49