I have the following data stored in a pandas Series:

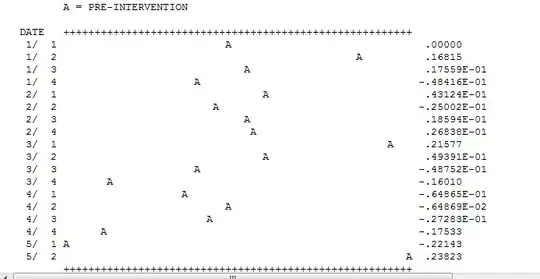

```
error
2019-08-06   -0.010112
2019-08-07    0.149606
2019-08-08    0.072981
2019-08-09   -0.028481
2019-08-13    0.016070
2019-08-14   -0.031424
2019-08-15   -0.009823
2019-08-16    0.008425
2019-08-20    0.205810
2019-08-21    0.130842
2019-08-22   -0.002020
2019-08-23   -0.174903
2019-08-27   -0.159731
2019-08-28   -0.094326
2019-08-29   -0.084832
2019-08-30   -0.228481
2019-09-03   -0.341104
2019-09-04    0.066397
```
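To make the example reproducible, the listing can be rebuilt as a Series. This is a sketch: the values are copied from the listing, while the `DatetimeIndex` and the Series name `error` are assumptions about how the original object was built (the name matches the `error.values` used in the code).

```python
import pandas as pd

# Dates and values transcribed from the listing; index type and name are assumed.
dates = pd.to_datetime([
    "2019-08-06", "2019-08-07", "2019-08-08", "2019-08-09",
    "2019-08-13", "2019-08-14", "2019-08-15", "2019-08-16",
    "2019-08-20", "2019-08-21", "2019-08-22", "2019-08-23",
    "2019-08-27", "2019-08-28", "2019-08-29", "2019-08-30",
    "2019-09-03", "2019-09-04",
])
values = [
    -0.010112, 0.149606, 0.072981, -0.028481,
    0.016070, -0.031424, -0.009823, 0.008425,
    0.205810, 0.130842, -0.002020, -0.174903,
    -0.159731, -0.094326, -0.084832, -0.228481,
    -0.341104, 0.066397,
]
error = pd.Series(values, index=dates, name="error")
print(len(error))
```

That gives 18 observations, which is the whole history available to `auto_arima` here.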

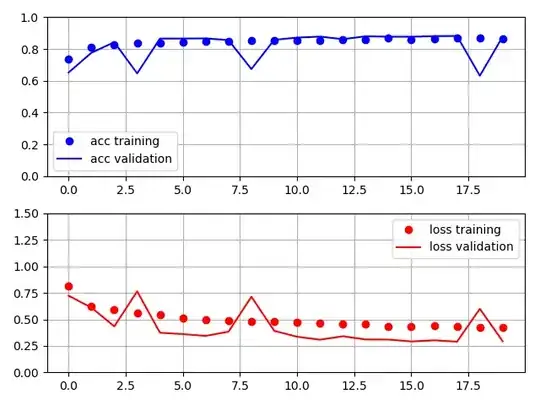

I am using the following code:

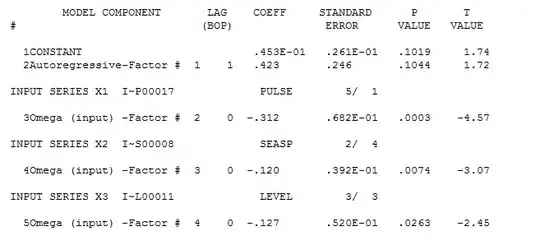

```python
import pmdarima as pm

# note: stepwise defaults to True; n_jobs, random and n_fits only take effect when stepwise=False
rs_fit = pm.auto_arima(error.values, start_p=1, start_q=1, max_p=3, max_q=3, m=12,
                       start_P=0, seasonal=False, trace=True,
                       n_jobs=-1,  # We can run this in parallel by controlling this option
                       error_action='ignore',  # don't want to know if an order does not work
                       suppress_warnings=True,  # don't want convergence warnings
                       random=True, random_state=42,
                       n_fits=25)

rs_fit.predict(n_periods=15)
```

I get the following output:

```
rs_fit.predict(n_periods=15)
Out[10]:
array([ 0.11260974, -0.02270731, -0.02270731, -0.02270731, -0.02270731,
       -0.02270731, -0.02270731, -0.02270731, -0.02270731, -0.02270731,
       -0.02270731, -0.02270731, -0.02270731, -0.02270731, -0.02270731])
```

I don't understand why, after the first step, every forecast repeats the same value (-0.02270731).
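One way this pattern can arise, assuming `auto_arima` selected a pure MA(1) model with an intercept (the order it actually chose can be checked with `rs_fit.order` or `rs_fit.summary()`): for y_t = μ + e_t + θ·e_{t-1}, future shocks are forecast as zero, so only the one-step forecast still uses the last in-sample residual e_T, and every later step equals μ. More generally, an MA(q) forecast settles on the mean after q steps. The function name and parameter values below are hypothetical and only illustrate the mechanism.

```python
import numpy as np


def ma1_forecast(mu, theta, last_resid, n_periods):
    """Point forecasts of an MA(1) model y_t = mu + e_t + theta * e_{t-1}.

    Future shocks e_{T+1}, e_{T+2}, ... have expected value zero, so only the
    one-step forecast still sees the last in-sample residual e_T; every later
    step collapses to the mean mu.
    """
    forecasts = np.full(n_periods, mu, dtype=float)
    if n_periods > 0:
        forecasts[0] = mu + theta * last_resid
    return forecasts


# Hypothetical parameter values, chosen only for illustration.
print(ma1_forecast(mu=-0.02, theta=0.6, last_resid=0.2, n_periods=5))
```

The output has the same shape as `Out[10]`: one distinct first value followed by a flat line at μ.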

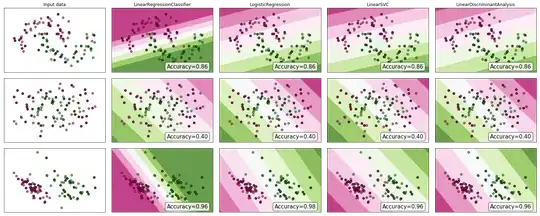

Edit: When I change the above to:

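```python
from pmdarima import auto_arima

# with seasonal=False the seasonal arguments (start_P, start_Q, max_P, max_Q, D, max_D) should be ignored
modl = auto_arima(error.values, start_p=1, start_q=1, start_P=1, start_Q=1,
                  max_p=5, max_q=5, max_P=5, max_Q=5, seasonal=False,
                  stepwise=True, suppress_warnings=True, D=10, max_D=10,
                  error_action='ignore')
```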

The results are drastically different:

```
Out[26]:
array([-0.17272289, -0.18657458, -0.20042626, -0.21427794, -0.22812963,
       -0.24198131, -0.255833 , -0.26968468, -0.28353636, -0.29738805,
       -0.31123973, -0.32509141, -0.3389431 , -0.35279478, -0.36664646])
```
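The second forecast changes by a constant amount (about -0.01385) from one step to the next, i.e. it is a straight line. One model family that produces this shape, assumed here purely for illustration (the fitted order should be checked, not assumed), is an ARIMA(0,1,1) with a drift term: the differences behave like an MA(1) forecast, and adding them back onto the last observed level turns a constant forecast difference into a straight line. The function name and values are hypothetical.

```python
import numpy as np


def arima011_drift_forecast(last_level, drift, theta, last_resid, n_periods):
    """Point forecasts of an ARIMA(0,1,1) with drift:
    y_t - y_{t-1} = drift + e_t + theta * e_{t-1}.
    """
    # Forecast the differences: as in MA(1), only step 1 uses the last residual.
    diffs = np.full(n_periods, drift, dtype=float)
    if n_periods > 0:
        diffs[0] = drift + theta * last_resid
    # Undo the differencing by accumulating the forecast differences onto the
    # last observed level; a constant difference yields a straight line.
    return last_level + np.cumsum(diffs)


# Hypothetical values, for illustration only.
path = arima011_drift_forecast(last_level=0.0, drift=-0.014, theta=-0.5,
                               last_resid=0.4, n_periods=6)
print(np.diff(path))  # every step after the first equals the drift
```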

Edit2:

How long should the `error` series be for a 15-period-ahead forecast? Is there any guideline for this? And how else can I improve the model fit above?
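One common way to compare candidate models (or amounts of history) is to hold out the last few observations, forecast them, and compare the error with a naive baseline such as the training mean. The sketch below assumes a hypothetical `holdout_mae` helper and uses a mean forecast as the stand-in model; with only 18 observations, a 15-step holdout would leave almost nothing to fit on, so the toy horizon is kept short.

```python
import numpy as np


def mean_forecaster(train, n_periods):
    """Naive baseline: forecast the training mean for every future step."""
    return np.full(n_periods, np.mean(train))


def holdout_mae(series, horizon, forecaster):
    """Fit on all but the last `horizon` points, forecast them, return the MAE."""
    series = np.asarray(series, dtype=float)
    if not 0 < horizon < len(series):
        raise ValueError("horizon must be between 1 and len(series) - 1")
    train, test = series[:-horizon], series[-horizon:]
    forecast = np.asarray(forecaster(train, horizon), dtype=float)
    return float(np.mean(np.abs(forecast - test)))


# Hypothetical usage on a toy series. A pmdarima model could be wrapped the same
# way, e.g. lambda train, h: pm.auto_arima(train, ...).predict(n_periods=h)
toy = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
print(holdout_mae(toy, horizon=2, forecaster=mean_forecaster))
```

A model that cannot beat the mean baseline on held-out data is unlikely to give a useful 15-step forecast, whatever its in-sample fit.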