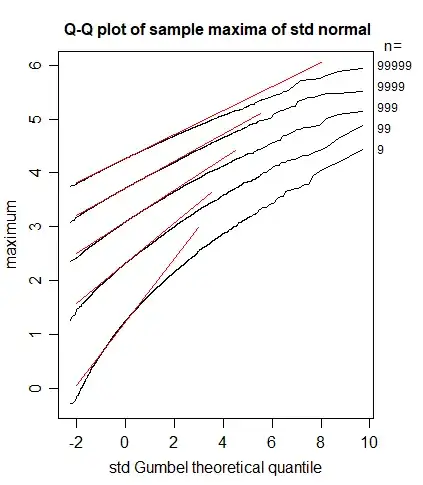

I would like to see the convergence of an order statistic to its respective Extreme Value attractor by simulating with the Metropolis Hastings algorithm (I am self-studying MCMC algos). I was trying with a known case, the Normal maximum convergence to the Gumbel distribution. However I can't get a satisfactory result.

[EDIT following comment]

I'm simulating through the Metropolis Hastings the following random variable

$max(x_1, x_2, ...x_n)$ with each $N(0,1)$

according to Extreme Value Theory - Show: Normal to Gumbel it should converge to the Gumbel distribution

I'm trying to reach this result by sampling with the MH algorithm both the distribution of the maximum and the Gumbel random variable. The distribution of the maximum turns out to be completely off with respect to the Gumbel rv.

I'm computing the acceptance ratio in this way

$\alpha = \min\left(1,\frac{f(x_{proposed})}{f(x_{previous})} \frac{q(x_{previous}|x_{proposed})}{q(x_{proposed}|x_{previous})}\right)$

where $f$ is the density of the order statistic $f^{max}_n(x) = n f(x) F(x)^{n-1}$ and $q$ is the density of the proposal, in my case $N\left(\mu = x_{previous},10\right)$ (I chose this value for the variance to get an acceptance rate of about $10\%$) with $x_0 = 0$

[/EDIT]

I show it below along with the Python code (I attach it to allow to replicate my result)

import numpy as np import matplotlib.pyplot as plt import scipy.stats as st

def MH(func, numsample, cntsample):

accepted_trials = []

scale = 10

floor = lambda x: 10e-14 if x < 10e-14 else x

pdfproposal = lambda x, loc, scale: st.norm.cdf(x, loc = loc, scale = scale)

qproposal = lambda loc, scale: np.random.normal(loc = loc, scale = scale)

counter = 0

for i in range(numsample):

if i == 0:

param_old = qproposal(loc = 0, scale = 1)

param_new = qproposal(loc = param_old, scale = scale)

alpha = min(1, func(param_new) / floor(func(param_old)) * \

pdfproposal(param_old, param_new, scale) / floor(pdfproposal(param_new, param_old, scale)))

u = np.random.uniform()

if alpha > u:

accepted_trials.append(param_new)

param_old = param_new

counter += 1

print(counter, i, sep = ' ')

if counter == cntsample:

break

return accepted_trials

This is the code of my hand-written MH algorithm

n = 9 # distribution of the maximum for a sample size of 9 observation normally distributed

f = lambda x: st.norm.cdf(x)**n

g = lambda x: (f(x + 0.00001) - f(x))/ 0.00001

gumbelpdf = lambda x: np.exp(-x - np.exp(-x))

u = np.random.uniform(size = 50000)

accepted_trials_ordstat = MH(g, 200000, 50000)

accepted_trials_gumbel = MH(gumbelpdf, 200000, 50000)

Here I do feed it with the pdf (taking the numerical derivative makes no difference) of the maximum $f^{max}_n(x) = n f(x) F(x)^{n-1}$. Below the plots.

The blue line is the pdf MH-sampled of the order statistic, the orange line is the MH-sampled Gumbel distribution, the green line is Gumbel rv sampled directly with the inverse of cdf.

Playing with variance is of no help, actually the algorithm seems to be right, because if I compute $P(X \leq 0)$ with $F(x)$ of the maximum I get a value of 1.5% (it shrinks further if I increase $n$), while it's about 36% for the Gumbel. I am confused.